-

When I try to run the given example `scl run --envision examples/single_agent.py scenarios/sumo/loop`, the error `smarts.core.remote_agent.RemoteAgentException: Timeout while connecting to remote wor…

-

Here is where the error happens:

This situation has become much rarer than in some version be…

-

## **BUG REPORT**

**I am trying to run the provided MARL benchmark example and am facing a gRPC timeout issue.**

**SMARTS version**

0.4.18

**Previous associated issues**

https://github.com/hua…

-

### Did you test the latest `bugfix-2.1.x` code?

Yes, and the problem still exists.

### Bug Description

There is no updated delta config file for the new version of marlin, enabling delta under nor…

-

Hi, thanks for providing such a powerful open-source framework. This is an awesome repo for MARL training and is what we needed the most to implement some distributed training algorithm without buildi…

-

Awesome work extending the original pymarl! It would be even better if MPE could be included.

Is there any plan to open source the MPE-related code in this repository?

-

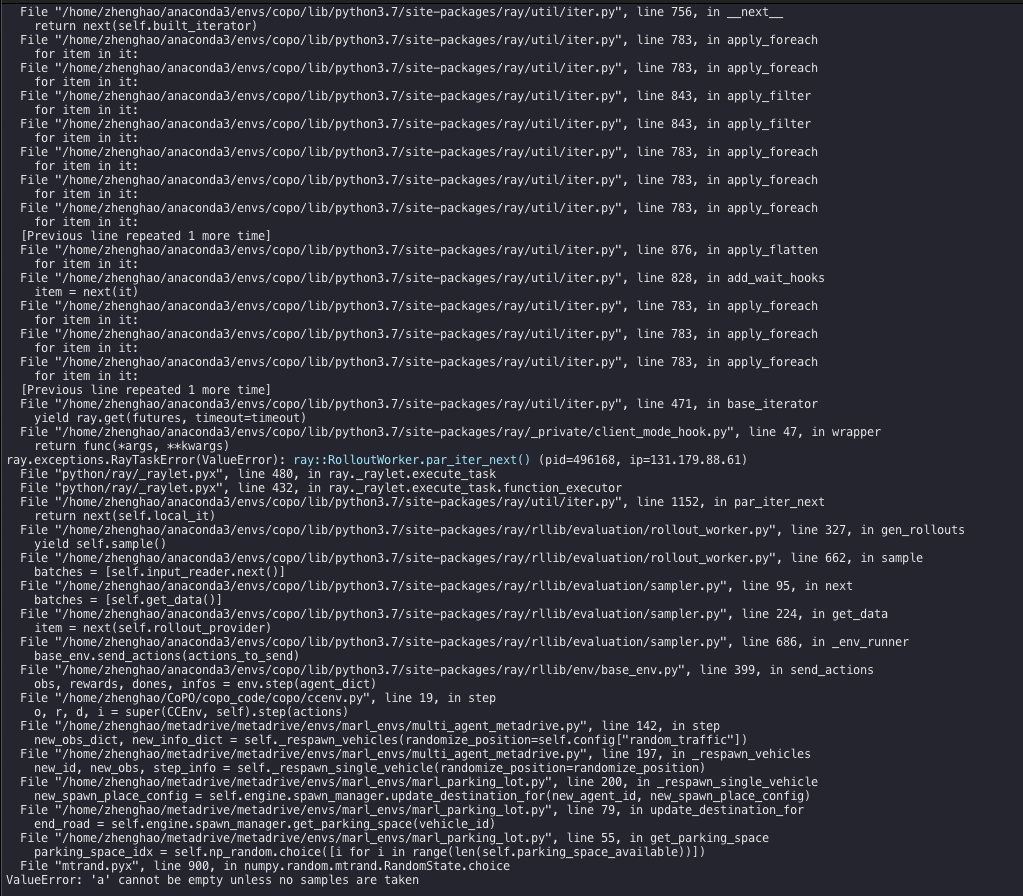

### Search before asking

- [X] I searched the [issues](https://github.com/ray-project/ray/issues) and found no similar issues.

### Ray Component

RLlib

### What happened + What you expect…

-

Title contains keyword: reinforcement learning

Abstract contains keyword: MARL

-

A comment in `marl/algos/hyperparams/finetuned/mpe/maddpg.yaml` suggests:

```console
# Detailed explanation for each hyper parameter can be found in ray/rllib/agents/ddpg/ddpg.py
```

However…

-

After testing HAPPO, I found that `happo_surrogate_loss` does not take the other agents into account when computing each agent's loss. Is this a problem?
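For comparison, this is how I understand the sequential update in the HAPPO paper: each agent's clipped surrogate uses the advantage multiplied by the product of the probability ratios of the agents already updated in that round. Below is a minimal pure-Python sketch of that idea for a single sample. It is not this repository's `happo_surrogate_loss`; the function names, the argument layout, and the default `eps=0.2` are all hypothetical and chosen only for illustration.

```python
import math


def clip(x, lo, hi):
    """Clamp x to the interval [lo, hi]."""
    return max(lo, min(hi, x))


def happo_style_surrogate(logp_new, logp_old, advantage, prev_agents_ratio, eps=0.2):
    """Clipped surrogate for one agent in a sequential (HAPPO-style) update.

    prev_agents_ratio is the product of new/old probability ratios of the
    agents already updated in this round (1.0 for the first agent).
    All names here are hypothetical, not taken from the repository.
    """
    ratio = math.exp(logp_new - logp_old)
    # The joint advantage is reweighted by the earlier agents' ratios.
    m = prev_agents_ratio * advantage
    return min(ratio * m, clip(ratio, 1.0 - eps, 1.0 + eps) * m)


def sequential_update_objectives(agents, advantage, eps=0.2):
    """Compute per-agent objectives in update order for a single sample.

    agents: list of (logp_new, logp_old) tuples, in the order agents are updated.
    """
    prev = 1.0
    objectives = []
    for logp_new, logp_old in agents:
        objectives.append(
            happo_style_surrogate(logp_new, logp_old, advantage, prev, eps)
        )
        # Later agents see the ratios of every agent updated before them.
        prev *= math.exp(logp_new - logp_old)
    return objectives
```

If the loss in the repository never receives something like `prev_agents_ratio`, each agent would be optimizing an ordinary per-agent PPO objective, which is the behaviour I am asking about.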