Attention-based Contrastive Learning for Winograd Schemas

News

- 11/24/2021: Provided source code

In this repository we provide the source code for the paper Attention-based Contrastive Learning for Winograd Schemas, published in Findings of EMNLP 2021. The code is partly based on the code from Huggingface Transformers and from the paper A Surprisingly Robust Trick for the Winograd Schema Challenge.

Abstract

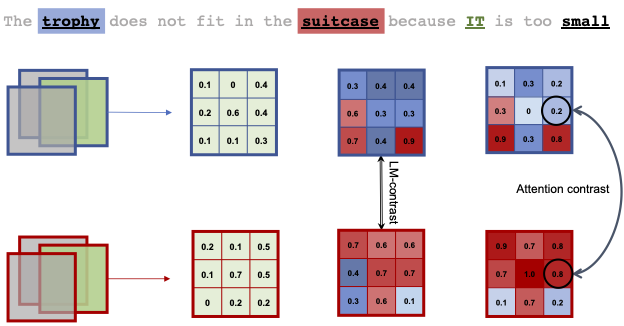

Self-supervised learning has recently attracted considerable attention in the NLP community for its ability to learn discriminative features using a contrastive objective. This paper investigates whether contrastive learning can be extended to Transformer attention to tackle the Winograd Schema Challenge. To this end, we propose a novel self-supervised framework, leveraging a contrastive loss directly at the level of self-attention. Experimental analysis of our attention-based models on multiple datasets demonstrates superior commonsense reasoning capabilities. The proposed approach outperforms all comparable unsupervised approaches while occasionally surpassing supervised ones.
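The abstract describes a self-supervised objective applied directly to self-attention. As a rough, hypothetical illustration (not the loss implemented in main-AMEX.py), the sketch below scores a Winograd twin pair: two sentences that differ by one trigger word, which flips the correct referent. It assumes that, for each sentence, the raw attention from the pronoun to the two candidate mentions is already available; the function names and the mutual-exclusivity form of the loss are illustrative choices, not taken from the paper. Plain Python is used instead of PyTorch so the example runs standalone.

```python
import math


def normalize(scores):
    """Softmax over raw attention scores, e.g. [score_cand_A, score_cand_B]."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def mutual_exclusivity_loss(att_s1, att_s2, eps=1e-12):
    """Hypothetical self-supervised loss for one Winograd twin pair.

    att_s1 and att_s2 hold raw pronoun-to-candidate attention scores
    [cand_A, cand_B] for the two sentences of the pair. Since the trigger
    word flips the referent, no label is needed: the loss is low when the
    two sentences attend to different candidates.
    """
    p1 = normalize(att_s1)
    p2 = normalize(att_s2)
    # Probability mass of the two "exclusive" configurations (A, B) and (B, A).
    exclusive = p1[0] * p2[1] + p1[1] * p2[0]
    return -math.log(exclusive + eps)


# Opposite preferences across the twin pair -> small loss (about 0.09).
print(mutual_exclusivity_loss([3.0, 0.0], [0.0, 3.0]))
# Same preference in both sentences -> large loss (about 2.40).
print(mutual_exclusivity_loss([3.0, 0.0], [3.0, 0.0]))
```

Because this objective only rewards the two sentences for disagreeing, it needs no label for which candidate is correct; in an actual model the scores would come from a Transformer attention head and the loss would be minimized by gradient descent.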

Authors: Tassilo Klein, Moin Nabi

Requirements

- Python (version 3.6 or later)

- PyTorch

- Huggingface Transformers

Download and Installation

- Install the requirements:

conda install --yes --file requirements.txt

or

pip install -r requirements.txt

- Clone this repository and install dependencies:

git clone https://github.com/SAP-samples/emnlp2021-attention-contrastive-learning
cd emnlp2021-attention-contrastive-learning
pip install -r requirements.txt

- Create a 'data' subdirectory and download the files for PDP, the WSC challenge, KnowRef, DPR and WinoGrande:

mkdir data
cd data
wget https://cs.nyu.edu/faculty/davise/papers/WinogradSchemas/PDPChallenge2016.xml
wget https://cs.nyu.edu/faculty/davise/papers/WinogradSchemas/WSCollection.xml
wget https://raw.githubusercontent.com/aemami1/KnowRef/master/Knowref_dataset/knowref_test.json
wget http://www.hlt.utdallas.edu/~vince/data/emnlp12/train.c.txt
wget http://www.hlt.utdallas.edu/~vince/data/emnlp12/test.c.txt
wget https://storage.googleapis.com/ai2-mosaic/public/winogrande/winogrande_1.1.zip
unzip winogrande_1.1.zip
rm winogrande_1.1.zip
cd ..
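Before training, a quick sanity check can catch a missing package or download. The following is a minimal sketch, not part of the repository: the expected file names are taken from the wget URLs above, while the folder name winogrande_1.1 (assumed to be what the zip unpacks to) is an assumption, and the helper names missing_modules and missing_data are hypothetical.

```python
import importlib.util
from pathlib import Path

# Packages listed under Requirements, by their usual import names.
REQUIRED_MODULES = ["torch", "transformers"]

# Entries expected inside data/ after the download step.
# "winogrande_1.1" is an assumption about the folder the zip unpacks to.
EXPECTED_DATA = [
    "PDPChallenge2016.xml",
    "WSCollection.xml",
    "knowref_test.json",
    "train.c.txt",
    "test.c.txt",
    "winogrande_1.1",
]


def missing_modules(names):
    """Return the top-level module names that cannot be found."""
    return [n for n in names if importlib.util.find_spec(n) is None]


def missing_data(data_dir="data", expected=EXPECTED_DATA):
    """Return the expected entries that do not exist under data_dir."""
    root = Path(data_dir)
    return [name for name in expected if not (root / name).exists()]


if __name__ == "__main__":
    print("missing packages:", missing_modules(REQUIRED_MODULES) or "none")
    print("missing data:", missing_data() or "none")
```

Run it from the repository root, where the data directory was created; an empty result for both checks means the setup steps completed.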

Train

python main-AMEX.py --do_train --do_eval --learning_rate 1.0e-5 --shuffle --ignore_bestacc \
  --loadcachedfeatures --train_batch_size 18 --num_train_epochs 22 --eval_batch_size 10 \
  --max_seq_length 40 --alpha_param 0.05 --beta_param 0.02 --lambda_param 1.0 \
  --warmup_steps 150 --bert_model=roberta-large --cache_dir cache/ --data_dir=data/ \
  --output_dir model_output/ --task_name wscr

How to obtain support

Create an issue in this repository if you find a bug or have questions about the content. For additional support, ask a question in SAP Community.

Citations

If you use this code in your research, please cite:

@inproceedings{klein-nabi-2021-attention-based,
    title = "Attention-based Contrastive Learning for {W}inograd Schemas",
    author = "Klein, Tassilo and
      Nabi, Moin",
    booktitle = "Findings of the Association for Computational Linguistics: EMNLP 2021",
    month = nov,
    year = "2021",
    address = "Punta Cana, Dominican Republic",
    publisher = "Association for Computational Linguistics",
    url = "https://aclanthology.org/2021.findings-emnlp.208",
    pages = "2428--2434"
}

License

Copyright (c) 2024 SAP SE or an SAP affiliate company. All rights reserved. This project is licensed under the Apache Software License, version 2.0 except as noted otherwise in the LICENSE file.