Steven Rowe (@sarowe) (migrated from JIRA)

Confirming there is an issue, but I don't think the spellings of "example.com" and "example.net" are the problem; more likely this is related to an end-of-input issue. This test, added to TestUAX29URLEmailTokenizer, fails for me:

    public void testExampleURLs() throws Exception {
      Analyzer analyzer = new Analyzer() {
        @Override
        protected TokenStreamComponents createComponents(String fieldName) {
          return new TokenStreamComponents(new UAX29URLEmailTokenizer(newAttributeFactory()));
        }
      };

      // A trailing space allows these to succeed
      BaseTokenStreamTestCase.assertAnalyzesTo(analyzer, "example.com ", new String[]{"example.com"}, new String[]{"<URL>"});
      BaseTokenStreamTestCase.assertAnalyzesTo(analyzer, "example.net ", new String[]{"example.net"}, new String[]{"<URL>"});

      // These fail
      BaseTokenStreamTestCase.assertAnalyzesTo(analyzer, "example.com", new String[]{"example.com"}, new String[]{"<URL>"});
      BaseTokenStreamTestCase.assertAnalyzesTo(analyzer, "example.net", new String[]{"example.net"}, new String[]{"<URL>"});
    }

So there is an issue here with no-scheme end-of-input URLs not being recognized as type `<URL>`.
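
To illustrate the kind of end-of-input behavior suspected here, the following is a minimal, self-contained sketch. It is not the tokenizer's actual grammar: `EndOfInputDemo`, `STRICT`, `LENIENT`, and `classify` are hypothetical names, and the regular expressions are only an assumed stand-in for a URL rule whose trailing context requires a delimiter after the host.

```java
import java.util.regex.Pattern;

// Hypothetical toy classifier for illustration only; this is NOT Lucene's grammar.
final class EndOfInputDemo {
    // Assumed host shape: dot-separated labels ending in an alphabetic TLD.
    private static final String HOST =
        "[A-Za-z0-9-]+(?:\\.[A-Za-z0-9-]+)*\\.[A-Za-z]{2,}";

    // Strict variant: the lookahead demands a whitespace character after the host,
    // so a host that ends exactly at end of input can never match.
    static final Pattern STRICT = Pattern.compile("^" + HOST + "(?=\\s)");

    // Lenient variant: end of input is accepted as a delimiter as well.
    static final Pattern LENIENT = Pattern.compile("^" + HOST + "(?=\\s|$)");

    // Returns "<URL>" if the given rule matches at the start of the input,
    // otherwise falls back to "<ALPHANUM>".
    static String classify(Pattern urlRule, String input) {
        return urlRule.matcher(input).find() ? "<URL>" : "<ALPHANUM>";
    }

    public static void main(String[] args) {
        for (String input : new String[] {"example.com ", "example.com"}) {
            System.out.println("\"" + input + "\""
                + " strict=" + classify(STRICT, input)
                + " lenient=" + classify(LENIENT, input));
        }
    }
}
```

With the strict rule, only the input with a trailing space is classified as `<URL>`, which mirrors the symptom in the test above; the lenient rule classifies both inputs as `<URL>`. Whether the real cause has this shape is an assumption.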

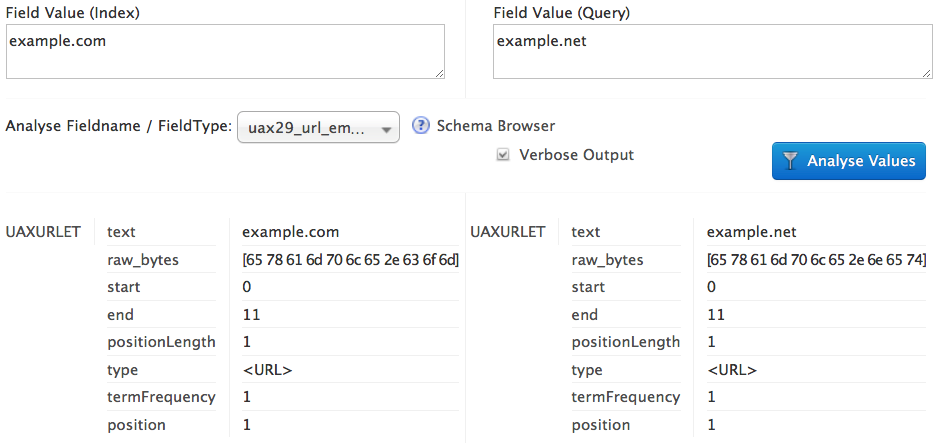

and with the patch:

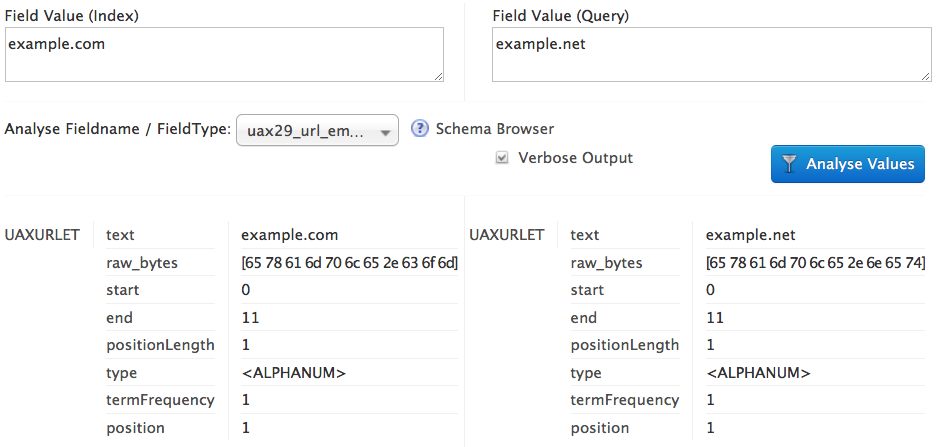

We are using the UAX29URLEmailTokenizer so we can use the token types in our plugins.

However, I noticed that the tokenizer classifies certain URLs as `<ALPHANUM>` instead of `<URL>`.

Examples that are not working:

But:

Examples that work:

I have searched JIRA and could not find an existing issue for this. I have tested this on Lucene (Solr) 6.4.1 and 7.3.

Could someone confirm my findings and advise what I could do to (help) resolve this issue?

Migrated from LUCENE-8278 by Junte Zhang, resolved Jun 12 2018

Attachments: LUCENE-8278.patch, patched.png, unpatched.png

Linked issues: 6454