Thanks for your request! Everything looks good. :ok_hand:

A Governance Team member will review the information provided and get back to you shortly.


Hi @simonkim0515, thank you for your time. Our team's application was included in the Governance Call. We see that you have approved many projects, but not ours yet. Is there any information we need to add?

Total DataCap requested

5PiB

Expected weekly DataCap usage rate

100TiB

Client address

f1rtvg6dhiko5gmuay62z7p5xgvxdc22am6ck73ly

Multisig Notary address

f02049625

Client address

f1rtvg6dhiko5gmuay62z7p5xgvxdc22am6ck73ly

DataCap allocation requested

50TiB

Id

94143945-4f64-48e5-82ad-f7258b1024d6

Could you provide some screenshots showing that you have more than 500T of raw data?

Can you publish the Filecoin nodes (storage providers) you plan to store the data with?

Hi @kernelogic, thanks for your interest in our project. I uploaded some screenshots to Google Drive: https://drive.google.com/drive/folders/1_X5dyqdiQ9PaFrFQmo_VLkhn3X286ceL?usp=share_link. Please take a look and let us know if you have any further questions.

Hi @psh0691, thanks for your interest in our project. For the first few rounds of DataCap, we plan to seal part of the data with the f01985745 and f033462 nodes, although the final distribution will depend on the actual sealing. To comply with the sealing rules, we are actively talking with SPs such as newwebgroup and nd labs. We aim to spread DataCap sealing across different SPs, but this will take some time.

Your Datacap Allocation Request has been proposed by the Notary

bafy2bzaceaf5b5fob7vg5eproq7y3etgv5u7qm7pbqoz2who5f7o37tz3sjyu

Address

f1rtvg6dhiko5gmuay62z7p5xgvxdc22am6ck73ly

Datacap Allocated

50.00TiB

Signer Address

f1qdko4jg25vo35qmyvcrw4ak4fmuu3f5rif2kc7i

Id

94143945-4f64-48e5-82ad-f7258b1024d6

You can check the status of the message here: https://filfox.info/en/message/bafy2bzaceaf5b5fob7vg5eproq7y3etgv5u7qm7pbqoz2who5f7o37tz3sjyu

Your Datacap Allocation Request has been approved by the Notary

bafy2bzacecnwlixbrcwfy3lzhd77ffswa7gux53oxyw25avbeslewcjkjpoim

Address

f1rtvg6dhiko5gmuay62z7p5xgvxdc22am6ck73ly

Datacap Allocated

50.00TiB

Signer Address

f1yjhnsoga2ccnepb7t3p3ov5fzom3syhsuinxexa

Id

94143945-4f64-48e5-82ad-f7258b1024d6

You can check the status of the message here: https://filfox.info/en/message/bafy2bzacecnwlixbrcwfy3lzhd77ffswa7gux53oxyw25avbeslewcjkjpoim

Multisig Notary address

f02049625

Client address

f1rtvg6dhiko5gmuay62z7p5xgvxdc22am6ck73ly

DataCap allocation requested

100TiB

Id

72be16b5-7e50-4d1d-b6a5-c4b69d555d71

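Multisig Notary address

f01858410

Client address

f1rtvg6dhiko5gmuay62z7p5xgvxdc22am6ck73ly

Last two approvers

kernelogic & psh0691

Rule to calculate the allocation request amount

100% of weekly dc amount requested

DataCap allocation requested

100TiB

Total DataCap granted for client so far

50TiB

DataCap to be granted to reach the total amount requested by the client (5PiB)

4.95PiB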
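The 100TiB and 4.95PiB figures above follow from simple arithmetic on the request: 100% of the 100TiB weekly rate, and 5PiB requested minus the 50TiB already granted. The sketch below reproduces them. It assumes binary units (1 PiB = 1024 TiB) and a tranche capped at whatever is still outstanding. `next_tranche` is a hypothetical helper, not the bot's actual code.

```python
TIB_PER_PIB = 1024  # assumption: binary units, 1 PiB = 1024 TiB


def next_tranche(total_requested_tib, granted_tib, weekly_rate_tib, rule_pct=100):
    """Hypothetical helper: size of the next tranche and DataCap still outstanding.

    The tranche is rule_pct% of the weekly rate, capped at what is still
    outstanding; `remaining` is measured before the new tranche is granted.
    """
    remaining = total_requested_tib - granted_tib
    tranche = min(weekly_rate_tib * rule_pct / 100, remaining)
    return tranche, remaining


tranche, remaining = next_tranche(5 * TIB_PER_PIB, 50, 100)
print(f"tranche: {tranche:.0f}TiB, remaining: {remaining / TIB_PER_PIB:.2f}PiB")
# prints: tranche: 100TiB, remaining: 4.95PiB
```

With decimal units (5000 - 50 = 4950 TiB) the rounded result is also 4.95, so the summary line alone does not reveal which convention the bot uses.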

| Number of deals | Number of storage providers | Previous DC Allocated | Top provider | Remaining DC |
|---|---|---|---|---|
| 1184 | 3 | 50TiB | 37.84% | 0B |

Client: Zilaiyun Design (f1rtvg6dhiko5gmuay62z7p5xgvxdc22am6ck73ly)

Approvers: kernelogic (1), psh0691 (1)

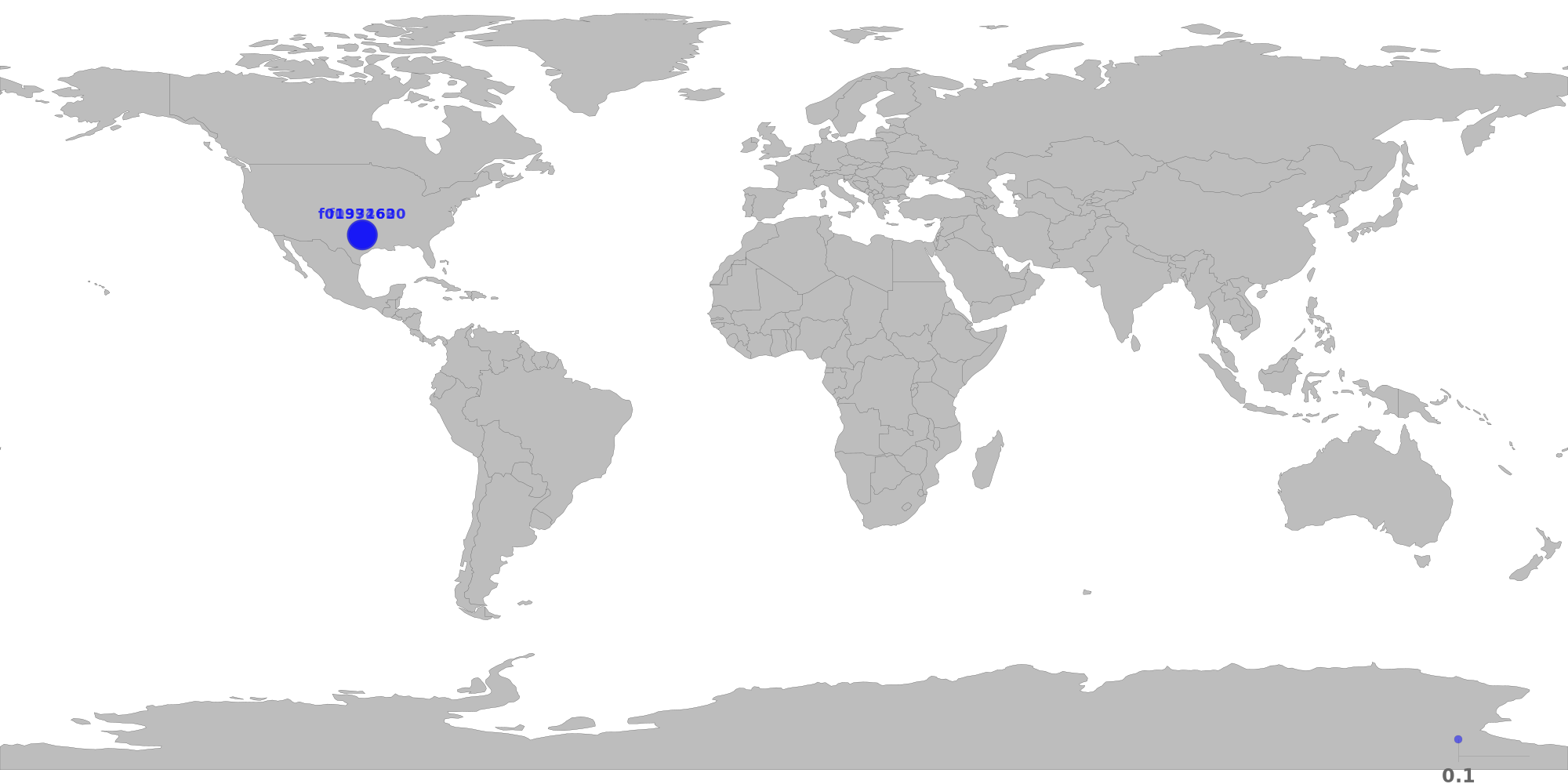

The below table shows the distribution of storage providers that have stored data for this client.

If this is the first time a provider takes a verified deal, it will be marked as new.

For most DataCap applications, the restrictions below should apply.

Since this is the 3rd allocation, the following restrictions have been relaxed:

⚠️ All storage providers are located in the same region.

| Provider | Location | Total Deals Sealed | Percentage | Unique Data | Duplicate Deals |
|---|---|---|---|---|---|
| f01971600 | Dallas, Texas, US (Flexential Colorado Corp.) | 14.00 TiB | 37.84% | 14.00 TiB | 0.00% |
| f01992630 | Dallas, Texas, US (Flexential Colorado Corp.) | 13.00 TiB | 35.14% | 13.00 TiB | 0.00% |
| f033462 | Dallas, Texas, US (Flexential Colorado Corp.) | 10.00 TiB | 27.03% | 10.00 TiB | 0.00% |
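Each percentage in the provider table is that provider's sealed data divided by the 37 TiB total. The largest share, 37.84%, is the "Top provider" figure in the earlier summary. The sketch below recomputes those shares and the same-region warning from the table's values. It assumes the location string is the only signal for "same region"; the real checker may geolocate differently. `provider_shares` and `single_region` are hypothetical helper names.

```python
# Values transcribed from the provider table: (location, TiB sealed) per SP.
deals = {
    "f01971600": ("Dallas, Texas, US", 14.00),
    "f01992630": ("Dallas, Texas, US", 13.00),
    "f033462": ("Dallas, Texas, US", 10.00),
}


def provider_shares(deals):
    """Map each provider to its percentage of all sealed data, rounded to 2 dp."""
    total = sum(size for _, size in deals.values())
    return {sp: round(100 * size / total, 2) for sp, (_, size) in deals.items()}


def single_region(deals):
    """True when every provider reports the same location string (assumed rule)."""
    return len({loc for loc, _ in deals.values()}) == 1


shares = provider_shares(deals)
print(max(shares.values()))  # prints 37.84
print(single_region(deals))  # prints True
```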

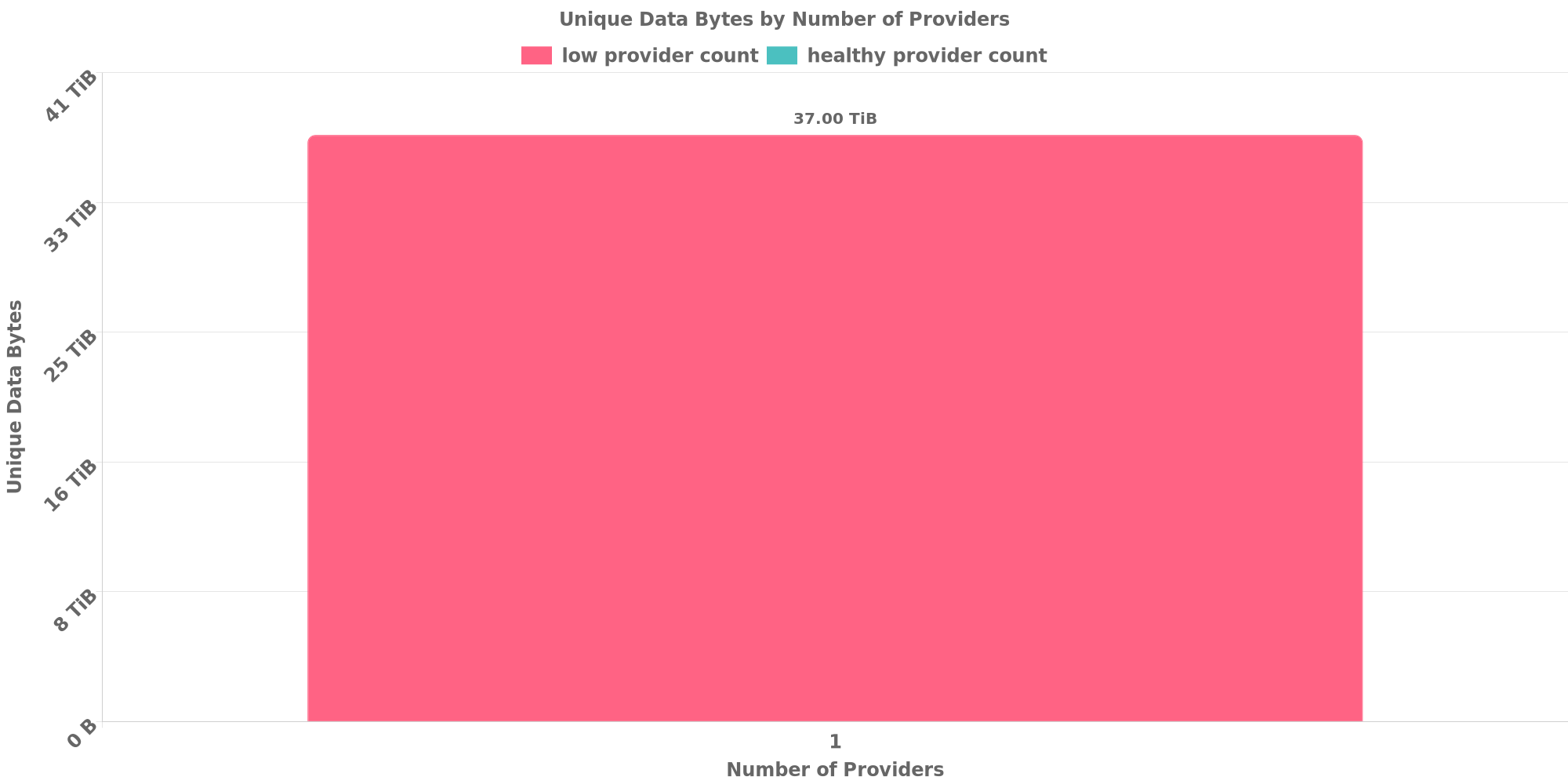

The table below shows how much unique data is replicated across storage providers.

Since this is the 3rd allocation, the following restrictions have been relaxed:

⚠️ 100.00% of deals are for data replicated across less than 3 storage providers.

| Unique Data Size | Total Deals Made | Number of Providers | Deal Percentage |
|---|---|---|---|
| 37.00 TiB | 37.00 TiB | 1 | 100.00% |
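The warning above counts the deals whose data is held by fewer than 3 distinct storage providers. Here every piece sits on exactly one provider, so the result is 100%. The sketch below shows that calculation. It assumes deals are grouped by piece CID, with one list entry per deal. The piece names in the example are hypothetical placeholders, and the checker's real implementation may differ.

```python
def pct_under_replicated(piece_providers, min_providers=3):
    """Percentage of deals whose piece is stored by fewer than min_providers SPs.

    piece_providers maps a piece CID to the list of SPs holding a deal for it
    (one entry per deal, so a repeated SP counts as an extra deal).
    """
    total_deals = sum(len(sps) for sps in piece_providers.values())
    if total_deals == 0:
        return 0.0
    under = sum(
        len(sps)
        for sps in piece_providers.values()
        if len(set(sps)) < min_providers  # distinct providers for this piece
    )
    return 100 * under / total_deals


# Hypothetical pieces, each sealed by a single provider as in the table above.
example = {"piece-a": ["f01971600"], "piece-b": ["f01992630"], "piece-c": ["f033462"]}
print(pct_under_replicated(example))  # prints 100.0
```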

The table below shows how much unique data is shared with other clients. Different applications usually own different data, so their data should not resolve to the same CID.

However, this is possible if all the clients below use the same software to prepare the exact same dataset, or if they belong to a series of LDN applications for the same dataset.

✔️ No CID sharing has been observed.
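The CID-sharing check described above comes down to finding CIDs that more than one client has stored. The sketch below shows that idea. It assumes a simple mapping from client address to piece CIDs. The client addresses and CIDs in the example are hypothetical, and the actual checker may scope or weight the comparison differently.

```python
from collections import defaultdict


def shared_cids(client_pieces):
    """Return {cid: sorted client list} for CIDs that more than one client stored."""
    owners = defaultdict(set)
    for client, cids in client_pieces.items():
        for cid in cids:
            owners[cid].add(client)
    return {cid: sorted(clients) for cid, clients in owners.items() if len(clients) > 1}


# Hypothetical clients: only "cid-x" appears under both addresses.
print(shared_cids({"f1aaa": ["cid-x", "cid-y"], "f1bbb": ["cid-x", "cid-z"]}))
```

An empty result corresponds to the "No CID sharing has been observed" line.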

[^1]: To manually trigger this report, add a comment with text checker:manualTrigger

Good morning @beegoathz

I have been checking on this application.

Can you clarify why you did not follow the rules of Fil+? It seems you stored all DataCap with one organisation/SP, located on a VPN in the USA. Besides these 3, there are 5 other SPs on your IP, on ports 6000 to 6025. See below.

Second, I cannot perform any due diligence because none of the stored data is retrievable. See below.

Until explanation is given we cannot continue with this LDN for datacap.

Thanks for your understanding.

lotus net connect f01971600
f01971600 -> {12D3KooWBNh4V7JeEvYLKvSbGeMMMFJyB3vavEyEipqNYaZh9cNS: [/ip4/128.136.157.164/tcp/8004]}
ERROR: failed to parse multiaddr "f01971600": must begin with /

lotus net connect f01992630
f01992630 -> {12D3KooWSPav6xffB6rk8KL2xuEMgtQZJKGZusfEaM8PPT7NgtMo: [/ip4/128.136.157.164/tcp/8013]}
ERROR: failed to parse multiaddr "f01992630": must begin with /

lotus net connect f033462
f033462 -> {12D3KooWMrhzqoTb6rJUYcw15qpiwKsRLEiyNCXTsWW4pLnqYmyh: [/ip4/128.136.157.164/tcp/8010]}
ERROR: failed to parse multiaddr "f033462": must begin with /

VPN data:

nmap -O 128.136.157.164
Starting Nmap 7.80 ( https://nmap.org ) at 2023-01-18 09:56 CET
Nmap scan report for 128.136.157.164
Host is up (0.11s latency).
Not shown: 988 filtered ports
PORT     STATE  SERVICE
22/tcp   open   ssh
80/tcp   open   http
113/tcp  closed ident
6000/tcp open   X11
6001/tcp open   X11:1
6002/tcp open   X11:2
6009/tcp open   X11:9
6025/tcp open   x11
8002/tcp open   teradataordbms
8008/tcp open   http
8009/tcp open   ajp13
8010/tcp open   xmpp

telnet 128.136.157.164 6001
Trying 128.136.157.164...
Connected to 128.136.157.164.
Escape character is '^]'.
/multistream/1.0.0
^CConnection closed by foreign host.

telnet 128.136.157.164 6002
Trying 128.136.157.164...
Connected to 128.136.157.164.
Escape character is '^]'.
/multistream/1.0.0
^CConnection closed by foreign host.

telnet 128.136.157.164 6009
Trying 128.136.157.164...
Connected to 128.136.157.164.
Escape character is '^]'.
/multistream/1.0.0
^CConnection closed by foreign host.

telnet 128.136.157.164 6025
Trying 128.136.157.164...
Connected to 128.136.157.164.
Escape character is '^]'.
/multistream/1.0.0
^CConnection closed by foreign host.
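The telnet sessions above identify libp2p nodes by the `/multistream/1.0.0` greeting they send on connect. The sketch below automates that probe. It is a Python stand-in for the manual telnet check, not a Lotus tool. It assumes the greeting arrives within the first read. Because multistream-select messages are length-prefixed, it searches for the header instead of comparing the whole banner. `speaks_multistream` is a hypothetical name.

```python
import socket

MULTISTREAM_HEADER = b"/multistream/1.0.0"


def speaks_multistream(host, port, timeout=3.0):
    """Connect to host:port and report whether the peer greets with the
    multistream-select header, as in the telnet sessions above."""
    try:
        with socket.create_connection((host, port), timeout=timeout) as conn:
            banner = conn.recv(64)  # assumes the greeting fits in one read
    except OSError:  # refused, filtered or timed out
        return False
    # The header is preceded by a length byte, so search rather than compare.
    return MULTISTREAM_HEADER in banner
```

Looping this over ports such as 6000 to 6025 on one IP would reproduce the "several SPs behind one address" observation without telnet.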

Retrieval testing:

ERROR: offer error: retrieval query offer errored: failed to fetch piece to retrieve from: getting pieces for cid bafykbzacecxdtl7ytilqjknysbfr3igfrcvezeyj3kb43fcg4gjx3roea5mj6: getting pieces containing block bafykbzacecxdtl7ytilqjknysbfr3igfrcvezeyj3kb43fcg4gjx3roea5mj6: failed to lookup index for mh a0e40220ae39aff89a1704a9b8904b1da0c588aa4c9309da83cd9446e1937dc5c407589f, err: datastore: key not found
ERROR: offer error: retrieval query offer errored: failed to fetch piece to retrieve from: getting pieces for cid bafykbzacecxdtl7ytilqjknysbfr3igfrcvezeyj3kb43fcg4gjx3roea5mj6: getting pieces containing block bafykbzacecxdtl7ytilqjknysbfr3igfrcvezeyj3kb43fcg4gjx3roea5mj6: failed to lookup index for mh a0e40220ae39aff89a1704a9b8904b1da0c588aa4c9309da83cd9446e1937dc5c407589f, err: datastore: key not found
ERROR: offer error: retrieval query offer errored: failed to fetch piece to retrieve from: getting pieces for cid bafykbzacecxdtl7ytilqjknysbfr3igfrcvezeyj3kb43fcg4gjx3roea5mj6: getting pieces containing block bafykbzacecxdtl7ytilqjknysbfr3igfrcvezeyj3kb43fcg4gjx3roea5mj6: failed to lookup index for mh a0e40220ae39aff89a1704a9b8904b1da0c588aa4c9309da83cd9446e1937dc5c407589f, err: datastore: key not found
ERROR: offer error: retrieval query offer errored: failed to fetch piece to retrieve from: getting pieces for cid bafykbzacecxdtl7ytilqjknysbfr3igfrcvezeyj3kb43fcg4gjx3roea5mj6: getting pieces containing block bafykbzacecxdtl7ytilqjknysbfr3igfrcvezeyj3kb43fcg4gjx3roea5mj6: failed to lookup index for mh a0e40220ae39aff89a1704a9b8904b1da0c588aa4c9309da83cd9446e1937dc5c407589f, err: datastore: key not found
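The `mh` value in these errors is the multihash inside the requested CID `bafykbzacecxdtl7ytilqjknysbfr3igfrcvezeyj3kb43fcg4gjx3roea5mj6`. "key not found" therefore means the provider's index has no entry for that block. The standard-library sketch below decodes the CID and shows the match. It handles only base32 ("b"-prefixed) CIDv1 strings and relies on the multicodec table values 0x70 (dag-pb) and 0xb220 (blake2b-256). The helper names are hypothetical.

```python
import base64


def decode_varint(buf, pos=0):
    """Decode an unsigned LEB128 varint from buf at pos; return (value, new_pos)."""
    value, shift = 0, 0
    while True:
        byte = buf[pos]
        pos += 1
        value |= (byte & 0x7F) << shift
        if not byte & 0x80:  # high bit clear: last byte of the varint
            return value, pos
        shift += 7


def cid_v1_multihash(cid):
    """Split a base32 ('b'-prefixed) CIDv1 string into (codec, multihash bytes)."""
    if not cid.startswith("b"):
        raise ValueError("only the base32 multibase prefix 'b' is handled here")
    body = cid[1:].upper()
    body += "=" * (-len(body) % 8)  # restore RFC 4648 padding
    raw = base64.b32decode(body)
    version, pos = decode_varint(raw)
    if version != 1:
        raise ValueError("not a CIDv1")
    codec, pos = decode_varint(raw, pos)
    return codec, raw[pos:]


def parse_multihash(mh):
    """Return (hash function code, digest) and check the declared digest length."""
    code, pos = decode_varint(mh)
    length, pos = decode_varint(mh, pos)
    digest = mh[pos:]
    if len(digest) != length:
        raise ValueError("digest length mismatch")
    return code, digest


codec, mh = cid_v1_multihash("bafykbzacecxdtl7ytilqjknysbfr3igfrcvezeyj3kb43fcg4gjx3roea5mj6")
code, digest = parse_multihash(mh)
print(hex(codec), hex(code), len(digest))  # prints: 0x70 0xb220 32
print(mh.hex())  # the same hex string as the "mh" in the errors above
```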

Flagging link to #1236

Same SPs, same behaviour.

Hi @cryptowhizzard, we are currently storing the first copy; for subsequent copies we will find more SPs in different regions. We asked the SP to look into the issues you raised, and they replied: your usage is wrong. Instead of `lotus net connect f01971600`, use `lotus net connect /ip4/128.136.157.164/tcp/8004/p2p/12D3KooWBNh4V7JeEvYLKvSbGeMMMFJyB3vavEyEipqNYaZh9cNS`.
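The correction above appends `/p2p/<peer ID>` to the address that `lotus net connect <miner>` already printed before failing. The sketch below does this from the pasted output. It assumes the `<miner> -> {<peer ID>: [<addr> ...]}` shape seen in the earlier output, with addresses separated by spaces when there are several. `dialable_multiaddrs` is a hypothetical helper, not part of Lotus.

```python
import re

# Matches lines like "f01971600 -> {12D3KooW...: [/ip4/.../tcp/8004]}".
RESOLVED = re.compile(r"(?P<miner>f0\d+) -> \{(?P<peer>\w+): \[(?P<addrs>[^\]]*)\]\}")


def dialable_multiaddrs(line):
    """Turn one resolved-miner line into full /p2p multiaddrs for `lotus net connect`."""
    m = RESOLVED.search(line)
    if m is None:
        raise ValueError("expected '<miner> -> {<peer>: [<addrs>]}'")
    peer = m.group("peer")
    return [f"{addr}/p2p/{peer}" for addr in m.group("addrs").split()]


line = ("f01971600 -> {12D3KooWBNh4V7JeEvYLKvSbGeMMMFJyB3vavEyEipqNYaZh9cNS: "
        "[/ip4/128.136.157.164/tcp/8004]}")
print(dialable_multiaddrs(line)[0])
```

For the first SP this yields the same multiaddr the SP suggested above.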

I checked the data list and I don't have this data. Where did you get this CID? bafykbzacecxdtl7ytilqjknysbfr3igfrcvezeyj3kb43fcg4gjx3roea5mj6

checker:manualTrigger

✔️ Storage provider distribution looks healthy.

⚠️ 100.00% of deals are for data replicated across less than 3 storage providers.

✔️ No CID sharing has been observed.

[^1]: To manually trigger this report, add a comment with text checker:manualTrigger

[^2]: Deals from those addresses are combined into this report as they are specified with checker:manualTrigger

[^3]: To manually trigger this report with deals from other related addresses, add a comment with text checker:manualTrigger <other_address_1> <other_address_2> ...

Click here to view the full report.

100.00% of deals are for data replicated across less than 3 storage providers.

@beegoathz Can you explain why this happened?

Hi @Normalnoise, this was the first round and the DataCap amount was only 50TiB. In later rounds we will pay attention to spreading the data across SPs.

@beegoathz If you can give me an allocation plan for the next round, I will support it, and I will also keep an eye on how the dataset is allocated.
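One way to meet the request above is an allocation plan that places every piece with several providers in different regions. That way no piece stays below the 3-provider threshold the checker flags. The round-robin sketch below illustrates such a plan. The SP IDs and region labels are hypothetical placeholders, and this is not the applicant's plan or a Fil+ requirement beyond the threshold quoted in the thread.

```python
from itertools import cycle


def plan_replicas(pieces, providers, replicas=3):
    """Assign each piece to `replicas` providers, each in a different region.

    providers is a list of (sp_id, region) tuples. Raises ValueError when there
    are fewer distinct regions than requested replicas.
    """
    by_region = {}
    for sp, region in providers:
        by_region.setdefault(region, []).append(sp)
    if len(by_region) < replicas:
        raise ValueError("need at least as many regions as replicas")
    region_order = list(by_region)
    sp_cycles = {r: cycle(sps) for r, sps in by_region.items()}
    plan = {}
    for i, piece in enumerate(pieces):
        # Rotate the starting region so load spreads evenly across regions.
        regions = [region_order[(i + k) % len(region_order)] for k in range(replicas)]
        plan[piece] = [next(sp_cycles[r]) for r in regions]
    return plan


sps = [("sp-a", "us"), ("sp-b", "eu"), ("sp-c", "asia"), ("sp-d", "us")]
print(plan_replicas(["piece-1", "piece-2"], sps))
```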

This application has not seen any responses in the last 10 days. This issue will be marked with Stale label and will be closed in 4 days. Comment if you want to keep this application open.

This application has not seen any responses in the last 14 days, so for now it is being closed. Please feel free to contact the Fil+ Gov team to re-open the application if it is still being processed. Thank you!

Hey @simonkim0515, our data is now ready and we will start sealing. Please reopen the application for me, thank you!

I am against re-opening this application.

I downloaded a deal from this LDN and the applicant is not storing the data he told us he was going to store.

Please see here:

Hello, at a very early stage we didn't know how to store data on Filecoin. We looked for relevant examples and tools but didn't find a suitable one, so we wrote our own tools based on go-car and kept the piece CID data in our database. At that time we combined small files into one large file, then split it into smaller files and sent them to SPs to achieve data dispersion and high data availability. Our script used the same name when preparing and packaging the files, which is why you see the current results.
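The preparation step described above concatenates small files into one stream, then splits it into fixed-size parts for different SPs. The sketch below illustrates it. It is a hypothetical illustration, not the applicant's go-car tooling. It gives every part a distinct name built from its index and a SHA-256 prefix, and keeps a manifest of where each original file lands. The thread says the applicant's script reused the same name at this stage. `pack_and_split` and the naming scheme are assumptions.

```python
import hashlib


def pack_and_split(files, part_size):
    """Concatenate (name, bytes) pairs and split the stream into part_size chunks.

    Returns (parts, manifest): parts is a list of (part_name, chunk) with a
    unique, index-based name per part; manifest maps each original file name
    to its (start, end) byte range in the concatenated stream.
    """
    stream = bytearray()
    manifest = {}
    for name, data in files:
        manifest[name] = (len(stream), len(stream) + len(data))
        stream.extend(data)
    parts = []
    for idx, start in enumerate(range(0, len(stream), part_size)):
        chunk = bytes(stream[start:start + part_size])
        digest = hashlib.sha256(chunk).hexdigest()[:12]
        parts.append((f"part-{idx:05d}-{digest}.bin", chunk))
    return parts, manifest


parts, manifest = pack_and_split([("a.txt", b"hello"), ("b.txt", b"world!")], 4)
print([name for name, _ in parts])
print(manifest)  # prints {'a.txt': (0, 5), 'b.txt': (5, 11)}
```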

So what is the point of distributing this data to other SPs and reopening this application?

Close it, start a new one and do it right?

Hi @cryptowhizzard, it's just that the filenames did not turn out as expected, so why doesn't it make sense? As newcomers to Filecoin, we think it is valuable to try things out and gain experience. As that experience accumulates, we learn more and more about the ecosystem.

FIL+ is about storing valuable data. This data you stored is not the data you indicated you were going to store. Close this one, open a new one and do it right please.

It was explained above, thanks.

checker:manualTrigger

✔️ Storage provider distribution looks healthy.

⚠️ 100.00% of deals are for data replicated across less than 3 storage providers.

✔️ No CID sharing has been observed.

[^1]: To manually trigger this report, add a comment with text checker:manualTrigger

[^2]: Deals from those addresses are combined into this report as they are specified with checker:manualTrigger

[^3]: To manually trigger this report with deals from other related addresses, add a comment with text checker:manualTrigger <other_address_1> <other_address_2> ...

Click here to view the CID Checker report. Click here to view the Retrieval Dashboard. Click here to view the Retrieval report.

This application has not seen any responses in the last 10 days. This issue will be marked with Stale label and will be closed in 4 days. Comment if you want to keep this application open.

-- Commented by Stale Bot.

checker:manualTrigger

✔️ Storage provider distribution looks healthy.

⚠️ 100.00% of deals are for data replicated across less than 3 storage providers.

✔️ No CID sharing has been observed.

[^1]: To manually trigger this report, add a comment with text checker:manualTrigger

[^2]: Deals from those addresses are combined into this report as they are specified with checker:manualTrigger

[^3]: To manually trigger this report with deals from other related addresses, add a comment with text checker:manualTrigger <other_address_1> <other_address_2> ...

Click here to view the CID Checker report. Click here to view the Retrieval Dashboard. Click here to view the Retrieval report.

This application has not seen any responses in the last 10 days. This issue will be marked with Stale label and will be closed in 4 days. Comment if you want to keep this application open.

-- Commented by Stale Bot.

This application has not seen any responses in the last 14 days, so for now it is being closed. Please feel free to contact the Fil+ Gov team to re-open the application if it is still being processed. Thank you!

-- Commented by Stale Bot.

Large Dataset Notary Application

To apply for DataCap to onboard your dataset to Filecoin, please fill out the following.

Core Information

Please respond to the questions below by replacing the text saying "Please answer here". Include as much detail as you can in your answer.

Project details

Share a brief history of your project and organization.

What is the primary source of funding for this project?

What other projects/ecosystem stakeholders is this project associated with?

Use-case details

Describe the data being stored onto Filecoin

Where was the data in this dataset sourced from?

Can you share a sample of the data? A link to a file, an image, a table, etc., are good ways to do this.

Confirm that this is a public dataset that can be retrieved by anyone on the Network (i.e., no specific permissions or access rights are required to view the data).

What is the expected retrieval frequency for this data?

For how long do you plan to keep this dataset stored on Filecoin?

DataCap allocation plan

In which geographies (countries, regions) do you plan on making storage deals?

How will you be distributing your data to storage providers? Is there an offline data transfer process?

How do you plan on choosing the storage providers with whom you will be making deals? This should include a plan to ensure the data is retrievable in the future both by you and others.

How will you be distributing deals across storage providers?

Do you have the resources/funding to start making deals as soon as you receive DataCap? What support from the community would help you onboard onto Filecoin?