My own inclination is towards the non-blocking API with a bounded overflow list. A blocking API seems antithetical to the goal of reducing contention, and it may lead to performance anomalies if a goroutine or OS thread is descheduled while it has a shard checked out. A non-blocking API with a required combiner may prevent certain use cases (e.g., large structures, or uses that never read the whole sharded value). The bounded overflow list also devolves to the blocking API if the bound is 0.
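To make the bounded-overflow idea concrete, here is a minimal sketch of a single shard. It assumes a design the proposal does not spell out: each shard counts both stored and checked-out values and caps the total at `1+bound`, so `Get` blocks only once that cap is reached and `Put` never blocks. All names (`boundedShard`, `countAll`) are hypothetical. Because Go exposes no current-P ID, the caller picks the shard explicitly and must `Put` a value back to the shard it came from.

```go
package main

import (
	"fmt"
	"sync"
)

// boundedShard is one shard of a hypothetical sharded value with a bounded
// overflow list. At most 1+bound values belong to the shard at once,
// counting both stored values and values checked out by Get.
type boundedShard struct {
	mu     sync.Mutex
	cond   *sync.Cond
	stored []interface{} // the slot plus the overflow list
	out    int           // values currently checked out
	bound  int           // maximum overflow length
}

func newBoundedShard(bound int) *boundedShard {
	s := &boundedShard{bound: bound}
	s.cond = sync.NewCond(&s.mu)
	return s
}

// Get returns a stored value if there is one. Otherwise it returns nil (so
// the caller can create a value) if the shard is below its cap, or blocks
// until a Put if the shard is at its cap. With bound 0 this acts as a lock.
func (s *boundedShard) Get() interface{} {
	s.mu.Lock()
	defer s.mu.Unlock()
	for len(s.stored) == 0 && s.out >= 1+s.bound {
		s.cond.Wait()
	}
	s.out++
	if n := len(s.stored); n > 0 {
		v := s.stored[n-1]
		s.stored = s.stored[:n-1]
		return v
	}
	return nil
}

// Put returns a value obtained from Get on this shard. It never blocks,
// because Get already reserved room for the value.
func (s *boundedShard) Put(v interface{}) {
	s.mu.Lock()
	s.out--
	s.stored = append(s.stored, v)
	s.mu.Unlock()
	s.cond.Broadcast() // wake blocked Gets and any waiting Do
}

// Do waits until nothing is checked out of this shard, then visits every
// stored value (the per-shard, "inconsistent" flavor of Do).
func (s *boundedShard) Do(f func(interface{})) {
	s.mu.Lock()
	defer s.mu.Unlock()
	for s.out > 0 {
		s.cond.Wait()
	}
	for _, v := range s.stored {
		f(v)
	}
}

// countAll runs workers goroutines that each increment a counter iters
// times on shard w%nShards, then sums all partial counts with Do.
func countAll(nShards, bound, workers, iters int) int64 {
	shards := make([]*boundedShard, nShards)
	for i := range shards {
		shards[i] = newBoundedShard(bound)
	}
	var wg sync.WaitGroup
	for w := 0; w < workers; w++ {
		wg.Add(1)
		go func(s *boundedShard) {
			defer wg.Done()
			for i := 0; i < iters; i++ {
				n, _ := s.Get().(*int64)
				if n == nil {
					n = new(int64) // shard below its cap: start a new partial count
				}
				*n++
				s.Put(n)
			}
		}(shards[w%nShards])
	}
	wg.Wait()
	var sum int64
	for _, s := range shards {
		s.Do(func(v interface{}) { sum += *v.(*int64) })
	}
	return sum
}

func main() {
	fmt.Println(countAll(4, 0, 8, 1000)) // lock-like shards; prints 8000
	fmt.Println(countAll(4, 2, 8, 1000)) // up to 3 values per shard; prints 8000
}
```

With `bound` set to 0, a second `Get` on a shard waits until the first value is `Put` back, which is the lock-like behavior; larger bounds let that many extra values accumulate before anyone waits. Note that this `Do` can be delayed indefinitely if callers keep values checked out.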

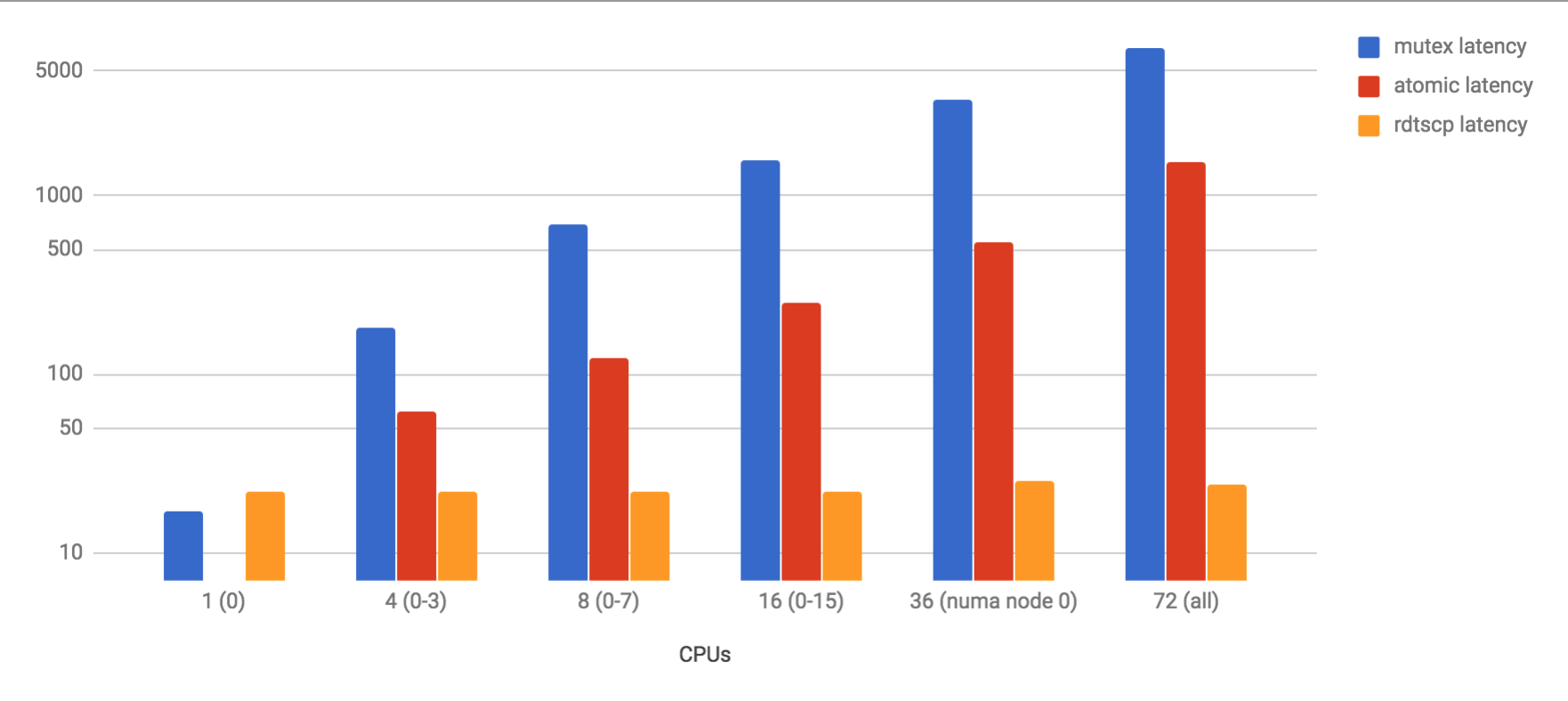

Per-CPU sharded values are a useful and common way to reduce contention on shared write-mostly values. However, this technique is currently difficult or impossible to use in Go (though there have been attempts, such as @jonhoo's https://github.com/jonhoo/drwmutex and @bcmills' https://go-review.googlesource.com/#/c/35676/).

We propose providing an API for creating and working with sharded values. Sharding would be encapsulated in a type, say `sync.Sharded`, that would have `Get() interface{}`, `Put(interface{})`, and `Do(func(interface{}))` methods. `Get` and `Put` would always have to be paired to make `Do` possible. (This is actually the same API that was proposed in https://github.com/golang/go/issues/8281#issuecomment-66096418 and rejected, but perhaps we have a better understanding of the issues now.) This idea came out of off-and-on discussions between at least @rsc, @hyangah, @RLH, @bcmills, @Sajmani, and myself.

This is a counter-proposal to various proposals to expose the current thread/P ID as a way to implement sharded values (#8281, #18590). These have been turned down as exposing low-level implementation details, tying Go to an API that may be inappropriate or difficult to support in the future, being difficult to use correctly (since the ID may change at any time), being difficult to specify, and being broadly susceptible to abuse.
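The following sketch shows how a client might use the proposed method set to maintain a counter, pairing every `Get` with a `Put` so that `Do` can see every partial count. `sync.Sharded` does not exist, so the interface name `ShardedValue` and the `unsharded` type are hypothetical stand-ins. `unsharded` keeps a single mutex-protected list and exists only to make the example runnable; it does nothing to reduce contention.

```go
package main

import (
	"fmt"
	"sync"
)

// ShardedValue mirrors the method set proposed above (hypothetical name).
type ShardedValue interface {
	Get() interface{}
	Put(interface{})
	Do(func(interface{}))
}

// unsharded is a deliberately naive stand-in: one mutex and one list.
// A real implementation would keep a slot per P.
type unsharded struct {
	mu   sync.Mutex
	vals []interface{}
}

// Get removes and returns some stored value, or nil if none is stored.
func (u *unsharded) Get() interface{} {
	u.mu.Lock()
	defer u.mu.Unlock()
	if n := len(u.vals); n > 0 {
		v := u.vals[n-1]
		u.vals = u.vals[:n-1]
		return v
	}
	return nil
}

// Put stores a value so that later Gets and Do can see it.
func (u *unsharded) Put(v interface{}) {
	u.mu.Lock()
	u.vals = append(u.vals, v)
	u.mu.Unlock()
}

// Do visits every stored value.
func (u *unsharded) Do(f func(interface{})) {
	u.mu.Lock()
	defer u.mu.Unlock()
	for _, v := range u.vals {
		f(v)
	}
}

// inc adds one to a sharded counter, pairing Get with Put.
func inc(s ShardedValue) {
	n, _ := s.Get().(*int64)
	if n == nil {
		n = new(int64) // empty: start a fresh partial count
	}
	*n++
	s.Put(n)
}

// total sums the partial counts that are currently stored.
func total(s ShardedValue) int64 {
	var sum int64
	s.Do(func(v interface{}) { sum += *v.(*int64) })
	return sum
}

func main() {
	var s unsharded
	var wg sync.WaitGroup
	for g := 0; g < 8; g++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for i := 0; i < 1000; i++ {
				inc(&s)
			}
		}()
	}
	wg.Wait()
	fmt.Println(total(&s)) // prints 8000
}
```

A value that is taken with `Get` but never `Put` back would simply be missing from `total`, which is why the pairing is required.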

There are several dimensions to the design of such an API.

1. `Get` and `Put` can be blocking or non-blocking:
   * With non-blocking `Get` and `Put`, `sync.Sharded` behaves like a collection. `Get` returns immediately with the current shard's value or nil if the shard is empty. `Put` stores a value for the current shard if the shard's slot is empty (which may be different from where `Get` was called, but would often be the same). If the shard's slot is not empty, `Put` could either put to some overflow list (in which case the state is potentially unbounded), or run some user-provided combiner (which would bound the state).
   * With blocking `Get` and `Put`, `sync.Sharded` behaves more like a lock. `Get` returns and locks the current shard's value, blocking further `Get`s from that shard. `Put` sets the shard's value and unlocks it. In this case, `Put` has to know which shard the value came from, so `Get` can either return a `put` function (though that would require allocating a closure) or some opaque value that must be passed to `Put` that internally identifies the shard.
   * It would also be possible to combine these behaviors by using an overflow list with a bounded size. Specifying 0 would yield lock-like behavior, while specifying a larger value would give some slack where `Get` and `Put` remain non-blocking without allowing the state to become completely unbounded.
2. `Do` could be consistent or inconsistent:
   * If it's consistent, then it passes the callback a snapshot at a single instant. I can think of two ways to do this: block until all outstanding values are `Put` and also block further `Get`s until the `Do` can complete; or use the "current" value of each shard even if it's checked out. The latter requires that shard values be immutable, but it makes `Do` non-blocking.
   * If it's inconsistent, then it can wait on each shard independently. This is faster and doesn't affect `Get` and `Put`, but the caller can only get a rough idea of the combined value. This is fine for uses like approximate statistics counters.

   It may be that we can't make this decision at the API level and have to provide both forms of `Do`.

I think this is a good base API, but I can think of a few reasonable extensions:

1. Provide `Peek` and `CompareAndSwap`. If a user of the API can be written in terms of these, then `Do` would always be able to get an immediate consistent snapshot.
2. Provide a `Value` operation that uses the user-provided combiner (if we go down that API route) to get the combined value of the `sync.Sharded`.
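As an illustration of these two extensions, the sketch below writes a counter purely in terms of `Peek` and `CompareAndSwap`, so no value is ever checked out, and implements `Value` by folding the shards together with a user-provided combiner. The type and function names are hypothetical. Shard values are treated as immutable `int64`s held in `sync/atomic`'s `Value` type (its `CompareAndSwap` method requires Go 1.17 or later), and the caller again chooses the shard index explicitly.

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
)

// casSharded is a hypothetical sketch of the Peek/CompareAndSwap and Value
// extensions. Each shard holds an immutable value in an atomic.Value.
type casSharded struct {
	shards  []atomic.Value
	combine func(a, b interface{}) interface{} // user-provided combiner
}

func newCASSharded(n int, combine func(a, b interface{}) interface{}) *casSharded {
	return &casSharded{shards: make([]atomic.Value, n), combine: combine}
}

// Peek returns the shard's current value, or nil if it was never set,
// without checking the value out.
func (s *casSharded) Peek(shard int) interface{} {
	return s.shards[shard].Load()
}

// CompareAndSwap installs new only if the shard still holds old.
func (s *casSharded) CompareAndSwap(shard int, old, new interface{}) bool {
	return s.shards[shard].CompareAndSwap(old, new)
}

// Value folds the current value of every non-empty shard with the
// combiner. It returns nil if every shard is empty.
func (s *casSharded) Value() interface{} {
	var acc interface{}
	for i := range s.shards {
		switch v := s.Peek(i); {
		case v == nil:
			// empty shard: nothing to combine
		case acc == nil:
			acc = v
		default:
			acc = s.combine(acc, v)
		}
	}
	return acc
}

// sumInt64 is the combiner for an int64 counter.
func sumInt64(a, b interface{}) interface{} { return a.(int64) + b.(int64) }

// add increments one shard's counter with a Peek/CompareAndSwap retry loop.
func add(s *casSharded, shard int, delta int64) {
	for {
		old := s.Peek(shard)
		cur, _ := old.(int64) // zero if the shard is still empty
		if s.CompareAndSwap(shard, old, cur+delta) {
			return
		}
	}
}

func main() {
	s := newCASSharded(4, sumInt64)
	var wg sync.WaitGroup
	for g := 0; g < 8; g++ {
		wg.Add(1)
		go func(shard int) {
			defer wg.Done()
			for i := 0; i < 1000; i++ {
				add(s, shard, 1)
			}
		}(g % 4)
	}
	wg.Wait()
	fmt.Println(s.Value()) // prints 8000
}
```

This `Value` reads the shards one after another, so on its own it does not provide the single-instant snapshot discussed above; it corresponds to the cheaper, approximate flavor.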