`pip install --upgrade scrapydweb`

luzihang123 closed this issue 5 years ago.

> pip install --upgrade scrapydweb

Perfect~

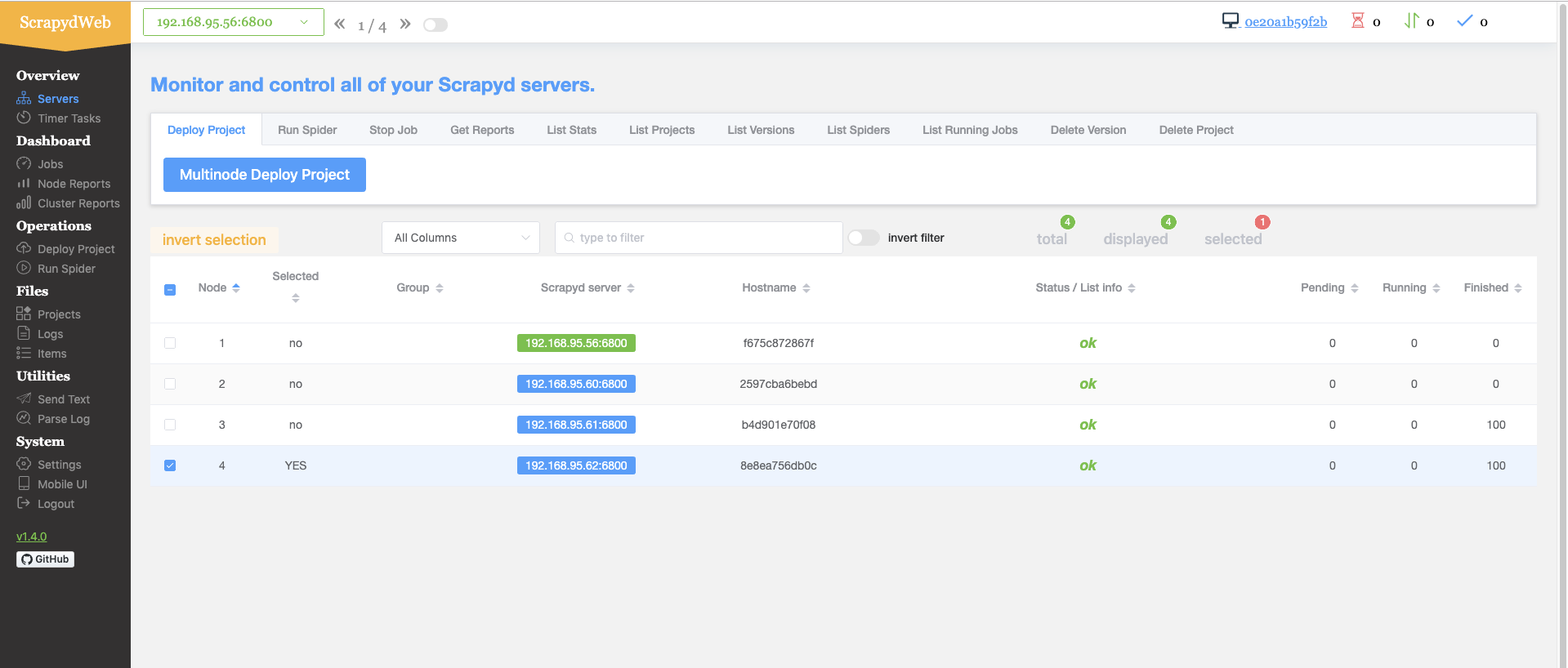

My Docker Hub image of scrapydweb, updated to v1.4: https://cloud.docker.com/u/chinaclark1203/repository/docker/chinaclark1203/scrapydweb

Dockerfile:

entrypoint.sh

Scrapyd.conf

Docker log:

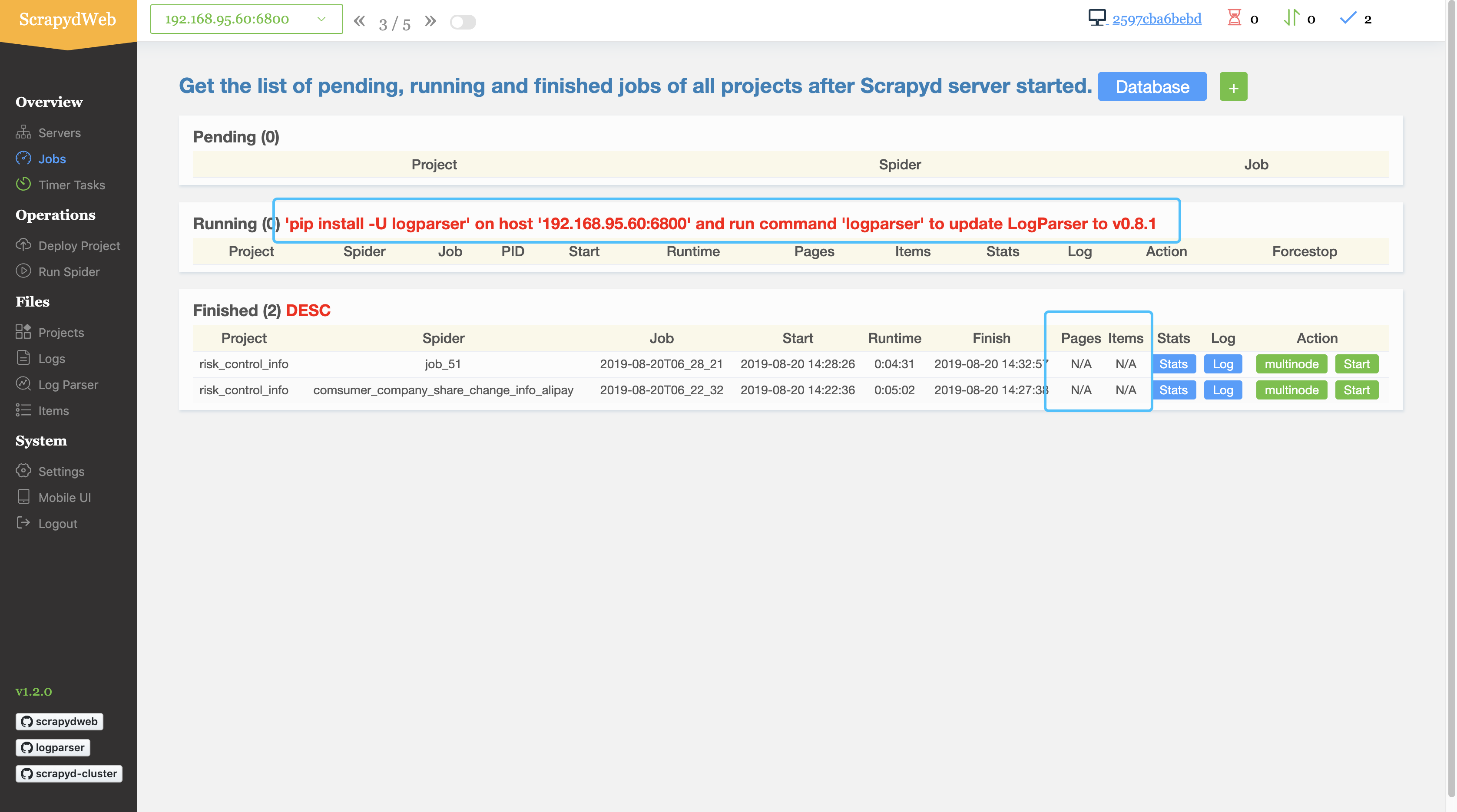

My question: after the update, ScrapydWeb still shows the message “'pip install -U logparser' on host '192.168.95.60:6800' and run command 'logparser' to update LogParser to v0.8.1”. Why?
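The message names the host 192.168.95.60:6800, so the upgrade presumably has to happen in the environment that runs Scrapyd and LogParser (for example the Scrapyd container), not only in the ScrapydWeb image. Below is a minimal sketch for checking whether the installed `logparser` meets the v0.8.1 named in the message. It assumes it is run with the same Python interpreter that `logparser` was installed into. The helper names `parse`, `is_outdated` and `check` are hypothetical; only `importlib.metadata` (Python 3.8+) is a real standard-library API. Version parsing is deliberately simplified to numeric components so the sketch has no dependency on `packaging`.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Tuple

REQUIRED = "0.8.1"  # version named in the ScrapydWeb message


def parse(v: str) -> Tuple[int, ...]:
    """Keep only the leading numeric components, e.g. "0.8.1" -> (0, 8, 1).

    Simplified on purpose: "1.0.0rc1" -> (1, 0, 0); pre-release tags are ignored.
    """
    parts = []
    for piece in v.split("."):
        digits = ""
        for ch in piece:
            if ch.isdigit():
                digits += ch
            else:
                break
        if not digits:
            break
        parts.append(int(digits))
    return tuple(parts)


def is_outdated(installed: str, required: str = REQUIRED) -> bool:
    """True if the installed version is older than the required one."""
    return parse(installed) < parse(required)


def check() -> str:
    """Report the logparser version installed for this interpreter."""
    try:
        installed = version("logparser")
    except PackageNotFoundError:
        return "logparser is not installed in this environment"
    if is_outdated(installed):
        return (f"logparser {installed} is older than {REQUIRED}; "
                "run 'pip install -U logparser'")
    return f"logparser {installed} is up to date"


if __name__ == "__main__":
    print(check())
```

If the check reports an old version, upgrade it and then restart the `logparser` process: a process that is already running keeps using the code it was started with, so upgrading the package alone does not change what it reports.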