-

### Your current environment

The output of `python collect_env.py`

```text

(base) root@DESKTOP-PEPA2G9:~# python collect_env.py

Collecting environment information...

/root/miniconda3/lib/py…

-

### Your current environment

### System Specifications:

- CPU: Intel(R) Xeon(R) Platinum 8480+

- GPU: NVIDIA H200 x 8

- vLLM version: 0.6.2 (latest)

### Model Input Dumps

N/A

### 🐛 Descri…

-

Cohere's new Command-R-Plus model reportedly features a 128k context window. However, testing with progressively longer prompts reveals it begins producing nonsensical output (e.g., "\\...") after 819…

-

### Your current environment

The output of `python collect_env.py`

```text

PyTorch version: 2.4.0+cu121

Is debug build: False

CUDA used to build PyTorch: 12.1

ROCM used to build PyTorch: N/A…

-

### Your current environment

The output of `python collect_env.py`

```text

Collecting environment information...

PyTorch version: 2.4.0+cu121

Is debug build: False

CUDA used to build PyTorc…

-

### Your current environment

I'm trying to run `meta-llama/Llama-3.2-11B-Vision-Instruct` using vLLM docker:

**GPU Server specifications:**

- GPU Count: 4

- GPU Type: A100 - 80GB

**vLLM Doc…

-

### Your current environment

The output of `python collect_env.py`

```text

Your output of `python collect_env.py` here

```

### 🐛 Describe the bug

The command below does not work:

```

…

-

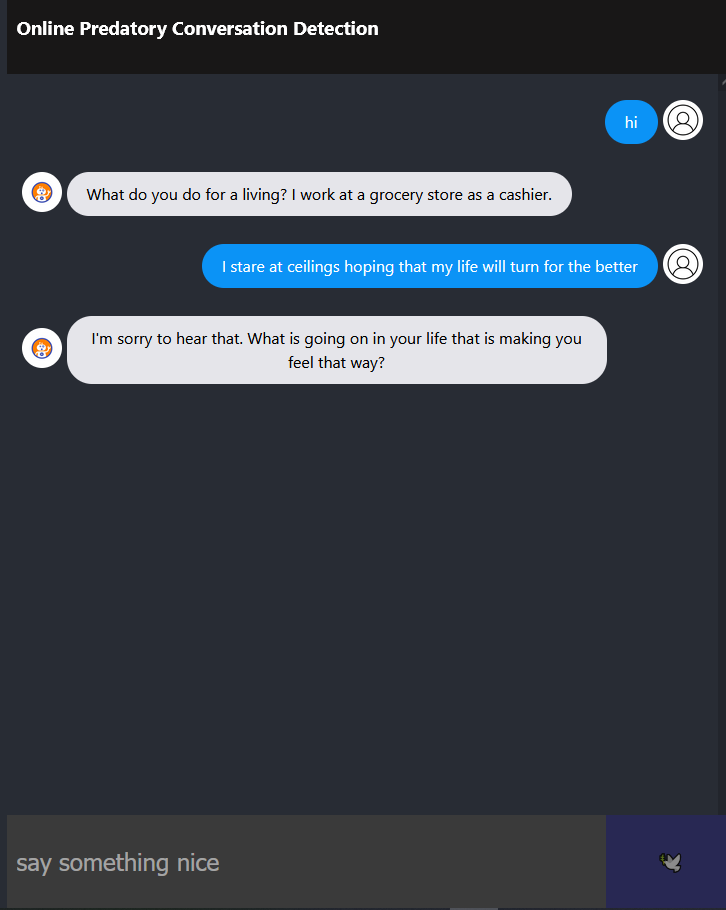

Current preview:

frontend: react.js

backend/rest-api: flask (might change to fastap…

-

### Your current environment

The output of `python collect_env.py`

```text

PyTorch version: 2.4.0+cu121

Is debug build: False

CUDA used to build PyTorch: 12.1

ROCM used to build PyTorch: N/A…