-

In espnet2_tts_demo.ipynb google colab and in my local notebook shows strange problem.

When I input the following sentence,

"この谿谷の、最も深いところには、木曽福島の関所も、隠れていた。"

last character is missing, no sound.

…

ycat3 updated

4 years ago

-

Dears,

anyone had done this?

from MelGan Generator h5 to tflite.

thanks

-

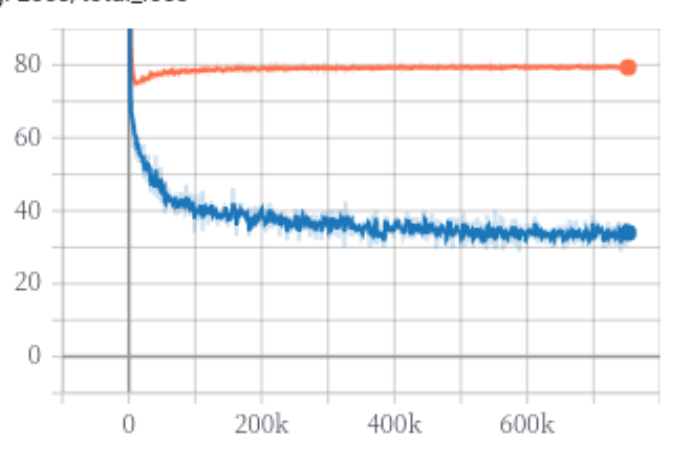

When I train model FastPitch from NVIDIA source code, I have the images like above…

-

seem my fastspeech2 implementation can't handle long sentence in some dataset such as KSS. FOr Ljspeech and other dataset from other person report that it's still fine. I'm thinking about the maximum …

-

Hi @machineko @dathudeptrai @ZDisket I have two questions.

Q1: I cleaned the text in ljspeech using `english_cleaners`, but when I run:

```python

python examples/mfa_extraction/run_mfa.py \

-…

-

Hi~

I want to check voice by combining your fastspeech, fastspeech2 and nvidia-waveglow [waveglow](https://github.com/NVIDIA/waveglow)

When training waveglow, if I train with min ~ max (80~7…

-

Hello all! First of all thanks for the repo, the work is incredible! 🥇

I am trying to train FastSpeech2 on LJSpeech, but I am finding this blocking error when extracting the durations using MFA. W…

-

I just found that `tf.keras.layers.experimental.SyncBatchNormalization` in multi-gpu can yield `nan` loss sometimes. There are some issue already in github (https://github.com/tensorflow/tensorflow/is…

-

Hello, thank you for your code.

I tried to test the models with Python, but the generated wav file is wrong.

Can you help me to check my code?

thank you!

```

import numpy as np

import soundfi…

-

Hi @monatis.

Thanks for your efforts on training models based on my free german dataset.

This is not an issue, more like an information on what i'm planning to do next on my dataset.

In mid janua…