-

Hi everyone,

I implemented model-agnostic meta-learning (MAML) for dialogue state tracking (DST) based on trade in this [repo](https://github.com/ha-lins/MAML-DST). The implementation is similar to…

-

-

### What would you like added/changed?

It would be great if extensions had access to an established resource management API. What do I mean by that? Take Bukkit/Spigot/Paper for example and how it ha…

-

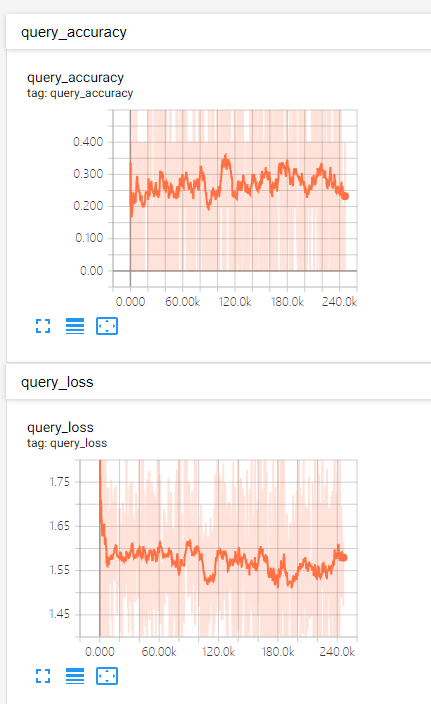

数据集就是Omniglot,直接运行代码。

与readme中的图很不一样。

-

比如,5-way-5shot few shot在测试阶段不是会去5*5的support data进行训练微调然后在query data上进行测试嘛,我看你的代码中在test阶段好像没有更新参数的过程?是我哪里理解错了嘛?

-

Hi,

I use `MAML.adapt` to update my cloned learner with first order approximation, and find the training speed decreases with the number of training steps in each iteration.

My code is as follow:

…

-

Hi,

I'm trying to train MTP models with the previous example data and notebook from mlearn package (current MAML seems not having that notebook), but the training process fails with configuration fil…

-

Thanks for the excellent hyper-grad implementation! I read the iMAML example, but in MAML, the whole network is the meta-model, and in the feature-head setting, we have a common feature extractor as t…

-

Hey! Thank you very much for the code base!

I am having troubles reproducing the results in the paper / setting the hps for the cifar and imagenet datasets.

Could you provide the calls to do so? A…

-

In the code, when training Per-FedAvg, there are two steps, and each step sample a batch of data and perform parameter update. But in the MAML framework, I think the first step is to obtain a fast wei…