Hi @junyuancat1

I'd advise using one of the ACL examples as the basis for your test. A good candidate would be: https://github.com/ARM-software/ComputeLibrary/blob/main/examples/cl_sgemm.cpp

In ACL, the computation for CL operators and functions is performed on the GPU, so you should not see high CPU usage.

Without the full source code of your test, it's difficult to speculate about why you see this behavior on some boards. I would suspect that the software configuration differs between these boards (Mali driver, power management, frequencies, etc.).
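One way to check the configuration suspicion is to dump the GPU devfreq and CPU cpufreq settings on a board that behaves well and on one that doesn't, then compare them. Below is a minimal sketch that assumes the standard Linux devfreq/cpufreq sysfs attributes. The GPU node path `/sys/class/devfreq/ff9a0000.gpu` is an assumption for RK3399 and may be named differently on your kernel (list `/sys/class/devfreq` to find it). `read_attr`, `snapshot`, `differing_keys` and `print_freq_config` are hypothetical helpers, not part of ACL.

```cpp
#include <cassert>
#include <cctype>
#include <filesystem>
#include <fstream>
#include <iostream>
#include <map>
#include <optional>
#include <string>
#include <vector>

namespace fs = std::filesystem;
using Snapshot = std::map<std::string, std::string>;

// Read the first line of a sysfs attribute; std::nullopt if the file cannot be opened.
std::optional<std::string> read_attr(const fs::path &path)
{
    std::ifstream in(path);
    if (!in)
    {
        return std::nullopt;
    }
    std::string value;
    std::getline(in, value);
    while (!value.empty() && std::isspace(static_cast<unsigned char>(value.back())))
    {
        value.pop_back(); // drop trailing whitespace
    }
    return value;
}

// Collect the given attributes from one sysfs directory; missing ones are marked "<unavailable>".
Snapshot snapshot(const fs::path &dir, const std::vector<std::string> &attrs)
{
    Snapshot out;
    for (const auto &name : attrs)
    {
        out[name] = read_attr(dir / name).value_or("<unavailable>");
    }
    return out;
}

// Names of attributes whose values differ between two snapshots (or exist in only one).
std::vector<std::string> differing_keys(const Snapshot &a, const Snapshot &b)
{
    std::vector<std::string> diff;
    for (const auto &[key, value] : a)
    {
        const auto it = b.find(key);
        if (it == b.end() || it->second != value)
        {
            diff.push_back(key);
        }
    }
    for (const auto &entry : b)
    {
        if (a.count(entry.first) == 0)
        {
            diff.push_back(entry.first);
        }
    }
    return diff;
}

// Print the GPU devfreq and CPU0 cpufreq settings of the current board.
void print_freq_config()
{
    // ASSUMPTION: RK3399 Mali devfreq node name; it may differ on your kernel.
    const fs::path gpu_devfreq = "/sys/class/devfreq/ff9a0000.gpu";
    const fs::path cpu0_freq   = "/sys/devices/system/cpu/cpu0/cpufreq";
    for (const auto &[k, v] : snapshot(gpu_devfreq, {"governor", "cur_freq", "min_freq", "max_freq", "available_frequencies"}))
    {
        std::cout << "gpu." << k << " = " << v << '\n';
    }
    for (const auto &[k, v] : snapshot(cpu0_freq, {"scaling_governor", "scaling_cur_freq", "scaling_min_freq", "scaling_max_freq"}))
    {
        std::cout << "cpu0." << k << " = " << v << '\n';
    }
}
```

Running `print_freq_config()` on a good and a bad board and comparing the output (or the two `snapshot` maps via `differing_keys`) would show whether, for example, the GPU governor or its maximum frequency differs.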

Hope this helps.

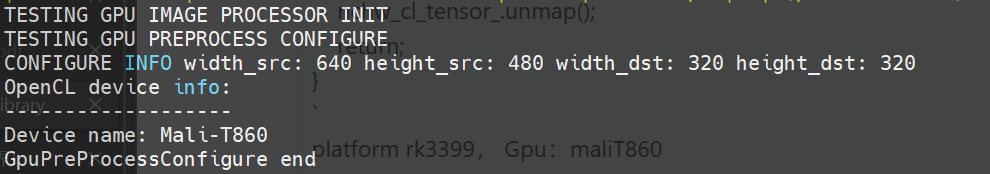

```cpp
// Function one
void ImageProcess::GpuPreProcessConfigure(const uint32_t width_src, const uint32_t height_src, const uint32_t width_dst, const uint32_t height_dst)
{
    std::cout << "TESTING GPU PREPROCESS CONFIGURE" << std::endl;
    std::cout << "CONFIGURE INFO width_src: " << width_src << " height_src: " << height_src
              << " width_dst: " << width_dst << " height_dst: " << height_dst << std::endl;

    // 0. CL scheduler && init input tensor
    // CLScheduler::get().default_init();
    CLBackendType backend_type = CLBackendType::Native;
    auto ctx_dev_err = create_opencl_context_and_device(backend_type);

    // Print device info from the returned tuple (context, device, error code)
    std::cout << "OpenCL device info: " << std::endl;
    std::cout << "-------------------" << std::endl;
    std::cout << "Device name: " << std::get<1>(ctx_dev_err).getInfo<CL_DEVICE_NAME>() << std::endl;

    CLScheduler::get().default_init_with_context(std::get<1>(ctx_dev_err), std::get<0>(ctx_dev_err), nullptr);

    inputtensor.allocator()->init(TensorInfo(TensorShape(3U, width_src, height_src), 1, DataType::U8).set_data_layout(DataLayout::NHWC));

    inputtensor.info()->set_format(Format::U8);
}

void ImageProcess::GpuPreProcessXBGR(const char *src, const uint32_t width_src, const uint32_t height_src, char *dest, const uint32_t width_dst, const uint32_t height_dst)
{
    // 0. CL scheduler
    std::cout << "GpuPreProcessXBGR start" << std::endl;
    std::cout << "PROCESS INFO: width_src: " << width_src << ", height_src: " << height_src
              << ", width_dst: " << width_dst << ", height_dst: " << height_dst << std::endl;
    std::cout << "GpuPreProcessXBGR default init cl shedule" << std::endl;
    // CLScheduler::get().default_init();
}
```

Platform: RK3399, GPU: Mali-T860, ACL branch v22.08
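To validate what the GPU path produces, a CPU reference that fills a buffer with the same layout as `inputtensor` can help. In ACL's `TensorShape`, dimension 0 varies fastest, so `TensorShape(3U, width, height)` with NHWC stores channel, then x, then y. The sketch below is hypothetical: it assumes a tightly packed buffer (an ACL tensor may add padding, so compare through the tensor's strides), source pixels stored in memory as X, B, G, R, R/G/B output order, and a nearest-neighbour resize. `nhwc_offset` and `xbgr_to_rgb_nearest` are illustrative names, not ACL APIs, and the real `GpuPreProcessXBGR` may do something different.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Byte offset of element (c, x, y) in a packed NHWC U8 buffer with ACL-style shape (C, W, H).
// Dimension 0 (channels) varies fastest. ASSUMPTION: no padding.
inline std::size_t nhwc_offset(std::size_t c, std::size_t x, std::size_t y, std::size_t channels, std::size_t width)
{
    return c + channels * (x + width * y);
}

// HYPOTHETICAL CPU reference: XBGR (4 bytes/pixel) -> 3-channel RGB, nearest-neighbour resize.
// ASSUMPTION: memory byte order within a source pixel is X, B, G, R.
std::vector<std::uint8_t> xbgr_to_rgb_nearest(const std::uint8_t *src, std::uint32_t width_src, std::uint32_t height_src,
                                              std::uint32_t width_dst, std::uint32_t height_dst)
{
    assert(src != nullptr && width_src > 0 && height_src > 0);
    constexpr std::size_t src_bpp = 4;            // bytes per XBGR pixel
    constexpr std::size_t kR = 3, kG = 2, kB = 1; // ASSUMED byte positions inside a pixel
    std::vector<std::uint8_t> dst(std::size_t{3} * width_dst * height_dst);
    for (std::uint32_t y = 0; y < height_dst; ++y)
    {
        // Nearest source row, top-left aligned (no half-pixel offset)
        const std::size_t sy = static_cast<std::uint64_t>(y) * height_src / height_dst;
        for (std::uint32_t x = 0; x < width_dst; ++x)
        {
            const std::size_t sx = static_cast<std::uint64_t>(x) * width_src / width_dst;
            const std::uint8_t *px = src + (sy * width_src + sx) * src_bpp;
            dst[nhwc_offset(0, x, y, 3, width_dst)] = px[kR];
            dst[nhwc_offset(1, x, y, 3, width_dst)] = px[kG];
            dst[nhwc_offset(2, x, y, 3, width_dst)] = px[kB];
        }
    }
    return dst;
}
```

A `const char *` buffer like `src` in `GpuPreProcessXBGR` can be passed with `reinterpret_cast<const std::uint8_t *>(src)`. Nearest-neighbour was picked only because it is easy to check by hand; if the GPU path uses bilinear scaling, the reference has to match it.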

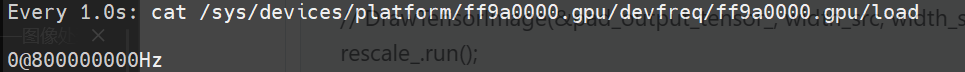

On some RK3399 boards, this program runs and uses the GPU normally. As shown above, the GPU load ranges from 20 to 100.


The recognition thread uses about 10% CPU.


However, when the same program runs on some other RK3399 boards, the thread that calls the function shows high CPU usage but low GPU usage.
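To quantify "high CPU usage" for the calling thread without relying on top/htop sampling, you can compare that thread's CPU time with the wall-clock time around the GPU call. A ratio near 0 means the thread mostly waits while the GPU works. A ratio near 1 means it keeps a core busy, for example by busy-waiting for completion or because the work ran on the CPU. This minimal sketch assumes Linux/POSIX `clock_gettime(CLOCK_THREAD_CPUTIME_ID, ...)`. `thread_cpu_seconds` and `measure_cpu_ratio` are hypothetical helpers.

```cpp
#include <cassert>
#include <chrono>
#include <time.h>

// CPU time consumed so far by the calling thread, in seconds (POSIX, Linux).
double thread_cpu_seconds()
{
    timespec ts{};
    clock_gettime(CLOCK_THREAD_CPUTIME_ID, &ts);
    return static_cast<double>(ts.tv_sec) + static_cast<double>(ts.tv_nsec) * 1e-9;
}

// Run fn() on the calling thread and return (thread CPU time) / (wall-clock time).
// Near 0.0: the thread mostly slept or blocked. Near 1.0: it kept one core busy.
template <typename F>
double measure_cpu_ratio(F &&fn)
{
    const auto   wall_start = std::chrono::steady_clock::now();
    const double cpu_start  = thread_cpu_seconds();
    fn();
    const double cpu  = thread_cpu_seconds() - cpu_start;
    const double wall = std::chrono::duration<double>(std::chrono::steady_clock::now() - wall_start).count();
    return wall > 0.0 ? cpu / wall : 0.0;
}
```

For example, on each board you could wrap `[&] { fn.run(); CLScheduler::get().sync(); }`, where `fn` stands for whichever configured CL function your test runs. Comparing the ratios would show whether the extra CPU time is spent while waiting for the GPU.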

Strangely, every board reports the device name Mali-T860 through `std::cout << "Device name: " << std::get<1>(ctx_dev_err).getInfo<CL_DEVICE_NAME>() << std::endl;`.