Have you tried to do a face swap in supervised mode?

Closed adeptflax closed 3 years ago

Have you tried to do a face swap in supervised mode?

How would I do that?

For the reference we also provide fully-supervised segmentation. For fully-supervised add --supervised option. And run git clone https://github.com/AliaksandrSiarohin/face-makeup.PyTorch face_parsing which is a fork of @zllrunning.

That's what I am doing in the code I posted. I'm using that face parser in the code.

Motion segmentation network is not needed than. You can use fomm.

what's fomm?

First order motion model.

This is the code for running and loading the model. I have first_order_motion_model=True on load_checkpoints().

from part_swap import load_checkpoints

cpu = True

reconstruction_module, segmentation_module = load_checkpoints(config='config/vox-512-sem-10segments.yaml',

checkpoint='log/vox-512-sem-10segments 26-04-21 19:25:33/00000005-checkpoint.pth.tar',

blend_scale=0.125, first_order_motion_model=True,cpu=cpu)

from part_swap import make_video, load_face_parser

face_parser = load_face_parser(cpu=cpu)

def swap(source_image, target_image):

shape = source_image.shape

source_image = resize(source_image, (512, 512))[..., :3]

target_video = [resize(target_image, (512, 512))[..., :3]]

out = make_video(swap_index=[1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15], source_image = source_image,

target_video = target_video, use_source_segmentation=True, segmentation_module=segmentation_module,

reconstruction_module=reconstruction_module, face_parser=face_parser, cpu=cpu)[0]

return resize(out, (shape[0], shape[1]))OK, but checkpoint should also be one from first order.

oh, I didn't realized you could use the first order model directly to do it. I thought had to train a face swap on top of it.

I dunno I'll use that.

Same model I was trying to train on issue https://github.com/AliaksandrSiarohin/motion-cosegmentation/issues/43. The face swap model doesn't output very good results. How do I improve the model results? Face swap examples on epoch 5 https://imgur.com/a/lMhvZOf and on epoch 10 https://imgur.com/a/yCNyjx9 on images.

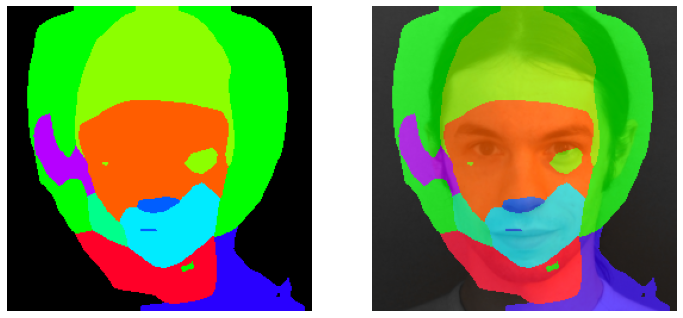

Segmentation module example output on epoch 5:

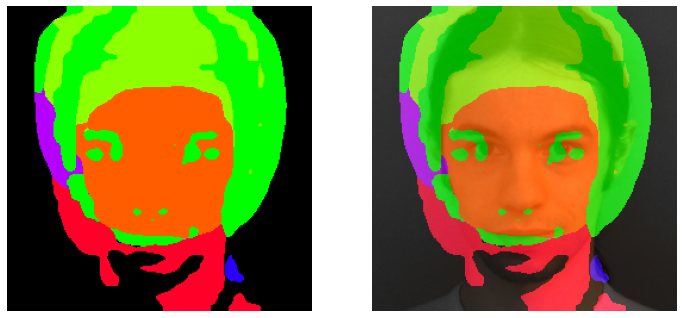

Segmentation module example output on epoch 10:

I replaced this line: https://github.com/AliaksandrSiarohin/motion-cosegmentation/blob/571e26f04b8c40c5454a158b4b570e4ba034c856/part_swap.py#L88 With this:

I did that in order to avoid tensors errors about tensors being different size.

Logs messages when I reran the code to get the warning messages at beginning. I didn't keep the logs when I trained the model.

Code I used to get the face swap output:

That's all the information I could think of sending.