When using the "None" option for the private DNS zone, cluster creation succeeds with the on-prem DNS servers configured on the VNet. However, after creation the portal no longer seems able to interact with the cluster.

Looking at the network traffic, the portal appears to be trying to resolve a DNS record of the form `-priv.portal.hcp.centralus.azmk8s.io`. As far as I can tell, that record is not created when the None option is used.

Is that expected behavior? If so, is that GUID available anywhere so that we can look it up programmatically and create a record on our internal DNS server?
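
If the portal FQDN is exposed on the cluster resource, the record could be generated from it instead of being copied by hand. The sketch below is a minimal illustration under several assumptions: it assumes the cluster JSON (for example the output of `az aks show -g <resource-group> -n <cluster> -o json`) carries the portal FQDN under a key named `azurePortalFqdn`, which is believed to exist only in newer AKS API versions and should be verified; `portal_record`, `portal_prefix`, and the TTL default are hypothetical; and the API server's private endpoint IP must be obtained separately.

```python
import json

# ASSUMPTION: newer AKS API versions are believed to expose the portal FQDN
# under this key in `az aks show` output; verify against your CLI/API version.
DEFAULT_PORTAL_KEY = "azurePortalFqdn"


def portal_prefix(fqdn: str) -> str:
    """Return the leading label of the portal FQDN without its '-priv' suffix."""
    label = fqdn.split(".", 1)[0]
    suffix = "-priv"
    return label[: -len(suffix)] if label.endswith(suffix) else label


def portal_record(cluster_json: str, private_ip: str,
                  key: str = DEFAULT_PORTAL_KEY, ttl: int = 300) -> str:
    """Build a BIND-style A record pointing the portal FQDN at `private_ip`.

    Hypothetical helper: `private_ip` is the API server's private endpoint
    address, which this function does not look up; supply it yourself.
    """
    cluster = json.loads(cluster_json)
    fqdn = cluster.get(key)
    if not fqdn:
        raise KeyError(f"{key!r} not found in cluster JSON")
    # The trailing dot marks the name as fully qualified in a zone file.
    return f"{fqdn.rstrip('.')}. {ttl} IN A {private_ip}"
```

Taking the key as a parameter keeps the helper usable if the property name differs in your API version; the resulting line can be added to a zone on the internal DNS server.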

Can we create a DNS zone after the AKS cluster has been deployed? Would that work? @feiskyer @miwithro @viralpat218 @anttipo

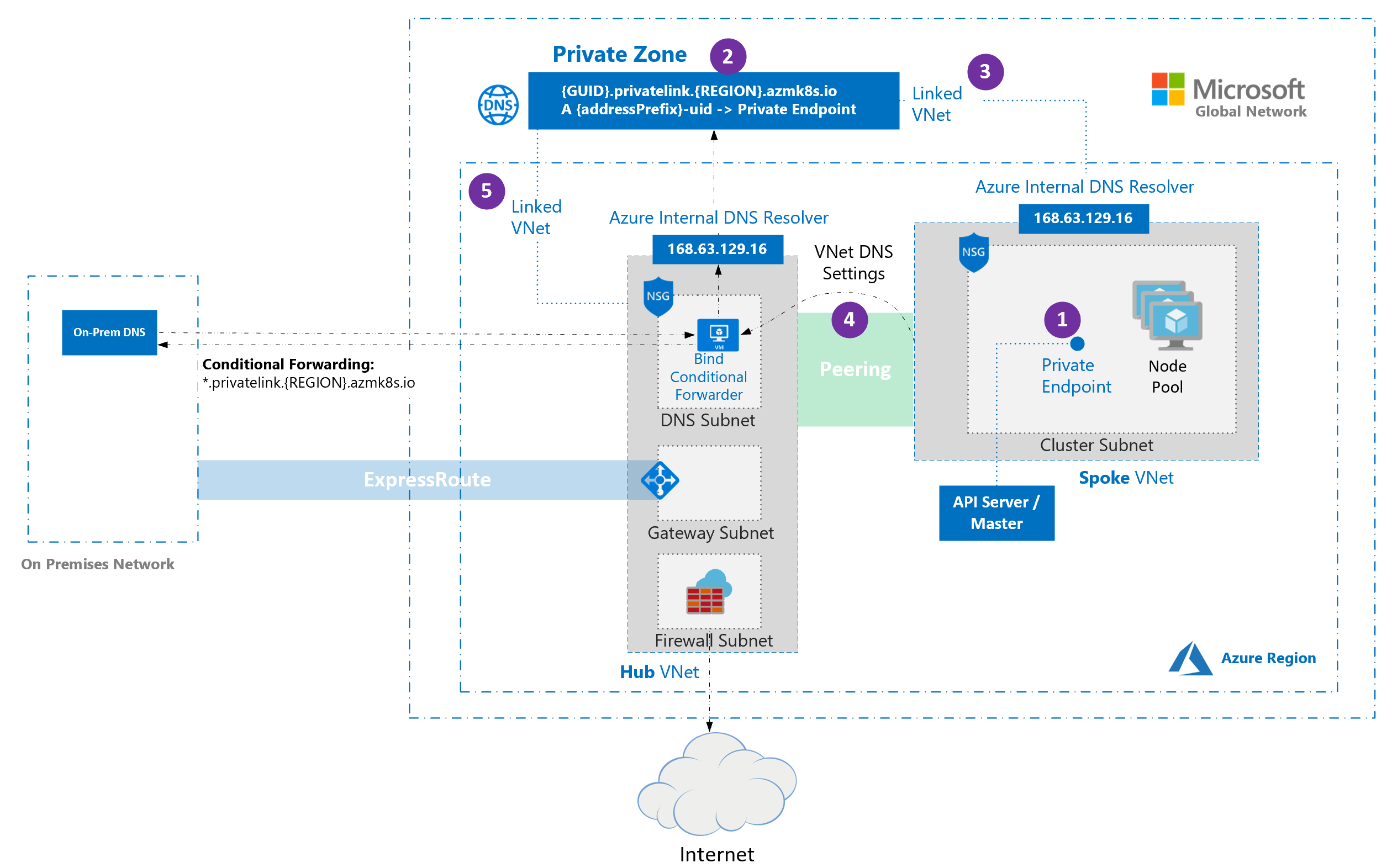

The AKS team has uncovered issues with BYO VNet + DNS setups in AKS private clusters: https://docs.microsoft.com/en-us/azure/aks/private-clusters

In some cases, AKS private cluster creation fails for BYO VNet configurations when the private DNS zone is not properly linked to the BYO VNet or to your hub network. You may see messages like:

> Agents are unable to resolve Kubernetes API server name. It's likely custom DNS server is not correctly configured, please see https://aka.ms/aks/private-cluster#hub-and-spoke-with-custom-dns for more information

Today, worker-node-to-control-plane communication requires the private DNS zone for API server name resolution.
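
To check whether a given machine can resolve the API server name (the condition the error message above reports), a quick lookup from a node or VM inside the VNet can help. This is a minimal sketch using only Python's standard `socket.getaddrinfo`; `resolve_addresses` is a hypothetical helper, and the hostname you pass should be your cluster's private API server FQDN.

```python
import socket
import sys
from typing import List


def resolve_addresses(hostname: str) -> List[str]:
    """Return the sorted, de-duplicated IPs `hostname` resolves to, or [] on failure."""
    try:
        infos = socket.getaddrinfo(hostname, None)
    except socket.gaierror:
        # The configured DNS servers could not resolve the name.
        return []
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    return sorted({info[4][0] for info in infos})


if __name__ == "__main__":
    # Usage: python check_dns.py <private-api-server-fqdn> [...]
    for name in sys.argv[1:]:
        addrs = resolve_addresses(name)
        print(f"{name}: {', '.join(addrs) if addrs else 'NOT RESOLVED'}")
```

An empty result from inside the VNet suggests that neither the private DNS zone link nor the custom DNS forwarding covers that name.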

Going forward, we are working on removing the private DNS zone dependency for node-to-control-plane communication.

The current ETA for public preview is summer 2020.

In the meantime, you may want to consider one of the workarounds:

Related issues:

- 1508