@gapra-msft @rickle-msft could you please take a look?

vida-bandara-by closed this issue 4 years ago.


@vida-bandara-by Just to be clear, are you saying this is a problem with the SDK or with the portal? It seems like you're suggesting the portal has a bug, but I want to make sure.

Also, can you try making a Get Properties call on the file in question and check what size is returned in the HTTP response?

Thank you @rickle-msft for your help with this matter. I'm sorry I wasn't clear: I suspect the issue is with the SDK.

The actual size of the file is under 1 MB, and the storage account metrics reflect the usage correctly.

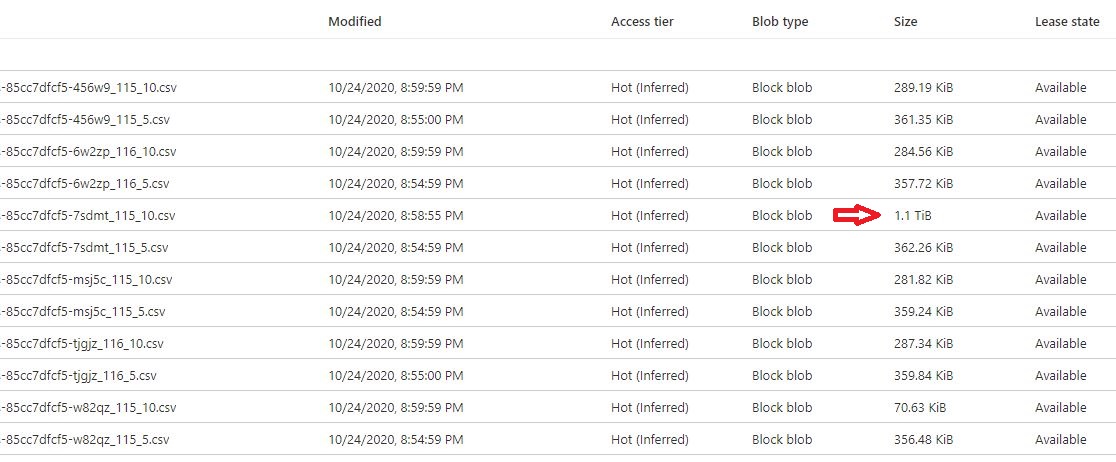

However, Storage Explorer (in the portal) shows a size of over 1 TB (please see the original message).

We see the large file size in Microsoft Azure Data Explorer as well.

I tried getting the file size as you suggested, using this code:

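```java
import com.azure.storage.common.StorageSharedKeyCredential;
import com.azure.storage.file.datalake.DataLakeFileClient;
import com.azure.storage.file.datalake.DataLakeFileSystemClient;
import com.azure.storage.file.datalake.DataLakeServiceClient;
import com.azure.storage.file.datalake.DataLakeServiceClientBuilder;
import com.azure.storage.file.datalake.models.PathProperties;

public class AdlStats {

    private static final String DATA_LAKE = "<<DATALAKE>>";   // storage account name
    private static final String SECRET = "<<SECRET>>";        // account key
    private static final String CONTAINER = "<<CONTAINER>>";  // file system (container) name

    private final DataLakeFileSystemClient fileSystemClient;

    // Constructor name must match the class name (was "AdlStat").
    public AdlStats() {
        final StorageSharedKeyCredential credential = new StorageSharedKeyCredential(DATA_LAKE, SECRET);

        final DataLakeServiceClient dataLakeServiceClient = new DataLakeServiceClientBuilder()
                .endpoint(String.format("https://%s.dfs.core.windows.net", DATA_LAKE))
                .credential(credential)
                .buildClient();

        fileSystemClient = dataLakeServiceClient.getFileSystemClient(CONTAINER);
    }

    // Issues a Get Properties request and returns the file size reported by the service.
    public long getFileSize(final String filename) {
        final DataLakeFileClient fileClient = fileSystemClient.getFileClient(filename);
        final PathProperties properties = fileClient.getProperties();
        return properties.getFileSize();
    }
}
```

When the following is invoked: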

```java
final AdlStats adlStats = new AdlStats();
final long fileSize = adlStats.getFileSize("85cc7dfcf5-7sdmt_115_10.csv");
System.out.println(fileSize);
```

we got

```
1212245672256
```

whereas the actual amount of data written to the file is under 1 MB.
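As a quick sanity check on that number, here is a small sketch that converts the reported byte count into decimal terabytes and binary tebibytes and compares it against an expected upper bound. `SizeCheck` and its methods are hypothetical helpers, not part of the Azure SDK, and the 1 MiB bound is an assumption standing in for "under 1MB".

```java
import java.util.Locale;

// Hypothetical helper (not part of the Azure SDK): puts a raw byte count, such as
// the value returned by PathProperties.getFileSize(), into human-readable units.
public class SizeCheck {

    private static final double BYTES_PER_TB = 1e12;                           // decimal terabyte
    private static final double BYTES_PER_TIB = 1024.0 * 1024 * 1024 * 1024;  // binary tebibyte

    public static double toTerabytes(long bytes) {
        return bytes / BYTES_PER_TB;
    }

    public static double toTebibytes(long bytes) {
        return bytes / BYTES_PER_TIB;
    }

    // Locale.ROOT keeps '.' as the decimal separator regardless of the JVM locale.
    public static String describe(long bytes) {
        return String.format(Locale.ROOT, "%.3f TB (%.3f TiB)", toTerabytes(bytes), toTebibytes(bytes));
    }

    // True when the reported size is larger than the most we expect the file to hold.
    public static boolean exceedsExpected(long reportedBytes, long expectedMaxBytes) {
        return reportedBytes > expectedMaxBytes;
    }

    public static void main(String[] args) {
        long reported = 1212245672256L;    // size printed by getFileSize() in this report
        long expectedMax = 1L << 20;       // assumed bound: 1 MiB ("under 1MB")
        System.out.println(describe(reported));                      // 1.212 TB (1.103 TiB)
        System.out.println(exceedsExpected(reported, expectedMax));  // true
    }
}
```

The reported value works out to roughly 1.21 TB (about 1.10 TiB), consistent with the "over 1TB" shown in Storage Explorer and about six orders of magnitude more than the data actually written.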

Thanks again for your help!

Hi @vida-bandara-by, can I please have the account name and the file paths where you see these massive files? I will work with my friends on the service team to get this resolved for you. If you don't want to post them here, send me an email with the information.

Thanks, Ashish

Thank you so much @amishra-dev for your kind offer! Let me send you the file URL by email. Thanks again!!!

@vida-bandara-by Sorry for the delay. You can send an email to

Thank you very much for reaching out @rickle-msft! Let me connect you via email. Thanks again!

I am going to close this issue since it has been taken offline and is not a bug in the SDK.

We have an application that constantly writes to Data Lake. At any given time, we are appending to about 50 CSV files. Rows are appended to each file over a period of 5 minutes, and each file is under 1 MB by the end of those 5 minutes.
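Assuming the writer uses the Data Lake append-then-flush pattern (the issue does not show the writer code), each of the roughly 50 open files needs its own running offset: every append must land at the current end of the file, and the flush position must equal the total file length after all appended data. The sketch below keeps that bookkeeping in one place. `RowAppender` and `AppendTarget` are hypothetical names; in azure-storage-file-datalake the corresponding calls would be `DataLakeFileClient.append(InputStream data, long fileOffset, long length)` and `flush(long position)`.

```java
import java.nio.charset.StandardCharsets;

// Sketch (assumed write pattern): per-file offset bookkeeping for append-then-flush.
public class RowAppender {

    // Hypothetical stand-in for DataLakeFileClient's append(InputStream, long, long)
    // and flush(long) calls, so the bookkeeping can be shown without a storage account.
    public interface AppendTarget {
        void append(byte[] data, long fileOffset);
        void flush(long position);
    }

    private final AppendTarget target;
    private long nextOffset; // bytes appended so far, i.e. the offset of the next append

    public RowAppender(AppendTarget target, long existingLength) {
        this.target = target;
        this.nextOffset = existingLength; // 0 for a newly created file
    }

    // Appends one CSV row at the current end of the file (not yet committed).
    public void appendRow(String row) {
        byte[] bytes = (row + "\n").getBytes(StandardCharsets.UTF_8);
        target.append(bytes, nextOffset);
        nextOffset += bytes.length; // advance by bytes written, not by characters
    }

    // Commits the appended data; the flush position is the total file length.
    public long flush() {
        target.flush(nextOffset);
        return nextOffset;
    }
}
```

Advancing the offset by the encoded byte length rather than by `String.length()` keeps the offsets correct for non-ASCII rows, and using one `RowAppender` per file keeps the concurrent files from sharing offset state.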

The SDK version:

```groovy
compile group: 'com.azure', name: 'azure-storage-file-datalake', version: '12.2.0'
```

Once every couple of weeks or so, we see a file showing a size of over 1 TB (the actual size is under 1 MB). Please see the figure:

A few observations: