As discussed offline: yeah!!

Closed CraigFeldspar closed 2 years ago

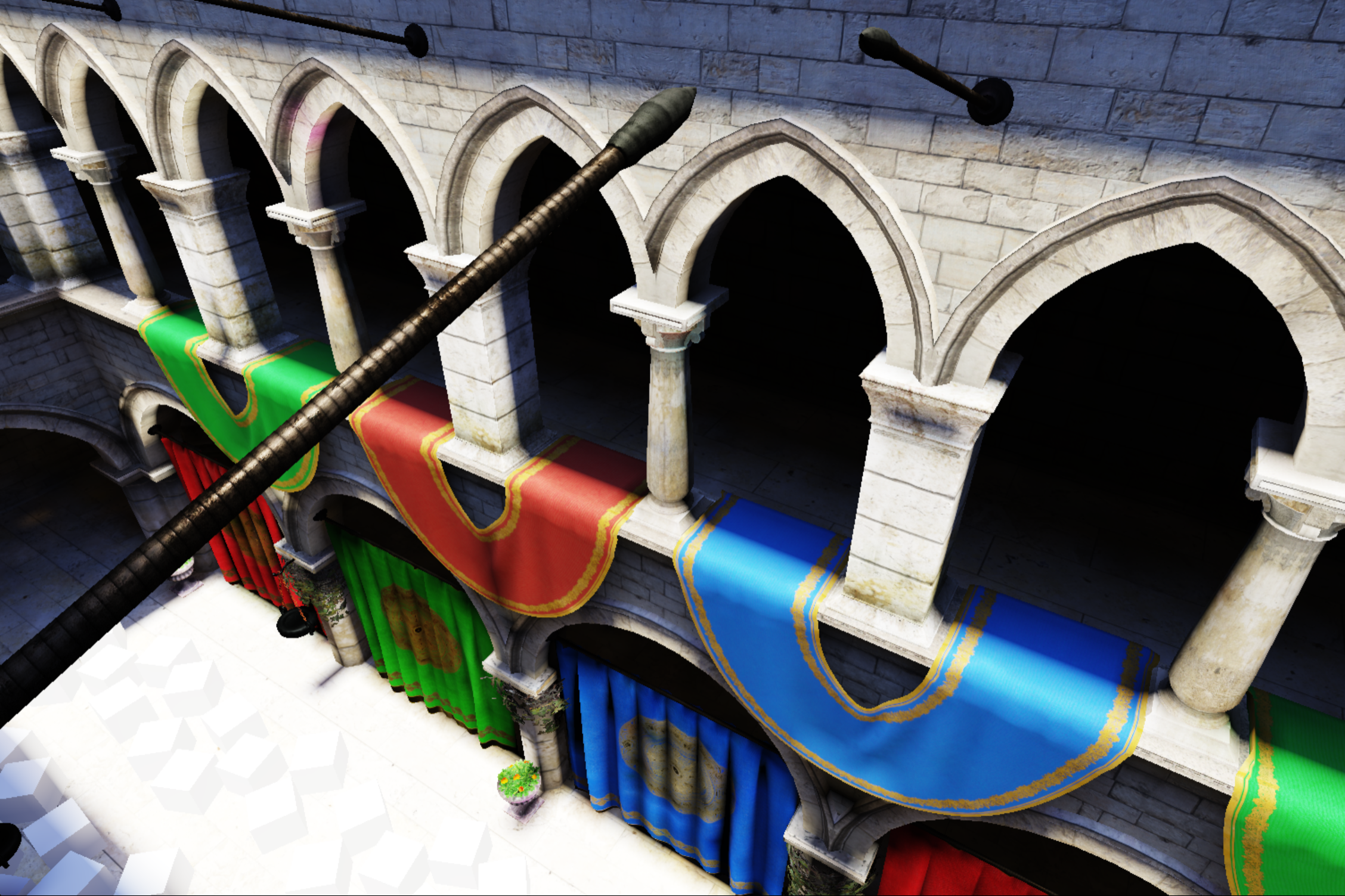

Progressive radiosity is looking pretty sharp so far !

https://drive.google.com/file/d/1JcY1IYmiKKqh1XZNKWymbWpjLYkSLXVz/view?usp=sharing

WOOOOT! can't wait to try it!! Why is it blinking?

i interleaved some renders of the scene during the baking process to see the progress. Somehow the framebuffer is cleared by something making it blink. Will fix it for nicer viewing :)

What have you planned for the workflow: will the lighting be computed each time the scene is loaded, or will it be saved to a cache system, allowing the final user to load the lighting without any calculation?

@Vinc3r for now i'm focusing on making the baking work on the fly. Then i'll work on exporting the created lightmaps, and probably other baking info like spherical harmonics on probes.

Some news?? CAN'T WAIT TO TRY IT ;D

CAN’T WAIT AS WELL =D

Some updates for you guys :

The radiosity renderer is working pretty well, but still has strong prerequisites. We need proper lightmap UV coordinates on all meshes, all sharing the same scale (one texel side should be approx. 0.25 m in world scale).

We also need to split meshes into "surfaces", that share the same lightmap bit, without occluding each other.

Finally, since I'm using spherical projection for the visibility pass, I need to retessellate the scene at a higher resolution to limit distortion from the rasterization. I'm doing this on the fly, but I don't know how many triangles I can handle before it crashes horribly.
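To make the texel-scale prerequisite above concrete, here is a minimal sketch of how a lightmap size could be derived from a surface's world-space dimensions. The helper name `lightmap_texels` and the rectangular-surface assumption are illustrative only and not part of the radiosity renderer; the 0.25 m figure is the approximate value quoted above.

```rust
/// World-space length covered by one lightmap texel side, in metres
/// (the approximate figure quoted in the comment above).
const TEXEL_SIZE_M: f32 = 0.25;

/// Hypothetical helper: number of texels needed along each axis of a
/// rectangular surface measuring `width_m` x `height_m` in world units.
/// Rounds up so the surface is never under-sampled, with a minimum of 1 texel.
pub fn lightmap_texels(width_m: f32, height_m: f32) -> (u32, u32) {
    let texels = |len: f32| ((len / TEXEL_SIZE_M).ceil() as u32).max(1);
    (texels(width_m), texels(height_m))
}
```

Under these assumptions a 4 m by 2.5 m wall would need 16 by 10 texels, so every mesh in the scene ends up with the same texel density.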

So as you can see, there is still a lot of work, but i'm hoping i can tackle all of this. Biggest work imo is the automatic UV mapping, but I think i can port some code from Blender.

I'll keep you posted.

Some news?? CAN'T WAIT TO TRY IT ;D

Looking at this question from another angle, why can't it interface with the existing open source raytracing renderer?

The raytracing renderer may not necessarily run on the web.

@FishOrBear I think regular lightmaps baked in offline renderers are already supported: https://doc.babylonjs.com/babylon101/lights#lightmaps

Maybe this project is of interest as well: https://lighttracer.org/

I was just wondering if this is still active?

Some news?? CAN'T WAIT TO TRY IT ;D

Any updates on this ? :)

@br-matt unfortunately not for the moment, as I had to drop the subject a while ago. But maybe if we kindly ask @deltakosh for a future milestone? 😄

Would be amazing, let's see how you could potentially collab with @CraigFeldspar ???

Some news?? CAN'T WAIT TO TRY IT ;

@CraigFeldspar can probably provide some ?

cannot wait to try this too!

Yo guys,

Unfortunately I'm not working on this feature anymore. What I got so far required lots of manual work setting up UVs correctly and handling different layers of depth. I don't think I will have any bandwidth to work on that any time soon, but if someone would like to start over or continue this work, I'd be happy to provide insights.

ok, closing the issue. Please re-open if needed.

@CraigFeldspar Hi, do you have a code base or should one start from scratch? Would your code be able to help with light baking? I am currently pondering whether I should do it inside BJS or find another way. What's your experience so far concerning the difficulty of implementing this in BJS? Just taking the temperature before deciding. Thanks

Don't think a code base would help you much. Best to stay close to the recent academic literature and different ways to solve global illumination (Nvidia has some good papers). Then it's all about finding a data structure for parsing the scene (e.g. voxels), a way to store global illumination data and incorporate them into the shaders etc.

You could also look into what Godot did recently (signed distance fields).

Might also be good to do this exclusively for WebGPU, because it has far fewer limitations around async code / compute shaders etc - don't think you'd get anything decent / universal with WebGL

best of luck

Thanks, I guess I will do it otherwise 😉

I've come up with a baked global illumination system for my engine that works in WebGL. I haven't had time to do a proper writeup but I thought I'd share something here. It's heavily based on this frostbite presentation: https://media.contentapi.ea.com/content/dam/eacom/frostbite/files/gdc2018-precomputedgiobalilluminationinfrostbite.pdf but extends the idea to light volumes consisting of 3D textures.

Firstly, I bake 4 textures out with the Mitsuba renderer using my script here: https://github.com/expenses/mitsuba-lightvol-baking/blob/master/lightvol.py and some tools from https://github.com/expenses/lightmap-tools/, one texture for each spherical harmonic coefficient. The first texture is the average irradiance per probe, while the other coefficients contain the degree to which the irradiance is coming from one direction (ranging from -1 to 1; these can be scaled down to a 0 to 1 range but I haven't done that yet). These textures are stored as KTX2s (currently in an rgba16f format) and loaded at runtime.
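As an illustration of the rescaling mentioned above (which the baking script does not do yet), a minimal sketch of remapping a directional coefficient from the -1 to 1 range into 0 to 1 for storage, and back again at sampling time, could look like this. The function names are hypothetical and not taken from the linked repositories.

```rust
/// Hypothetical encode step: map a directional coefficient from [-1, 1]
/// into [0, 1] so it fits an unsigned normalized texture format.
/// Values outside the expected range are clamped first.
pub fn encode_unorm(coefficient: f32) -> f32 {
    coefficient.clamp(-1.0, 1.0) * 0.5 + 0.5
}

/// Hypothetical decode step, applied after sampling: map [0, 1] back to [-1, 1].
pub fn decode_unorm(encoded: f32) -> f32 {
    encoded * 2.0 - 1.0
}
```

Because the mapping is affine, hardware interpolation of the encoded values followed by a single decode gives the same result as interpolating the original values.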

The fragment shader translates the fragment position to coordinates in the 3D textures and samples the spherical harmonics, letting the hardware do the interpolation. To avoid banding the spherical harmonics are sampled in a non-linear fashion (see page 55 of the frostbite pdf and https://web.archive.org/web/20160313132301/http://www.geomerics.com/wp-content/uploads/2015/08/CEDEC_Geomerics_ReconstructingDiffuseLighting1.pdf).

Here's the code for that (in Rust):

```rust
pub type L1SphericalHarmonics = [Vec3; 4];

pub fn spherical_harmonics_channel_vectors(harmonics: L1SphericalHarmonics) -> (Vec3, Vec3, Vec3) {
    (
        Vec3::new(harmonics[1].x, harmonics[2].x, harmonics[3].x),
        Vec3::new(harmonics[1].y, harmonics[2].y, harmonics[3].y),
        Vec3::new(harmonics[1].z, harmonics[2].z, harmonics[3].z),
    )
}

// See https://grahamhazel.com/blog/2017/12/22/converting-sh-radiance-to-irradiance/
// https://web.archive.org/web/20160313132301/http://www.geomerics.com/wp-content/uploads/2015/08/CEDEC_Geomerics_ReconstructingDiffuseLighting1.pdf
// https://media.contentapi.ea.com/content/dam/eacom/frostbite/files/gdc2018-precomputedgiobalilluminationinfrostbite.pdf
pub fn eval_spherical_harmonics_nonlinear(harmonics: L1SphericalHarmonics, normal: Vec3) -> Vec3 {
    fn eval_scalar(r_0: f32, r_1_div_r_0: Vec3, normal: Vec3) -> f32 {
        let r1_r0_ratio = r_1_div_r_0.length();
        let a = (1.0 - r1_r0_ratio) / (1.0 + r1_r0_ratio);
        let p = 1.0 + 2.0 * r1_r0_ratio;
        let q = 0.5 * (1.0 + r_1_div_r_0.dot(normal));
        r_0 * (a + (1.0 - a) * (p + 1.0) * q.powf(p))
    }

    let (red_vector, green_vector, blue_vector) = spherical_harmonics_channel_vectors(harmonics);

    Vec3::new(
        eval_scalar(harmonics[0].x, red_vector, normal),
        eval_scalar(harmonics[0].y, green_vector, normal),
        eval_scalar(harmonics[0].z, blue_vector, normal),
    )
}
```

For specular lighting, I use an approximation based on the average incoming light direction:

```rust
fn spherical_harmonics_specular_approximation(
    spherical_harmonics: [Vec3; 4],
    normal: glam_pbr::Normal,
    view: glam_pbr::View,
    material_params: glam_pbr::MaterialParams,
) -> Vec3 {
    let (red, green, blue) =
        shared_structs::spherical_harmonics_channel_vectors(spherical_harmonics);

    let average_light_direction = (red + green + blue) / 3.0;
    let directional_length = average_light_direction.length();

    let smoothness = 1.0 - material_params.perceptual_roughness.0;
    let adjusted_smoothness = smoothness * directional_length.sqrt();
    let adjusted_roughness = glam_pbr::PerceptualRoughness(1.0 - adjusted_smoothness);
    let actual_roughness = adjusted_roughness.as_actual_roughness();

    let light = glam_pbr::Light(average_light_direction / directional_length);
    let halfway = glam_pbr::Halfway::new(&view, &light);
    let view_dot_halfway = glam_pbr::Dot::new(&view, &halfway);

    let strength = spherical_harmonics[0] * directional_length;

    let f0 = glam_pbr::calculate_combined_f0(material_params);
    let f90 = glam_pbr::calculate_combined_f90(material_params);
    let fresnel = glam_pbr::fresnel_schlick(view_dot_halfway, f0, f90);

    let normal_dot_light = glam_pbr::Dot::new(&normal, &light);

    glam_pbr::specular_brdf(
        glam_pbr::Dot::new(&normal, &view),
        normal_dot_light,
        glam_pbr::Dot::new(&normal, &halfway),
        actual_roughness,
        fresnel,
    ) * strength
        * normal_dot_light.value
}
```

Here's a few screenshots:

I don't have a demo out yet but hopefully I will in a bit.
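For readers wondering about the step where the fragment position is translated to coordinates in the 3D textures, here is a minimal sketch of one way it could work. It assumes the light volume is an axis-aligned box with known, non-degenerate bounds; the function and parameter names are hypothetical and not taken from the engine described above.

```rust
/// Hypothetical mapping from a world-space position to normalized 3D texture
/// coordinates inside an axis-aligned light volume.
/// Assumes `volume_max[i] > volume_min[i]` on every axis.
pub fn volume_uvw(position: [f32; 3], volume_min: [f32; 3], volume_max: [f32; 3]) -> [f32; 3] {
    let mut uvw = [0.0; 3];
    for i in 0..3 {
        let extent = volume_max[i] - volume_min[i];
        // Clamp so positions outside the volume reuse the nearest edge texel.
        uvw[i] = ((position[i] - volume_min[i]) / extent).clamp(0.0, 1.0);
    }
    uvw
}
```

The resulting coordinates would then be used to sample each of the four coefficient textures with linear filtering, so the hardware performs the interpolation between probes.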

Very promising!

Nice, how expensive is it? (Time to render..) I am considering luxcore to do the rendering, but it means a lot of configuration beforehand..

> Nice, how expensive is it? (Time to render..) I am considering luxcore to do the rendering, but it means a lot of configuration beforehand..

The baking step for the light volumes in the screenshots was pretty fast. I bake out the direct and indirect lighting separately and combine them later. I think I used around 15000 samples for the direct lighting and maybe 4096 for indirect? Took maybe 15-30 minutes on my laptop's CPU. Using CUDA would naturally be faster.

That's really good Ashley 🙂

Implement a static global illumination solver, only for diffuse lighting in the first place. Several algorithms could be tried: voxel GI, photon mapping/path tracing, light propagation volumes, virtual point lights, Metropolis light transport...

Leverage WebAssembly for low-level operations?