Thanks for the python3 fixes. Hmm, could you post your training config here? Changing line 70 in geometry.py should not be necessary.

Closed davideCremona closed 4 years ago

Thanks for the python3 fixes. Hmm, could you post your training config here? Changing line 70 in geometry.py should not be necessary.

Hi Martin, thank you for responding. I had to change that line in geometry.py because it gave me "OutOfBounds" when reading the list of vertices.

I think I have fixed this looking at the .ply that I was loading. The file is listing vertices and then faces, but the code in geometry.py was reading the vertices in order. For example, the original code is assuming that vertices are face-ordered like this: [vertex1_face1, vertex2_face1, vertex3_face1, vertex1_face2, vertex2_face2, vertex3_face2, ... ] But in a normal .ply file you find: [vertex,1, vertex2, vertex3,.... ] and then a description of how faces are build, referencing vertices with their index: [(0, 4, 100), (0, 3, 10), ...]

So you can immagine that reading a standard .ply file assuming that vertices are face-ordered will cause the sort of glitches that are shown in my previous post.

I have changed the way vertices are passed to "compute_normals" in geometry.py to have a list of face-ordered vertices, copying some vertices if necessary.

Just to be sure: are you pre-processing the .ply files with some code?

I have another issue to be honest: I have trained an AAE with correctly rendered images but now I am getting the same (wrong) prediction for different test poses:

Test Image 1:

predicted Rotation:

predicted Rotation:

[[ 1.0000000e+00 0.0000000e+00 0.0000000e+00] [ 0.0000000e+00 -1.0000000e+00 -1.2246468e-16] [ 0.0000000e+00 1.2246468e-16 -1.0000000e+00]]

pred_view 1:

Test image 2:

predicted Rotation (same as test image 1):

[[ 1.0000000e+00 0.0000000e+00 0.0000000e+00] [ 0.0000000e+00 -1.0000000e+00 -1.2246468e-16] [ 0.0000000e+00 1.2246468e-16 -1.0000000e+00]]

pred_view 2:

I'm thinking that there can be some problem in the codebook, like KNN failure or something similar. Do you have some idea about this?

I've did some debugging and I don't know why but if I set a breakpoint at line 59 of file "codebook.py" and run debug, the "cosine_similarity" list is filled with zeros.

How the codebook is built? Where are the latent representations with the corresponding rotations memorized?

Ok, solved by executing "ae_embed.py" after the training. Now I'm getting correctly predicted rotations and pass to the next step of 6D pose estimation, yayy

@davideCremona Hi, I met the same issue with you caused by the .ply file vertices order. Would you mind sharing the method to changing the code or .ply file to get the correct result? Thank you very much!

Hi @FoxinSnow , I have added some lines of code that reads the .ply faces and duplicate vertices when needed. It's not optimized but it still works :)

In auto_pose/meshrenderer/gl_utils/geometry.py in function load_meshed(obj_files, vertex_tmp_store_folder, recalculate_normals=False) just after this line inside the for loop: mesh = scene.meshes[0]

Add the following code:

vertices = []

for face in mesh.faces:

vertices.extend([mesh.vertices[face[0]], mesh.vertices[face[1]], mesh.vertices[face[2]]])

vertices = np.array(vertices)This should work (it worked for me). Let me know if something happens :)

@davideCremona Thank you very much for your sharing :)

Hi, I'm experimenting with your repository using T-LESS object number 1.

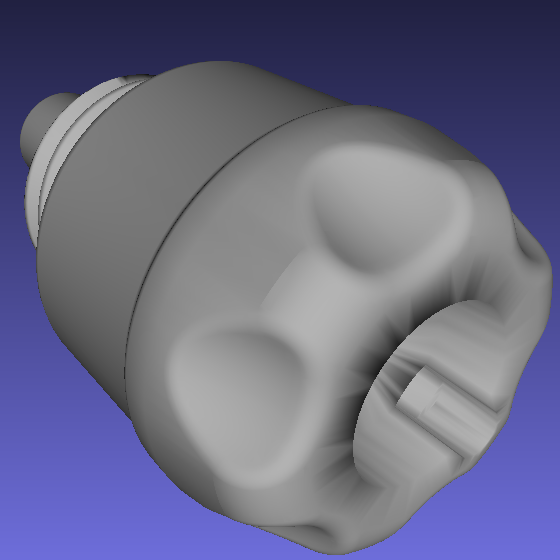

I've created my custom cfg file as described in the readme and trained an AAE with "ae_train". When I explore the output checkpoint images produced by the script during training I'm seeing some strange glitches with the target images as shown here: training_images_9999.png: training_images_19999.png:

training_images_19999.png:

training_images_29999.png:

training_images_29999.png:

I'm wondering if that ugly rendering is an OpenGL fault or because of some modifications I had to do to convert to Python3 but nothing special:

printtoprint()xrangetorange()-in geometry.py line 45 change fromhashed_file_name = hashlib.md5( ''.join(obj_files) + 'load_meshes' + str(recalculate_normals) ).hexdigest() + '.npy'tohashed_file_name = hashlib.md5( (''.join(obj_files) + 'load_meshes' + str(recalculate_normals)).encode('utf-8') ).hexdigest() + '.npy'for i in range(0, N-1, 3):tofor i in range(0, N-2, 3):--> maybe this line is causing that problem? I've changed this because you are accessing vertices[i+2] and N-1 was causing _index out of bounds error.int()forHandWright after theassert(someway their type was float)I'm currently working to figure out what is causing this problem, any idea is precious to me.. thank you in advance!

EDIT

My config file is the following: