I'm not sure how likely it would be for such a specific/custom change to be added to the codebase, but it's relatively simple to make the modifications on your own local copy.

First, the current video runner (run_video.py) doesn't include a frame index, so you'd have to add that in. You'd also want to create a folder to store the PGM files for each separate video (in case you process multiple videos, you don't want them overwriting each other). This can be done by adding some extra code to create the save folder path and start a frame counter just above the existing while loop, something like:

```python
# One output folder per video, named after the video file (without extension)
video_name = os.path.splitext(os.path.basename(filename))[0]
pgm_folder_path = os.path.join(args.outdir, video_name)
os.makedirs(pgm_folder_path, exist_ok=True)

# Counter used to number the saved depth frames
frame_idx = 0
```

And then the only other change would be to save the PGM files and update the frame counter. You can do this by adding some new lines just after the normalized depth image is created:

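```python
# Zero-padded file names (000000.pgm, 000001.pgm, ...) keep the frames in order
pgm_save_path = os.path.join(pgm_folder_path, f"{frame_idx:06}.pgm")
# depth is still a single-channel uint8 image here, so it is written as grayscale
cv2.imwrite(pgm_save_path, depth)
frame_idx += 1
```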
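To check the naming and folder layout outside the full script, here is a minimal standalone sketch of the same logic. It assumes `depth_frames` is a hypothetical iterable of 2-D float depth maps standing in for the model output, and it uses a tiny hand-written binary PGM (P5) writer instead of `cv2.imwrite` so it only needs NumPy. The min-max normalization to 0..255 is assumed to mirror what run_video.py does, with a guard added for flat frames.

```python
import os

import numpy as np


def write_pgm(path, image):
    """Write a 2-D uint8 array as a binary (P5) PGM file."""
    if image.ndim != 2 or image.dtype != np.uint8:
        raise ValueError("expected a 2-D uint8 array")
    height, width = image.shape
    with open(path, "wb") as f:
        # P5 header: magic number, width, height, maximum gray value
        f.write(f"P5\n{width} {height}\n255\n".encode("ascii"))
        f.write(np.ascontiguousarray(image).tobytes())


def save_depth_frames(filename, outdir, depth_frames):
    """Save each depth map as <outdir>/<video name>/<frame_idx:06>.pgm."""
    video_name = os.path.splitext(os.path.basename(filename))[0]
    pgm_folder_path = os.path.join(outdir, video_name)
    os.makedirs(pgm_folder_path, exist_ok=True)

    frame_idx = 0
    for depth in depth_frames:
        # Min-max normalize to 0..255 (guarding against a perfectly flat frame)
        value_range = depth.max() - depth.min()
        if value_range == 0:
            value_range = 1.0
        depth = (depth - depth.min()) / value_range * 255.0
        depth = depth.astype(np.uint8)

        pgm_save_path = os.path.join(pgm_folder_path, f"{frame_idx:06}.pgm")
        write_pgm(pgm_save_path, depth)
        frame_idx += 1
    return frame_idx
```

Calling `save_depth_frames("Wildlife.mp4", "out", frames)` would produce `out/Wildlife/000000.pgm`, `out/Wildlife/000001.pgm`, and so on.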

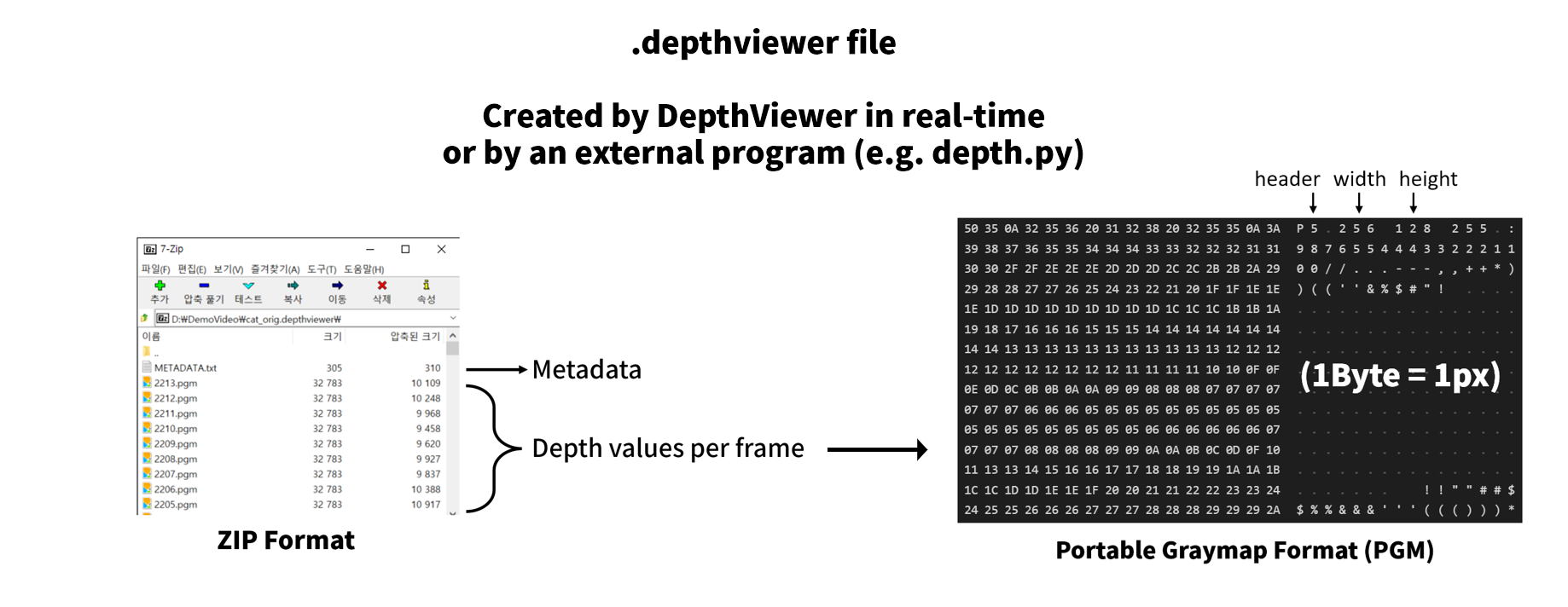

It'd be nice to be able to use Depth Anything V2 in DepthViewer (a 2D to 3D video converter/player for VR/3D displays), but it only supports V1 using this. I'm particularly interested in 2D to 3D video/movie conversion, and considering it might not be possible to get stable, real-time (e.g. 24 FPS) conversion using the highest quality models, the best alternative is to pre-cache the depth map for every frame beforehand. Luckily, DepthViewer already has a cache format for videos, which is basically just a ZIP file containing every raw frame in PGM/PFM format and some metadata. So could you please add an option to run_video.py to output every depth frame into numbered PGM/PFM files, perhaps in a (probably solid) ZIP file?
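A rough sketch of the requested output, assuming each element of a hypothetical `depth_frames` iterable is the raw float depth map for one frame (before the 0..255 normalization). It encodes each frame as a grayscale PFM (`Pf` header, negative scale meaning little-endian, rows stored bottom-to-top) and writes them as numbered entries into one ZIP file. The entry names, six-digit padding and choice of PFM are assumptions, not DepthViewer's confirmed cache layout, and no metadata file is written. Note that Python's `zipfile` compresses each entry separately, so this is not a solid archive in the 7-Zip sense.

```python
import zipfile

import numpy as np


def encode_pfm(depth):
    """Encode a 2-D float array as a grayscale PFM ("Pf") byte string."""
    depth = np.asarray(depth, dtype="<f4")  # little-endian float32
    if depth.ndim != 2:
        raise ValueError("expected a 2-D array")
    height, width = depth.shape
    # A negative scale factor marks the pixel data as little-endian
    header = f"Pf\n{width} {height}\n-1.0\n".encode("ascii")
    # PFM stores rows from bottom to top, so flip vertically before writing
    return header + np.ascontiguousarray(np.flipud(depth)).tobytes()


def write_depth_zip(zip_path, depth_frames):
    """Store each raw depth frame as <frame_idx:06>.pfm inside a single ZIP."""
    count = 0
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for frame_idx, depth in enumerate(depth_frames):
            zf.writestr(f"{frame_idx:06}.pfm", encode_pfm(depth))
            count += 1
    return count
```

Writing PFM keeps the full float precision of the model output, whereas 8-bit PGM quantizes each frame to 256 levels; which one DepthViewer expects for a given cache would need to be checked against its template.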

Then we could just manually add a metadata file from a template to use it in DepthViewer 👀👌

If you need a sample, here's the Wildlife video and the .depthviewer file generated for it by DepthViewer's built-in model.