Open longnguyenQB opened 2 years ago

Hello, I’m new to deep learning. Please help me fix this error. My model:

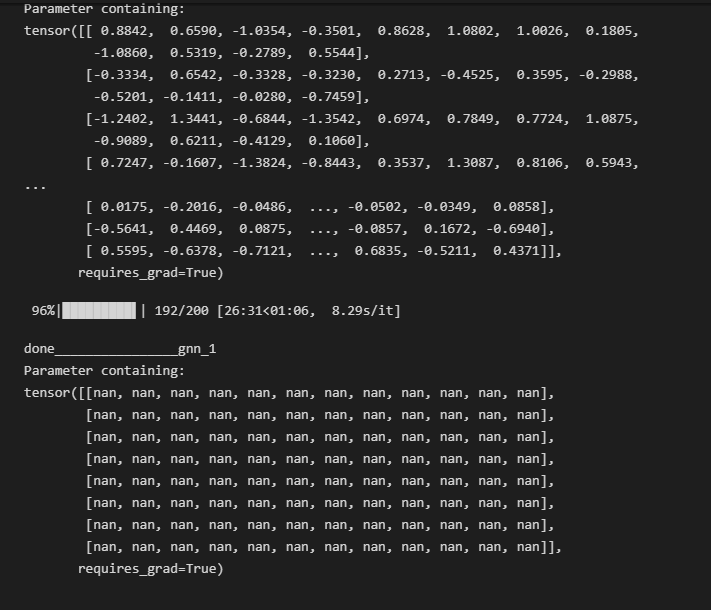

    HybridGNN(
      (leaky_relu): LeakyReLU(negative_slope=0.01)
      (gnn_0): SpGAT(
        (attention_0): SpGATLayer (8 -> 12)
        (attention_1): SpGATLayer (8 -> 12)
        (attention_2): SpGATLayer (8 -> 12)
        (out_att): SpGATLayer (36 -> 72)
      )
      (gnn_1): SpGAT(
        (attention_0): SpGATLayer (8 -> 12)
        (attention_1): SpGATLayer (8 -> 12)
        (attention_2): SpGATLayer (8 -> 12)
        (out_att): SpGATLayer (36 -> 72)
      )
      (gnn_2): SpGAT(
        (attention_0): SpGATLayer (8 -> 12)
        (attention_1): SpGATLayer (8 -> 12)
        (attention_2): SpGATLayer (8 -> 12)
        (out_att): SpGATLayer (36 -> 72)
      )
      (gnn_3): SpGAT(
        (attention_0): SpGATLayer (8 -> 12)
        (attention_1): SpGATLayer (8 -> 12)
        (attention_2): SpGATLayer (8 -> 12)
        (out_att): SpGATLayer (36 -> 72)
      )
      (gnn_4): SpGAT(
        (attention_0): SpGATLayer (8 -> 12)
        (attention_1): SpGATLayer (8 -> 12)
        (attention_2): SpGATLayer (8 -> 12)
        (out_att): SpGATLayer (36 -> 72)
      )
      (gnn_5): SpGAT(
        (attention_0): SpGATLayer (8 -> 12)
        (attention_1): SpGATLayer (8 -> 12)
        (attention_2): SpGATLayer (8 -> 12)
        (out_att): SpGATLayer (36 -> 72)
      )
      (gnn_6): SpGAT(
        (attention_0): SpGATLayer (8 -> 12)
        (attention_1): SpGATLayer (8 -> 12)
        (attention_2): SpGATLayer (8 -> 12)
        (out_att): SpGATLayer (36 -> 72)
      )
      (gnn_7): SpGAT(
        (attention_0): SpGATLayer (8 -> 12)
        (attention_1): SpGATLayer (8 -> 12)
        (attention_2): SpGATLayer (8 -> 12)
        (out_att): SpGATLayer (36 -> 72)
      )
      (fc): Sequential(
        (0): LazyLinear(in_features=0, out_features=72, bias=True)
        (1): LeakyReLU(negative_slope=0.01)
        (2): Linear(in_features=72, out_features=36, bias=True)
        (3): ReLU()
        (4): Linear(in_features=36, out_features=2, bias=True)
      )
    )

This is the error I get:
