Prior art, for addition and subtraction: Investigating the Limitations of Transformers with Simple Arithmetic Tasks https://arxiv.org/abs/2102.13019

Closed by leogao2 1 year ago

The paper's authors concluded from their experiments that models couldn't learn addition rules that hold independently of the length of the numbers seen in training. Is that observation concrete enough to answer the RFP?

We know LLMs can learn to write code. I suspect they would do extremely well on arithmetic if you just do prompt engineering such as "//javascript to solve 3+5-3" or something along those lines, and then run the generated code.
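To make the code-prompt idea concrete, here is a minimal sketch in Python rather than JavaScript. The prompt format and the names `build_prompt` and `safe_eval` are hypothetical, and no model is called: the evaluator only shows how an expression emitted by a model could be checked outside the network without resorting to `eval()`.

```python
import ast
import operator

# Map AST operator nodes to the arithmetic they perform.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def build_prompt(expression: str) -> str:
    """Wrap an expression in a code-style prompt (hypothetical format)."""
    return f"# python to solve {expression}\nresult ="


def safe_eval(expression: str):
    """Evaluate +, -, *, / over numeric literals without calling eval()."""

    def walk(node):
        if isinstance(node, ast.Expression):
            return walk(node.body)
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -walk(node.operand)
        raise ValueError(f"unsupported expression: {expression!r}")

    return walk(ast.parse(expression.strip(), mode="eval"))


if __name__ == "__main__":
    print(build_prompt("3+5-3"))
    print(safe_eval("3+5-3"))
```

Whether a given model actually completes such a prompt with correct code is an empirical question this sketch does not answer.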

In the vein of this problem though, thinking about how DeepMind used database lookups to shrink their LM and boost performance (https://deepmind.com/blog/article/language-modelling-at-scale), what if you had two smaller language models: one trained to automatically identify math problems, and the other trained to internally generate the prompt (i.e. the code) that solves them? The code would be executed outside of the neural net and its result passed back in. A final language model would then read the original question, the predicted math equation, and the generated answer.

Each submodel can be trained independently of the others. The model at step 4 would most resemble existing language models, but it would not attempt to generate the answer itself like current LLMs; it would instead rely on the submodels to generate the answer. As research advances, the symbolic programming interpreter could perhaps be replaced with a fully neural module, or a new type of neural network that specializes in program generation.
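A rough sketch of how the stages described above could be wired together. Every function here is a hypothetical stand-in: regular expressions play the role of the two small learned models, a tiny evaluator plays the role of the external interpreter, and a string template plays the role of the final language model. It only illustrates the data flow, not a trained system.

```python
import re
from fractions import Fraction

# Stand-in for the learned "is this a math problem?" and
# "what expression should be run?" submodels (assumption: plain regex).
EXPR = re.compile(r"\d+(?:\s*[-+*/]\s*\d+)+")


def detect_math(text: str) -> bool:
    """Stage 1 stand-in: does the text contain an arithmetic expression?"""
    return EXPR.search(text) is not None


def generate_expression(text: str):
    """Stage 2 stand-in: extract the expression to execute, or None."""
    match = EXPR.search(text)
    return match.group(0) if match else None


def execute(expression: str) -> Fraction:
    """Stage 3: evaluate outside the network, with * and / binding tighter."""
    tokens = re.findall(r"\d+|[-+*/]", expression)
    terms = [Fraction(tokens[0])]
    signs = []
    for i in range(1, len(tokens), 2):
        op, value = tokens[i], Fraction(tokens[i + 1])
        if op == "*":
            terms[-1] *= value
        elif op == "/":
            terms[-1] /= value
        else:
            signs.append(op)
            terms.append(value)
    total = terms[0]
    for sign, term in zip(signs, terms[1:]):
        total = total + term if sign == "+" else total - term
    return total


def answer(question: str) -> str:
    """Stage 4 stand-in: combine question, expression, and computed result."""
    if not detect_math(question):
        return "(no arithmetic detected; defer to the base language model)"
    expression = generate_expression(question)
    return f"{expression} = {execute(expression)}"


if __name__ == "__main__":
    print(answer("What is 3 + 5 - 3?"))
```

Because each stage is a separate function with a plain-text interface, any one of them could be swapped for a trained model without touching the others, which is the point of training the submodels independently.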

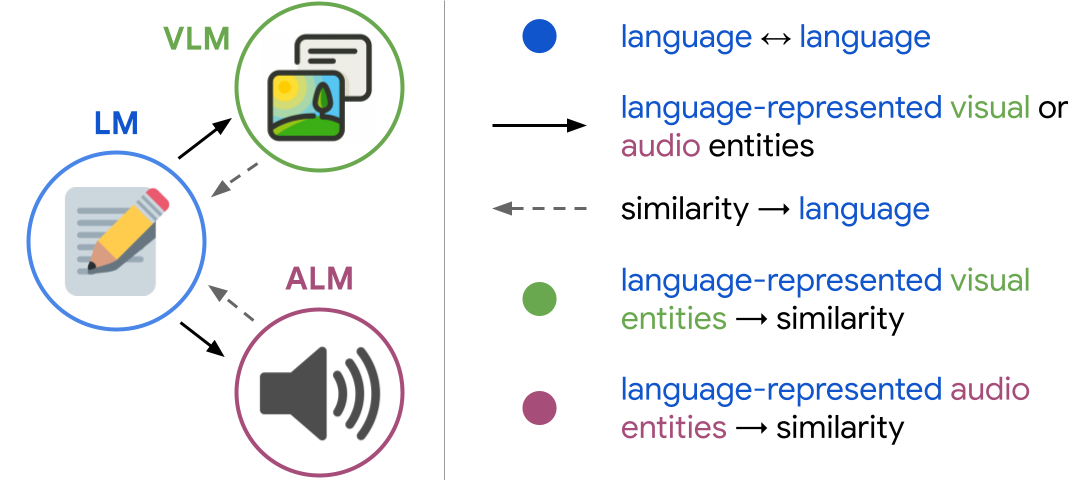

The other idea behind the submodels is that something like CLIP can be plugged in and trained to produce similar text encodings, so the same math submodels should transfer to VQA tasks.

This approach would also allow for multi-step computations.

https://arxiv.org/abs/2203.13224

An interesting paper here that I think could inform an approach to this issue

looks like Google took a whack at arithmetic by adding a calculator

http://ai.googleblog.com/2022/04/pathways-language-model-palm-scaling-to.html

For example, with 8-shot prompting, PaLM solves 58% of the problems in GSM8K, a benchmark of thousands of challenging grade school level math questions, outperforming the prior top score of 55% achieved by fine-tuning the GPT-3 175B model with a training set of 7500 problems and combining it with an external calculator and verifier.

Further work here, related to robotics, and having models talk to each other, with a very similar circular diagram as above: https://socraticmodels.github.io/

link to paper https://arxiv.org/abs/2204.00598

some other work in this area

https://www.ai21.com/blog/jurassic-x-crossing-the-neuro-symbolic-chasm-with-the-mrkl-system

Here’s a user of GPT-Neo that uses an extensive prompt to get reliable arithmetic

This is GPT-3, presumably the 175B model, not GPT-Neo

> The paper's authors concluded from their experiments that models couldn't learn addition rules that hold independently of the length of the numbers seen in training. Is that observation concrete enough to answer the RFP?

I think it is. What additional observations are needed?

This paper reminds me of the following one, where the author claims that BERT models cannot deductively reason, i.e. use rules, due to "statistical features". https://arxiv.org/abs/2205.11502

But then we saw how GPT-3 can do arithmetic reasonably well if the steps are explicitly written out.

Is there a way to reconcile both of these facts? I know this is not an apples-to-apples comparison, but it seems that models would have to elaborate steps sequentially in order to reason in general, instead of shoving everything into the input and expecting the correct output all at once.
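To illustrate what "steps explicitly written out" could look like for addition, here is a small sketch that produces a digit-by-digit trace with carries. The trace wording and the function name `addition_scratchpad` are my own assumptions, not the format used in the prompt mentioned above; it assumes non-negative integers.

```python
def addition_scratchpad(a: int, b: int):
    """Return (steps, result) for a + b, one written-out step per digit column.

    Assumes a and b are non-negative integers.
    """
    xs, ys = str(a)[::-1], str(b)[::-1]  # least significant digit first
    steps, digits, carry = [], [], 0
    for i in range(max(len(xs), len(ys))):
        x = int(xs[i]) if i < len(xs) else 0
        y = int(ys[i]) if i < len(ys) else 0
        total = x + y + carry
        steps.append(
            f"{x} + {y} + carry {carry} = {total}, "
            f"write {total % 10}, carry {total // 10}"
        )
        digits.append(str(total % 10))
        carry = total // 10
    if carry:
        steps.append(f"final carry {carry}, write {carry}")
        digits.append(str(carry))
    return steps, int("".join(reversed(digits)))


if __name__ == "__main__":
    trace, result = addition_scratchpad(478, 256)
    for line in trace:
        print(line)
    print("answer:", result)
```

Each step depends only on two digits and the previous carry, so the per-step computation stays the same size no matter how long the numbers get; only the number of steps grows.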

What's curious is that perhaps LLMs can interact with external systems just like humans can:

But that starts from making it understand its own limitations, which is what I've done here with this prompt.

Background

If really overpowered models can't learn something basic like arithmetic well (assuming all-arithmetic training set, sane tokenization w/out BPEs, etc), that implies something about what LMs can and can't learn. If present day models can't learn a simple task with the huge advantage of massive overparameterization and a purely-task dataset and favorable tokenization, then we should be skeptical that future LMs will be superhuman at language tasks. Somewhat tangentially related in spirit to #21.

What to plot?

For each of {+, -, *, /} x [1, 10] digits, train something like a 6B+ model with character-level tokenization on just math problems. Plot accuracy and perplexity for each. It would also be interesting to look at out-of-distribution generalization (i.e., train on 5 digits, test on 6). Maybe also repeat for a bunch of smaller models too, so we have a trendline to look at.
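A minimal sketch of the data side of this experiment, under several assumptions of mine: the `a+b=` prompt format, integer (floor) division for `/`, and the helper names are all hypothetical, and `predict` is a placeholder for whatever trained model gets evaluated.

```python
import random
from operator import add, floordiv, mul, sub

# Assumption: "/" means integer (floor) division so targets stay digit strings.
OPS = {"+": add, "-": sub, "*": mul, "/": floordiv}


def random_operand(digits: int, rng: random.Random) -> int:
    """Sample an integer with exactly `digits` digits (0-9 when digits == 1)."""
    low = 10 ** (digits - 1) if digits > 1 else 0
    return rng.randint(low, 10 ** digits - 1)


def make_example(op: str, digits: int, rng: random.Random):
    """Return a (prompt, target) pair such as ("12+34=", "46")."""
    a, b = random_operand(digits, rng), random_operand(digits, rng)
    if op == "/":
        b = max(b, 1)  # avoid division by zero for one-digit operands
    return f"{a}{op}{b}=", str(OPS[op](a, b))


def tokenize(text: str):
    """Character-level tokenization: one token per character, no BPE merges."""
    return list(text)


def exact_match_accuracy(predict, examples) -> float:
    """Fraction of prompts for which predict(prompt) equals the target exactly."""
    hits = sum(predict(prompt) == target for prompt, target in examples)
    return hits / len(examples)


if __name__ == "__main__":
    rng = random.Random(0)
    train = [make_example("+", 5, rng) for _ in range(1000)]  # in-distribution
    test_ood = [make_example("+", 6, rng) for _ in range(200)]  # one digit longer
    print(tokenize(train[0][0]), len(test_ood))
```

Looping over every operator and every digit count from 1 to 10 with these helpers gives the grid described above, and the 5-digit train versus 6-digit test split is the out-of-distribution check.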

Then maybe we can also pick the model apart to see why it's failing at certain tasks. I bet that for the multiplication tasks in particular the number of layers will be very important, since there's a certain number of sequential steps that need to happen.
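As a point of reference for the "sequential steps" intuition, here is schoolbook long multiplication written so that the steps are visible. This only illustrates the classical algorithm (one partial product per digit of the multiplier, then a running sum); it is not a claim about what a transformer computes internally. It assumes non-negative integers, and the function name is hypothetical.

```python
def long_multiplication(a: int, b: int):
    """Return (partial_products, total) for a * b using the schoolbook method.

    Assumes a and b are non-negative integers.
    """
    partials = []
    # One partial product per digit of b, shifted by that digit's place value.
    for place, digit_char in enumerate(reversed(str(b))):
        partials.append(a * int(digit_char) * 10 ** place)
    # The partial products are then accumulated one after another.
    total = 0
    for partial in partials:
        total += partial
    return partials, total


if __name__ == "__main__":
    partials, total = long_multiplication(123, 45)
    print(partials, total)
```

The number of partial products grows with the number of digits in the multiplier, which is one way to see why depth might matter more for multiplication than for addition.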