Hi @cgpeltier!

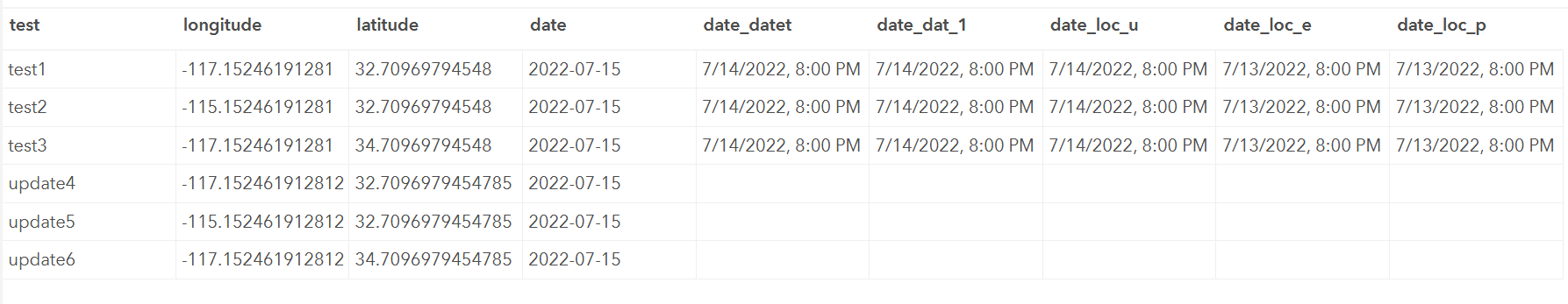

I ran using the code in the other github issue you posted and this is my result from that:

I then added the code above and got an error related to the dtypes. This seems to come from the arcpy side, as stated in the first issue, so we will wait and see what they say.

Once they reply, I'll test this again if there is a fix and let you know what happens.
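In the meantime, here is a minimal sketch of the datetime-to-timestamp conversion the report below describes, using only the Python standard library. It does not call `to_featureset()` or `edit_features` and is not a fix. The helper names `to_epoch_ms` and `from_epoch_ms` are hypothetical, and it assumes that naive datetimes are in UTC and that the timestamps are milliseconds since the Unix epoch (which is, as far as I know, how ArcGIS REST date values are encoded).

```python
from datetime import datetime, timedelta, timezone

# Reference point for Unix timestamps.
EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def to_epoch_ms(dt: datetime) -> int:
    """Convert a datetime to whole milliseconds since the Unix epoch."""
    if dt.tzinfo is None:
        # Assumption: naive datetimes are treated as UTC.
        dt = dt.replace(tzinfo=timezone.utc)
    # Integer division of timedeltas avoids float rounding.
    return (dt - EPOCH) // timedelta(milliseconds=1)


def from_epoch_ms(ms: int) -> datetime:
    """Convert milliseconds since the Unix epoch back to an aware UTC datetime."""
    return EPOCH + timedelta(milliseconds=ms)


if __name__ == "__main__":
    original = datetime(2021, 3, 1, 12, 30, 0)
    as_ms = to_epoch_ms(original)
    print(as_ms)                 # the integer a date column would turn into
    print(from_epoch_ms(as_ms))  # the same instant, as a UTC datetime
```

Comparing a value before and after the round trip is a quick way to check whether an integer in a date column is an epoch timestamp or something else.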

Describe the bug

When attempting to append data by converting features in a spatially enabled dataframe to a featureset (using `to_featureset()`) and then calling `edit_features` to update an AGOL layer, datetime attributes are converted to Unix timestamps. If an AGOL layer contains date columns, then that data is not appended to the hosted feature service. The code below is connected to another GitHub issue.

To Reproduce

Steps to reproduce the behavior:

Screenshots

Expected behavior

Datetime columns are maintained as dates and appended to AGOL successfully (this just may not be possible via `to_featureset`/`edit_features`; if so, please let me know! 😄).

Platform (please complete the following information):