I'm not sure how many features of the existing Ruby version at https://github.com/HXL-CPLP/Auxilium-Humanitarium-API we will implement as built-in `--objectivum-formulam` support, as in:

`hxltmcli schemam-un-htcds.tm.hxl.csv --objectivum-formulam formulam/exemplum-linguam.🗣️.json --objectivum-linguam por-Latn@pt > resultatum/formulam/exemplum-linguam.por-Latn.json`

Maybe it will be just a subset. It may not have all features, but at least it could be used sooner as part of GitHub Actions or other automations for simpler cases.
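To make "a subset" a bit more concrete, here is a minimal Python sketch of the simplest case: replace concept placeholders in a template with the term for one language taken from an HXLTM table. The `{{ CONCEPT }}` placeholder syntax, the helper names `load_terms` and `fill`, and the exact hashtags used (`#item+conceptum+codicem`, `#item+rem+i_por+is_latn`) are assumptions for illustration only; this is not the real `--objectivum-formulam` syntax nor how the HAPI Ruby code works.

```python
import csv
import io
import re

# Hypothetical placeholder syntax: {{ CONCEPT_CODE }}.
# Real HXLTM/HAPI templates use their own syntax.
PLACEHOLDER = re.compile(r"\{\{\s*([^}\s]+)\s*\}\}")


def load_terms(hxl_csv: str, lang_attrs: str) -> dict:
    """Map concept code -> term for one language.

    Assumes an HXL layout with an optional human-readable header row followed
    by a hashtag row, the concept code in '#item+conceptum+codicem' and the
    term in '#item+rem' + lang_attrs (e.g. '+i_por+is_latn').
    """
    rows = list(csv.reader(io.StringIO(hxl_csv)))
    # Simplification: the hashtag row is the first row whose first cell starts with '#'.
    tag_idx = next(i for i, r in enumerate(rows) if r and r[0].startswith("#"))
    tags = rows[tag_idx]
    code_col = tags.index("#item+conceptum+codicem")
    term_col = tags.index("#item+rem" + lang_attrs)
    return {r[code_col]: r[term_col] for r in rows[tag_idx + 1:] if r}


def fill(template: str, terms: dict) -> str:
    # Unknown concept codes are left untouched instead of raising an error.
    return PLACEHOLDER.sub(lambda m: terms.get(m.group(1), m.group(0)), template)


# Tiny illustrative table, not real HXLTM data.
SAMPLE = """Codicem,Rem (por-Latn),Rem (eng-Latn)
#item+conceptum+codicem,#item+rem+i_por+is_latn,#item+rem+i_eng+is_latn
L1,olá,hello
L2,mundo,world
"""

if __name__ == "__main__":
    terms = load_terms(SAMPLE, "+i_por+is_latn")
    print(fill('{"greeting": "{{ L1 }}, {{ L2 }}!"}', terms))
```

Leaving unknown placeholders as-is (rather than failing) is one possible choice for automation use; a stricter CLI might prefer to exit with an error instead.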

The current

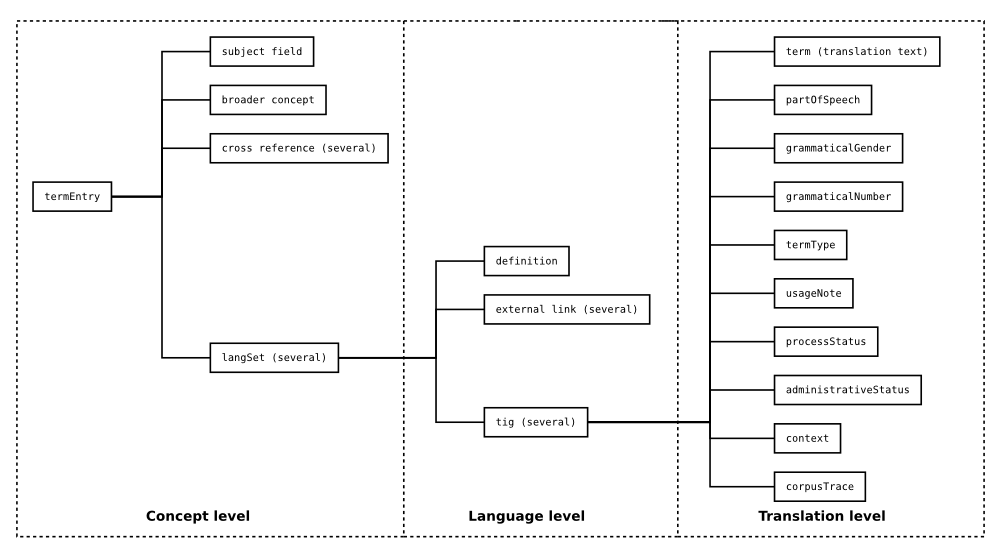

hxltmcli, as documented on https://hdp.etica.ai/hxltm/archivum/, allows several exporters of the complete dataset. But some of the "more basic" functionality of https://github.com/HXL-CPLP/Auxilium-Humanitarium-API / https://hapi.etica.ai/, were is possible to use the HXLTM as terms to create custom formats, still need to use Ruby code.Either the Hapi or the

hxltmcli(or a new cli) must be able to allow the extra fields (like the term definitions) to be used on templates.
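One rough way extra fields could be exposed to templates: let a placeholder name both the concept and the HXL hashtag of the column to read, so definitions (or any other column) become available, not only terms. The `{{ CONCEPT #hashtag }}` syntax, the `fill_fields` helper, and the definition hashtag below are hypothetical, chosen only to illustrate the idea.

```python
import re

# Hypothetical syntax: {{ CONCEPT_CODE #hxl+hashtag }} selects any column
# of a concept row, e.g. a term or a definition.
FIELD_PLACEHOLDER = re.compile(r"\{\{\s*(\S+)\s+(#[^\s}]+)\s*\}\}")


def fill_fields(template: str, concepts: dict) -> str:
    """concepts maps concept code -> {hashtag: value}.

    Placeholders whose concept or hashtag is missing are left unchanged.
    """
    def resolve(m):
        row = concepts.get(m.group(1), {})
        return row.get(m.group(2), m.group(0))

    return FIELD_PLACEHOLDER.sub(resolve, template)


# Illustrative data; the definition hashtag is an assumption, not HXLTM's documented one.
CONCEPTS = {
    "L1": {
        "#item+rem+i_por+is_latn": "olá",
        "#item+rem+definitionem+i_por+is_latn": "Saudação informal.",
    },
}

if __name__ == "__main__":
    tpl = ('{"term": "{{ L1 #item+rem+i_por+is_latn }}", '
           '"definition": "{{ L1 #item+rem+definitionem+i_por+is_latn }}"}')
    print(fill_fields(tpl, CONCEPTS))
```

Addressing columns by full hashtag keeps the template engine generic: any field present in the HXLTM file is usable without the tool needing to know about it in advance.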