It looks like it is finding a peak as a connected object that goes across a whole module. How high is the background level compared to your threshold levels?

Closed jadball closed 3 years ago

It looks like it is finding a peak as a connected object that goes across a whole module. How high is the background level compared to your threshold levels?

Thanks, that has corrected most of the problems. Our background was around 7000, so thresholding at 10000 fixed it. However, near thin module gaps, we are still getting incorrect peak position assignments:

What do you think is the best way of cleaning them up? Perhaps just masking to avoid them?

Here's another example in y/z for clarity:

I think you are getting an incorrect position whenever a peak is connected to the mask?

One option is to set the gap value to a high number (> threshold) and then everything will be connected to that mask object making a single 'peak'. You then just delete the one 'peak' which has a huge number of pixels.

e.g. data_to_peaksearch = np.where( img == maskvalue, 10001, img)

The more recent dectris detectors are using gap values of (unsigned)(-1) which can make this happen semi-automatically.

Our gap values are all masked to -1 in the original .cbf files. Is there a way to detect whether a peak is "connected" to any pixels with that value? We'd rather not alter the original images.

I think you would need to edit some code somewhere ?

The cleanest way to do this at the moment is probably via sandbox/hdfscan.py. It offers you a wrapper script that you can use to change numbers in place when reading data, also to remap new file formats etc.

Another option would be to edit ImageD11/correct.py to make the first line read as:

data_object.data = data_object.data.view( np.uint32 )

By casting to unsigned then (-1) becomes (2^32-1) and always above any threshold. At some later stage you would probably want to delete all peaks with IMax_int == (2^32-1). I guess this could be added as some new command line option.

Alternatively you could load a mask, grow it by one pixel (scipy.ndimage.binary_dilate) and then try to figure out the intersections somewhere inside labelimage.

In the longer term, I am try to stop using this peaksearch code and just save the "active" pixels into a sparse array. But that is not really finished yet.

... maybe the grow-by-one mask approach could be useful and not all that bad to implement

Perfect! That seems to have worked very well. I did the following:

Added data_object.data = data_object.data.view( numpy.uint32 ) to the top of correct.py

Thresholded above highest recorded background

Processed resultant peaks file:

In [29]: peaks = columnfile("ceo2_t15000.flt")

In [30]: peaks.filter(peaks.IMax_int < 4294967294)

In [31]: print(peaks.IMax_int)

[32914. 51443. 15534. ... 15784. 15233. 16624.]

In [32]: print(peaks.IMax_int.max())

2221822.0

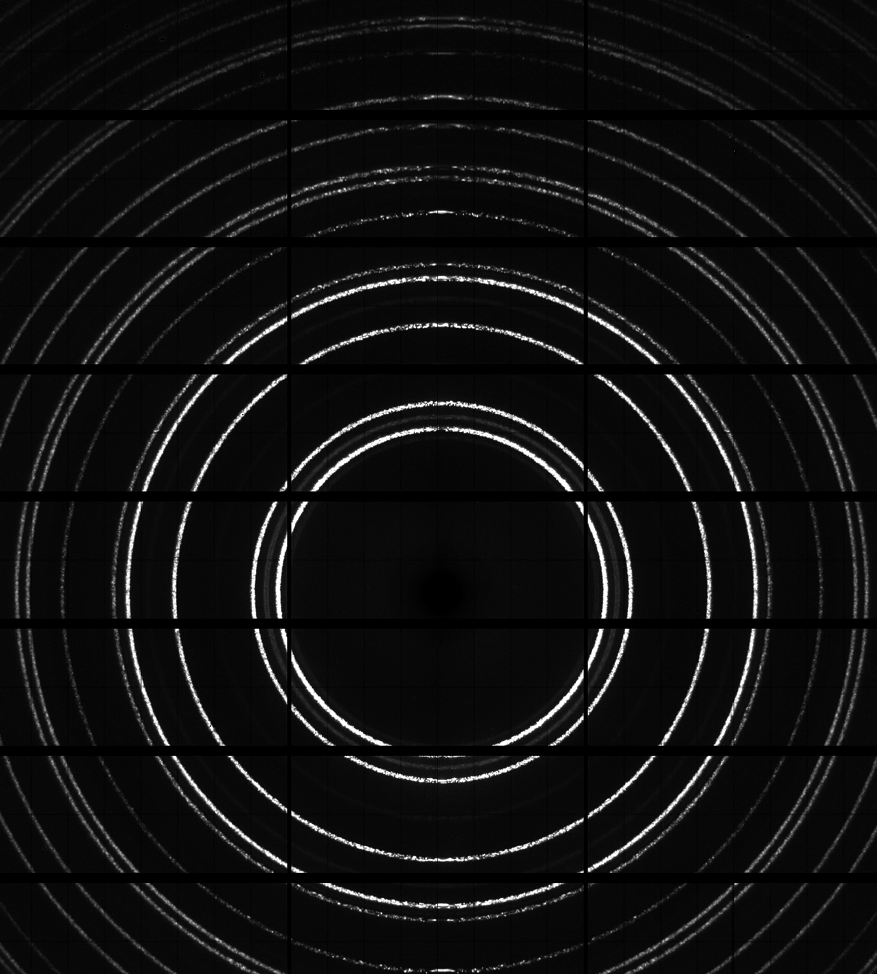

In [33]: peaks.writefile("filtered_15k.flt")This looks very clean now:

Many thanks for your help!

Hello,

Not sure whether this is a bug or something I am doing wrong. Below is a single frame powder diffraction image from our detector:

Now, I use the following commands to generate peaks from it:

Here's a quick look at c0000.edf:

It looks great! However, when I plot the peaks in ImageD11:

Am I doing something wrong? To me, it looks like peaksearch isn't counting the module dead zones as actual regions of the image?

Any inputs welcome.

Thanks!