Finally, I found the relevant parameter and replaced

`net.set_feed(im_in, lay_gt, label_gt, i)`

with

`net.set_feed(im_in, lay_gt, label_gt, i, is_training=False)`
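One plausible explanation for why this flag matters: the network may contain dropout (and possibly batch normalization) layers gated by `is_training`. That is an assumption, since the model code is not shown here. In training mode, dropout randomly zeroes activations on every forward pass, so repeated predictions differ. The hypothetical `dropout` function below is a pure-Python sketch of inverted dropout, not the repo's implementation. It only illustrates the difference between the two modes.

```python
import random


def dropout(values, rate, is_training):
    """Hypothetical inverted-dropout sketch: random masking only in training mode."""
    if not is_training or rate == 0.0:
        # Inference mode: identity, so outputs are deterministic.
        return list(values)
    keep = 1.0 - rate
    # Training mode: each unit is zeroed with probability `rate`, and
    # survivors are scaled by 1/keep so the expected value is unchanged.
    return [v / keep if random.random() < keep else 0.0 for v in values]


features = [0.5, 1.0, 1.5, 2.0]

# With is_training=True, two calls will usually differ.
train_a = dropout(features, rate=0.5, is_training=True)
train_b = dropout(features, rate=0.5, is_training=True)

# With is_training=False, repeated calls are identical.
infer_a = dropout(features, rate=0.5, is_training=False)
infer_b = dropout(features, rate=0.5, is_training=False)
print(infer_a == infer_b)  # True
```

Batch normalization behaves similarly. In training mode it normalizes with statistics of the current batch, while in inference mode it uses stored moving averages. If those averages were not trained or restored correctly, inference-mode predictions can be stable but poor.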

Now the results are stable from run to run, but the prediction quality is very low, so I think there is still a problem with the network. Am I doing something wrong with your code? Maybe I need to add some preprocessing steps before feeding images into the net, or something like that? Do you have any idea?

BTW, could you please provide the names of the samples whose images are shown on the repo page (in README.md)? It would be nice to have them for both the vanilla and rcnn architectures. Thanks!

Hi! I have a problem with reproducibility of the predictions on inference. Below I attached the code I use to make predictions on samples from the file you provided:

sample.npz.I made one more function in addition to yours

trainandtestfor inference:and one more change in

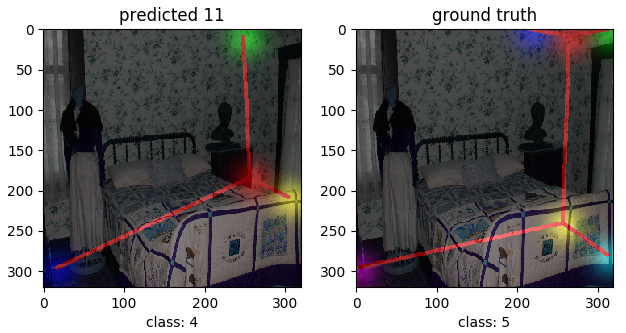

main:Then I noticed that after each time I run inference i get different results. For example, couple of predictions for image with index 11 in

sample.npz:P.S. I used the following command to run the inference:

python main.py --inference 0 --net vanilla --out_path pretrained_model --gpu 1.So, the question is how to make results on inference reproducible?
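A quick way to test whether the run-to-run differences come from seedable randomness is to fix the seed and compare several runs. The sketch below uses a hypothetical `run_inference` stand-in, not the repo's code, whose noise comes from Python's `random`. In a TensorFlow 1.x project, the analogous step would be calling `random.seed`, `np.random.seed` and `tf.set_random_seed` before the graph is built. Seeding alone may not remove GPU-level nondeterminism, and it does not replace running with `is_training=False`.

```python
import random


def run_inference(image, seed=None):
    """Hypothetical stand-in for a forward pass with a stochastic layer."""
    rng = random.Random(seed)  # same seed -> same sequence of draws
    # Simulate dropout-style noise applied to each input value.
    return [x * (2.0 if rng.random() < 0.5 else 0.0) for x in image]


def is_reproducible(image, seed, runs=3):
    """Run inference several times with the same seed and compare outputs."""
    outputs = [run_inference(image, seed=seed) for _ in range(runs)]
    return all(out == outputs[0] for out in outputs)


image = [0.1, 0.2, 0.3, 0.4]
print(is_reproducible(image, seed=42))  # True
```

If outputs still differ with every seed fixed, the randomness is coming from somewhere the seeds do not control, such as a layer left in training mode.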