I am in complete agreement with this view. Looking at real mobile/3G user data, it's obvious that Lighthouse is overly pessimistic about page performance, and by a huge margin. While the suggestions from Lighthouse are really useful, it's important not to obsess over 'perfect' web pages when they are technically and commercially impossible to create. Awful code can run quickly enough on average hardware and network conditions, so fairly decent code should be scored fairly.

Would it be possible to tune the calculated score based on averages of real sites or, even better, real data? In my personal experience/opinion, I'd give cnn around 75, but I've just run it through Lighthouse and got 4!
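To make that suggestion concrete, here is a rough sketch of what scoring against real data could look like: a page's score is simply the share of sampled real-world pages that are slower than it. This is not how Lighthouse computes its score. The function name `percentile_score` and the sample timings are hypothetical and only there for illustration.

```python
from bisect import bisect_right


def percentile_score(value_ms, field_samples_ms):
    """Return a 0-100 score: the percentage of sampled pages slower than value_ms.

    field_samples_ms is assumed to be a list of load timings (in ms)
    collected from real sites; both names are hypothetical.
    """
    ordered = sorted(field_samples_ms)
    if not ordered:
        raise ValueError("need at least one field sample")
    # Count samples that are as fast as or faster than the page under test.
    faster_or_equal = bisect_right(ordered, value_ms)
    slower = len(ordered) - faster_or_equal
    return round(100 * slower / len(ordered))


# Made-up sample timings, purely illustrative (not measured data).
samples = [2000, 4000, 6000, 8000, 10000]
print(percentile_score(5000, samples))
```

With a scheme like this, a page that beats most real sites would land in the green by construction, regardless of how far it is from a theoretical ideal.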

While the original poster is having problems with internal communication, some companies seem to be using this score to make commercial decisions, so it's having a real-world impact.

I'm posting this as "other". It may be in the wrong place, but it does say "post anything on your mind about Lighthouse", so I hope this is welcome here. If not, feel free to remove it.

My discussion/feedback is about Lighthouse scoring and how it relates to PageSpeed Insights. In my experience, PageSpeed Insights is traditionally used by business people and marketeers to quickly get a sense of the performance of a web experience. These people are often not (performance) engineers and therefore struggle to interpret the results in the right context. They pretty much take the score number and color and leave it at that.

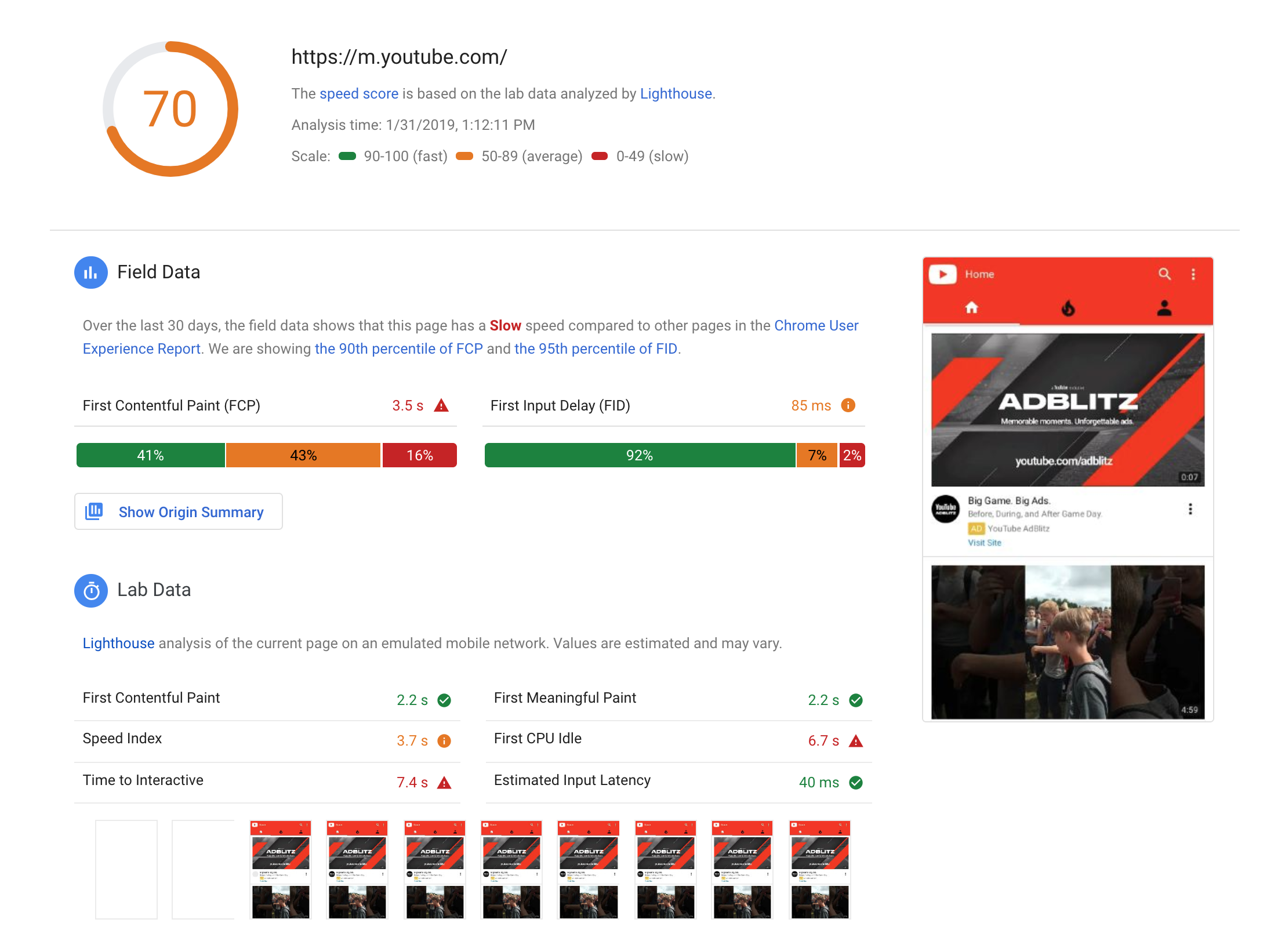

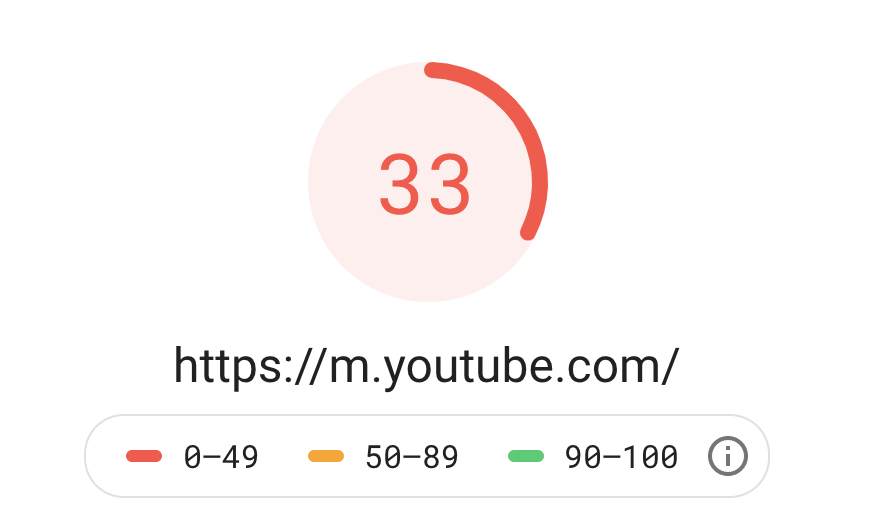

For these stakeholders, the current model leads to consistently awful results on mobile. A few examples:

- https://developers.google.com/speed/pagespeed/insights/?url=cnn.com
- https://developers.google.com/speed/pagespeed/insights/?url=https%3A%2F%2Fnew.siemens.com%2Fglobal%2Fen.html
- https://developers.google.com/speed/pagespeed/insights/?url=youtube.com
- https://developers.google.com/speed/pagespeed/insights/?url=https%3A%2F%2Fwww.dell.com
- https://developers.google.com/speed/pagespeed/insights/?url=https%3A%2F%2Fwww.amazon.com
- https://developers.google.com/speed/pagespeed/insights/?url=https%3A%2F%2Fwww.walmart.com

I'm not cherry-picking here; pretty much everybody seems to be in the red when it comes to mobile performance. This paints a bleak picture of mobile performance being awful and problematic across pretty much the whole web.

That may not be entirely false, yet I believe it is overstated. The mobile-first thresholds of Lighthouse are not only aggressive in themselves (as in, almost impossible to achieve technically), they also typically apply to a minority audience for many websites, the 10-20%. If giant digital teams like Amazon or even Google itself cannot come close to green, what hope is there?

I believe the current scoring model is overly harsh, to the point where it is detrimental. If everybody scores deep in the red and is unlikely ever to score in the green, I think the thresholds are counterproductive. Even companies that take performance seriously (as an example: last year our team shaved seconds off our load times) are "rewarded" with their website still being terribly slow on mobile, if PageSpeed Insights is to be believed. Business stakeholders then weaponize the score.

I don't necessarily disagree that the mobile web is pretty slow in general, nor do I disagree with Lighthouse putting an emphasis on mobile-first performance. I disagree with taking the most aggressive performance thresholds possible and communicating them widely to non-technical stakeholders as if they represent mobile performance.

I know PageSpeed Insights does contain some of the nuances that put the number in perspective, but as I said, that's not how it is used. It's a business/marketeer tool, not an engineering tool.

I believe the way PageSpeed Insights currently reports performance is too harsh and miscommunicates performance to non-technical stakeholders. As a result, the report loses its credibility and causes a lot of confusion or even frustration. Furthermore, the targets seem unachievable in the real world, unless you start over and create a two-page app, which is not what the web is.

I don't expect some kind of immediate solution; consider it food for thought. If everybody is red and green is impossible, I think it is reasonable to question the thresholds.