This is super cool! I love this interpretation :D

It might relate to how this butterfly pattern of connection was used in telephone switching networks and computer networks

Would love to chat more!

Open justheuristic opened 2 years ago

This is super cool! I love this interpretation :D

It might relate to how this butterfly pattern of connection was used in telephone switching networks and computer networks

Would love to chat more!

Thanks for the reference. I did not know about telephone switching, but i've seen NVIDIA use the same pattern for adding vectors in parallel in scientific computing.

Would love to chat as well. We can find each other on discord (i'm yozh there) -- or your alternatives.

Random thoughts:

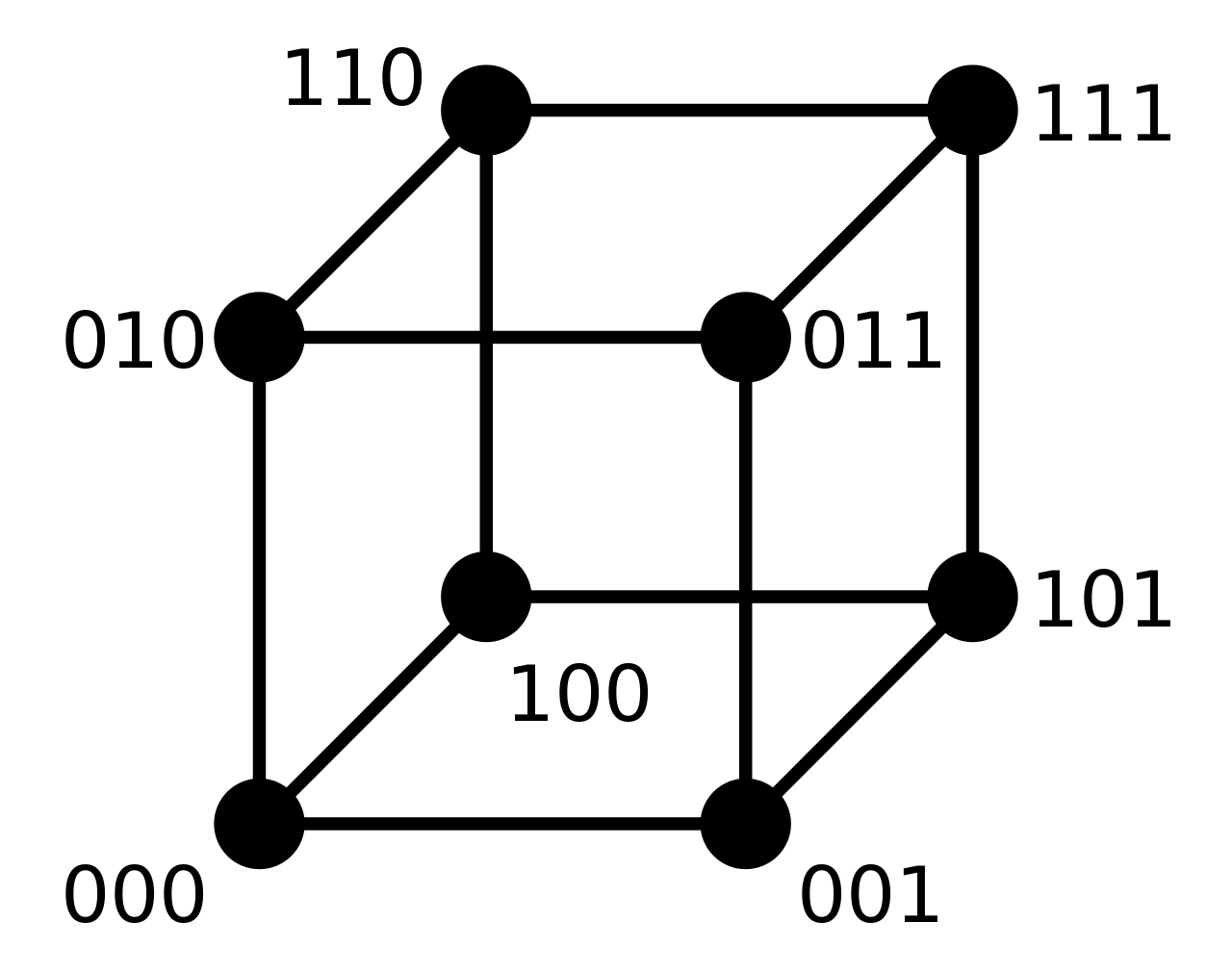

1. it is possible to halve the max distance in a hypercube graph by adding one more "edge" (in discussion with @denkorzh ) This is known as folding the cube: connecting each node to the single farthest other node. Hence, node 1101 would connect to 0010. This corresponds to adding one more "1" to each row in the layout matrix. The max length changes from log2 num_blocks to (log2 num_blocks)/2. In other words, the information can travel from any unit to any other unit with half as many layers.

This may be useful if we scale pixelfly to very large models, e.g. GPT-3 has 12288 hidden units, this would translate to a 256x256 grid of 48x48 blocks. It would take 8 consecutive layers to propagate signal from block 00000000 to block 11111111. After folding, we get marginally more parameters, but it now takes only 4 layers (instead of 8) to connect any pair of blocks.

2.Relation to efficient convolutions and GNN / GCN ( proposed by @TimDettmers )

3. There are directed graphs that have similar properties with less weights and/or compute due to assymetry:

[projected usefulness = low] 4. product spaces

[projected usefulness = none] 5. (low) there are two "brethren" to the binary hypercube that are more dense (in discussion with @denkorzh )

Update ( from @ostroumova-la )

- There are directed graphs that have similar properties with less weights and/or compute due to assymetry:

Actually, exponential graph has exactly the same diameter as a hypercube but worse theoretical properties (e.g. it cannot be folded). Therefore, in theory, a folded hypercube should be superior to it in all ways: 2x smaller diameter and just as redundant for the price of 1 extra edge. However, this does not guarantee practical performance for transformers.

Kautz graphs are a whole different matter. For a given hypercube, you can find a Kautz graph with better sparsity and a smaller diameter at the cost of less redundancy. In other words, any block of inputs can contribute to any other block of inputs through other blocks. In Kautz graph, you meed a smaller number of steps (layers), but there are less possible paths along which you can contribute

Kautz(m=4, n=3) => V=320, E/V=4.0, diameter=4 | hypercube V=256 E/V=8 diameter=8 -or- E/V=9 diameter=4

Kautz(m=4, n=4) => V=1280, E/V=4.0, diameter=5 | hypercube V=1024 E/V=10 diameter=10 -or- E/V=11 diameter=5

Kautz(m=4, n=5) => V=5120, E/V=4.0, diameter=6 | hypercube V=4096 E/V=12 diameter=12, etc...

Kautz(m=5, n=2) => V=150, E/V=5.0, diameter=3

Kautz(m=5, n=3) => V=750, E/V=5.0, diameter=4

Kautz(m=5, n=4) => V=3750, E/V=5.0, diameter=5

Kautz(m=5, n=5) => V=18750, E/V=5.0, diameter=6

Kautz(m=6, n=2) => V=252, E/V=6.0, diameter=3

Kautz(m=6, n=3) => V=1512, E/V=6.0, diameter=4

Kautz(m=6, n=4) => V=9072, E/V=6.0, diameter=5

Kautz(m=6, n=5) => V=54432, E/V=6.0, diameter=6

Kautz(m=7, n=2) => V=392, E/V=7.0, diameter=3

Kautz(m=7, n=3) => V=2744, E/V=7.0, diameter=4 | hypercube V=2048 E/V=11 diameter=11 or E/V=12 diameter=6Again, this may not reflect deep learning performance, but it just might work for transformers -- and it has less edges -> less compute.

Okay, so imagine the full pixelfly matrix with 2^n blocks

Let's give each input block a number 0... 2^n-1 Then, the pixelfly matrix can be defined as such:

This is the same condition that defines a binary cube in n dimensions:

Ergo, pixelfly neurons actually form a cube and "connect" over the edges of said cube.

p.s. not my original thought, discovered in discussion with @ostroumova-la

p.p.s. if that is the case, are there other geometric shapes we could try? So, for instance, fully connected matrix can be viewed as an n-dimensional simplex (triangle -> tetrahedron -> simples) because all blocks connect to all other blocks. Than goes the hypercube of pixelfly. Then what?