I am trying to do this as well, but on C++ instead of C#.

@RealSense-Customer-Engineering Quick question that is not really related: why isn't alignment of the depth and color frames done by default? IMHO it would make more sense for calculations, comparisons, etc. Is this because of performance? As Craig (AussieGrowls) mentioned, the "Align method aligns every pixel and slows down the frame rate dramatically".

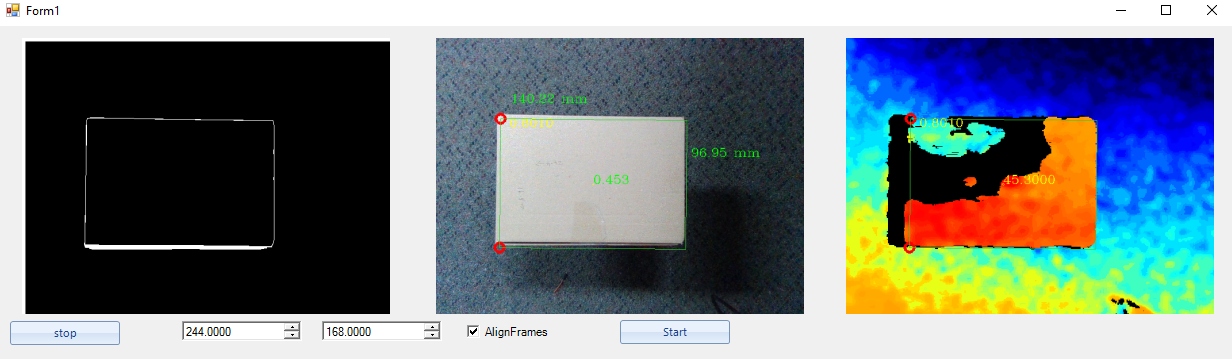

The green circles in the image above are the pixel coordinates I took from the rs_measure example, yet they are very different from the pixel coordinates in the C# app (the red circles).

The SDK is built from source, not precompiled.

This is the measurement I took in the rs_measure example:

I put a breakpoint on this, and took the pixels (from and to) that the dist_3d method requires:

```cpp
auto from_pixel = s.ruler_start.get_pixel(depth);
```

(0.24m or 24.4cm)

However, that is using pixel X/Y coordinates from rs_measure, which do not match the stream as shown in the C# app.

Looks like the color frame has an interlace issue? Using the cs-tutorial-2-capture:

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text;
using System.Threading;
using System.Threading.Tasks;
using System.Windows;
using System.Windows.Controls;
using System.Windows.Data;
using System.Windows.Documents;
using System.Windows.Input;
using System.Windows.Media;
using System.Windows.Media.Imaging;
using System.Windows.Navigation;
using System.Windows.Shapes;
using System.Windows.Threading;
```

Hi all, I am having trouble getting box dimensions from the D435. The first issue is that the depth and color frames are not aligned because of their different FOVs. I can use the Align method; however, it aligns every pixel and slows down the frame rate dramatically, so much that the syncer throws an error after a minute or two: `Frame didn't arrived within 5000`
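If only a few pixels are actually needed (for example the corners of the box), one option is to map just those pixels from depth to color instead of aligning the whole frame. Below is a minimal C++ sketch of that per-pixel mapping (deproject, then rigid transform, then project), assuming all distortion coefficients are zero. `Intrinsics` and `Extrinsics` are hypothetical stand-ins for `rs2_intrinsics` / `rs2_extrinsics` (rotation stored column-major, as in `rs2_extrinsics`); in a real app the values would come from the stream profiles (`get_intrinsics()` and `get_extrinsics_to()` in the C++ API).

```cpp
#include <array>
#include <cassert>

// Hypothetical stand-in for rs2_intrinsics; distortion is ignored in this sketch
// (assumes all distortion coefficients are zero).
struct Intrinsics {
    float ppx, ppy; // principal point, in pixels
    float fx, fy;   // focal lengths, in pixels
};

// Hypothetical stand-in for rs2_extrinsics.
struct Extrinsics {
    float rotation[9];    // 3x3 rotation, column-major
    float translation[3]; // metres
};

using Point3 = std::array<float, 3>;
using Pixel = std::array<float, 2>;

// Pixel + depth (metres) -> 3D point in the depth camera's frame (pinhole model).
Point3 deproject(const Intrinsics& in, Pixel px, float depth) {
    float x = (px[0] - in.ppx) / in.fx;
    float y = (px[1] - in.ppy) / in.fy;
    return {depth * x, depth * y, depth};
}

// Rigid transform of a point from the depth camera frame into the color camera frame.
Point3 transform_point(const Extrinsics& ex, const Point3& p) {
    const float* r = ex.rotation;
    return {r[0] * p[0] + r[3] * p[1] + r[6] * p[2] + ex.translation[0],
            r[1] * p[0] + r[4] * p[1] + r[7] * p[2] + ex.translation[1],
            r[2] * p[0] + r[5] * p[1] + r[8] * p[2] + ex.translation[2]};
}

// 3D point in the color camera frame -> color pixel (pinhole model).
Pixel project(const Intrinsics& in, const Point3& p) {
    assert(p[2] > 0.0f); // the point must be in front of the camera
    return {p[0] / p[2] * in.fx + in.ppx, p[1] / p[2] * in.fy + in.ppy};
}

// Map one depth pixel to the matching color pixel without aligning the whole frame.
Pixel depth_pixel_to_color_pixel(const Intrinsics& depth_in, const Intrinsics& color_in,
                                 const Extrinsics& depth_to_color, Pixel depth_px, float depth_m) {
    Point3 p = deproject(depth_in, depth_px, depth_m);
    return project(color_in, transform_point(depth_to_color, p));
}
```

With illustrative (made-up) values, depth intrinsics `{320, 240, 400, 400}`, color intrinsics `{320, 240, 600, 600}`, identity rotation and a 15 mm X offset, the depth pixel (320, 240) at 1 m maps to roughly color pixel (329, 240). The cost is a handful of multiplications per requested pixel rather than per pixel in the frame.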

My objective is to measure a box from the top down. Note: the red circles are where I am trying to measure.

I can use the rs-measure example to measure the box accurately. However, because the C# wrapper does not have a wrapper for `rs2_deproject_pixel_to_point`, I had to write my own in C# (it is only math). The results are very, very wrong, though. Is it because of the BrownConrady intrinsic model? The Assert fails in my C# version:

```csharp
static float[] DeprojectPixelToPoint(Intrinsics intrin, PointF pixel, float depth)
{
    //Debug.Assert(intrin.model != Distortion.BrownConrady); // Cannot deproject from a forward-distorted image
    Debug.Assert(intrin.model != Distortion.Ftheta); // Cannot deproject to an ftheta image
    var ret = new float[3];
    float x = (pixel.X - intrin.ppx) / intrin.fx;
    float y = (pixel.Y - intrin.ppy) / intrin.fy;
    if (intrin.model == Distortion.BrownConrady)
    {
        float r2 = x * x + y * y;
        float f = 1 + intrin.coeffs[0] * r2 + intrin.coeffs[1] * r2 * r2 + intrin.coeffs[4] * r2 * r2 * r2;
        float ux = x * f + 2 * intrin.coeffs[2] * x * y + intrin.coeffs[3] * (r2 + 2 * x * x);
        float uy = y * f + 2 * intrin.coeffs[3] * x * y + intrin.coeffs[2] * (r2 + 2 * y * y);
        x = ux;
        y = uy;
    }
    ret[0] = depth * x;
    ret[1] = depth * y;
    ret[2] = depth;
    return ret;
}
```
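For comparison, here is a C++ sketch of the same deprojection modelled on `rs2_deproject_pixel_to_point` in `rsutil.h` as it looked in SDK releases from around this time (newer releases may handle more distortion models). One difference from the C# port above: `rsutil.h` asserts against *modified* Brown-Conrady and applies the correction only for *inverse* Brown-Conrady, whereas the C# version uses `Distortion.BrownConrady` both in the (commented-out) assert and in the correction branch. `Distortion` and `Intrinsics` here are hypothetical mirrors of `rs2_distortion` and `rs2_intrinsics`.

```cpp
#include <array>
#include <cassert>

// Hypothetical mirror of rs2_distortion (only the models this sketch refers to).
enum class Distortion { None, ModifiedBrownConrady, InverseBrownConrady, Ftheta, BrownConrady };

// Hypothetical mirror of rs2_intrinsics.
struct Intrinsics {
    int width, height;
    float ppx, ppy, fx, fy;
    Distortion model;
    float coeffs[5];
};

// Pixel + depth (metres) -> 3D point, following the rsutil.h logic described above.
std::array<float, 3> deproject_pixel_to_point(const Intrinsics& intrin, const float pixel[2], float depth) {
    assert(intrin.model != Distortion::ModifiedBrownConrady); // cannot deproject from a forward-distorted image
    assert(intrin.model != Distortion::Ftheta);               // cannot deproject to an ftheta image
    float x = (pixel[0] - intrin.ppx) / intrin.fx;
    float y = (pixel[1] - intrin.ppy) / intrin.fy;
    if (intrin.model == Distortion::InverseBrownConrady) {
        const float* c = intrin.coeffs;
        float r2 = x * x + y * y;
        float f = 1 + c[0] * r2 + c[1] * r2 * r2 + c[4] * r2 * r2 * r2;
        float ux = x * f + 2 * c[2] * x * y + c[3] * (r2 + 2 * x * x);
        float uy = y * f + 2 * c[3] * x * y + c[2] * (r2 + 2 * y * y);
        x = ux;
        y = uy;
    }
    // Other models (None, BrownConrady) pass through without correction in this sketch.
    return {depth * x, depth * y, depth};
}
```

With all-zero coefficients the correction branch reduces to the identity (`f == 1`, `ux == x`, `uy == y`), so the choice of model only changes the result when the stream actually reports non-zero coefficients.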

Getting the 3D distance in C#:

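```csharp
public static float GetDistance_3d(this DepthFrame frame, PointF from, PointF to, Intrinsics intr)
{
    // Query the frame for distance
    // Note: this can be optimized
    // It is not recommended to issue an API call for each pixel
    // (since the compiler can't inline these)
    // However, in this example it is not one of the bottlenecks
    var vdist = frame.GetDistance((int)from.X, (int)from.Y);
    var udist = frame.GetDistance((int)to.X, (int)to.Y);

    // Deproject both pixels and return the Euclidean distance, as dist_3d does in rs-measure
    var vpoint = DeprojectPixelToPoint(intr, from, vdist);
    var upoint = DeprojectPixelToPoint(intr, to, udist);
    return (float)Math.Sqrt(Math.Pow(upoint[0] - vpoint[0], 2) +
                            Math.Pow(upoint[1] - vpoint[1], 2) +
                            Math.Pow(upoint[2] - vpoint[2], 2));
}
```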
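The distance step itself is small. A C++ sketch in the spirit of rs-measure's `dist_3d`, assuming zero distortion (`Intrinsics`, `Point3`, `deproject` and this `dist_3d` signature are hypothetical): deproject both end points, then take the Euclidean distance. The pixels must be depth-frame pixels and `in` the depth stream's intrinsics; coordinates picked on an unaligned color image would measure the wrong spots.

```cpp
#include <cassert>
#include <cmath>

// Minimal pinhole intrinsics (hypothetical stand-in for rs2_intrinsics, distortion ignored).
struct Intrinsics { float ppx, ppy, fx, fy; };

struct Point3 { float x, y, z; };

// Depth pixel (u, v) plus its depth in metres -> 3D point in metres.
Point3 deproject(const Intrinsics& in, float u, float v, float depth) {
    return {depth * (u - in.ppx) / in.fx, depth * (v - in.ppy) / in.fy, depth};
}

// Deproject both ends of the ruler and return the straight-line distance in metres.
float dist_3d(const Intrinsics& in, float u1, float v1, float d1, float u2, float v2, float d2) {
    assert(d1 > 0.0f && d2 > 0.0f); // a depth of 0 means "no data" for that pixel
    Point3 a = deproject(in, u1, v1, d1);
    Point3 b = deproject(in, u2, v2, d2);
    float dx = a.x - b.x, dy = a.y - b.y, dz = a.z - b.z;
    return std::sqrt(dx * dx + dy * dy + dz * dz);
}
```

For example, with `in = {320, 240, 400, 400}`, the pixels (320, 240) and (420, 240), both at 1 m, are 100 / 400 = 0.25 m apart.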

My main issue, I think, is converting points to pixel space [0,1]. If I run the measure example, put a breakpoint in the code, take the pixel X/Y coordinates and then put them into the C# app, the coordinates are not where they should be.
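rs-measure keeps the ruler end points in normalized [0,1] image coordinates, and `get_pixel` appears to convert them by scaling with the depth frame's width and height. A minimal C++ sketch of that conversion (`PixelI` and `normalized_to_pixel` are hypothetical names; the clamp is an extra safeguard, not something taken from the example):

```cpp
#include <cassert>

// Integer pixel coordinates within a specific frame.
struct PixelI { int x, y; };

// Normalized [0,1] coordinates -> pixel coordinates for a frame of width x height.
// Scales by the frame size and truncates toward zero, then clamps so that 1.0
// still lands on the last column/row.
PixelI normalized_to_pixel(float nx, float ny, int width, int height) {
    assert(width > 0 && height > 0);
    assert(nx >= 0.0f && nx <= 1.0f && ny >= 0.0f && ny <= 1.0f);
    int px = static_cast<int>(nx * width);
    int py = static_cast<int>(ny * height);
    if (px >= width) px = width - 1;
    if (py >= height) py = height - 1;
    return {px, py};
}
```

Because the result depends on the frame size, pixel coordinates copied out of rs-measure only line up in another app if it uses the same stream, resolution and alignment; the normalized values carry over more easily, provided the field of view is the same. For instance, (0.25, 0.75) maps to pixel (212, 360) in an 848x480 frame.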

Any help would be much appreciated. Thanks Craig