Hi @rghl3,

I noticed in your video that the tracking confidence stays at medium (yellow trace in the 3D view). To get high-confidence tracking, you should give the T265 enough motion to help it estimate its local position accurately.

Moreover, it's highly recommended to use wheel odometry input in your case.

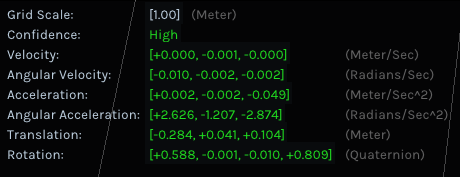

Note that you can display the T265's info (including translation data) by pressing the "i" button in the 2D/3D view.
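If you prefer to log the same values outside the viewer, here is a minimal sketch using the `pyrealsense2` Python wrapper. It assumes the wrapper is installed and a T265 is attached; the helper names (`confidence_label`, `format_pose`, `stream_poses`) are made up for this illustration, and the label mapping assumes the 0 to 3 confidence scale (Failed, Low, Medium, High) reported in the pose data.

```python
# Assumed mapping of the T265 tracker confidence values (0-3) to labels.
CONFIDENCE_LABELS = {0: "Failed", 1: "Low", 2: "Medium", 3: "High"}


def confidence_label(level):
    """Return a readable label for a tracker confidence value."""
    return CONFIDENCE_LABELS.get(level, "Unknown")


def format_pose(x, y, z, level):
    """Format a translation (in meters) together with its confidence label."""
    return (
        f"translation=({x:.3f}, {y:.3f}, {z:.3f}) m, "
        f"confidence={confidence_label(level)}"
    )


def stream_poses(num_frames=200):
    """Print translation and confidence for a number of pose frames.

    Requires pyrealsense2 and a connected T265. The import is kept local
    so the helpers above can be used without the SDK installed.
    """
    import pyrealsense2 as rs

    pipe = rs.pipeline()
    cfg = rs.config()
    cfg.enable_stream(rs.stream.pose)  # request only the pose stream
    pipe.start(cfg)
    try:
        for _ in range(num_frames):
            frames = pipe.wait_for_frames()
            pose = frames.get_pose_frame()
            if not pose:
                continue  # no pose data in this frameset
            data = pose.get_pose_data()
            t = data.translation
            print(format_pose(t.x, t.y, t.z, data.tracker_confidence))
    finally:
        pipe.stop()
```

Calling `stream_poses()` while moving the camera should make it easy to compare the reported translation against the distance actually travelled, and to see whether the confidence ever leaves "Medium".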

Issue Description

Hi, I have connected the camera to a laptop. When I move the camera slowly in the forward direction, the camera pose tracking doesn't follow the movement (it stays almost stationary). After moving about 6 meters, the 3D tracking shows only about 0.5 meters. Here is the link for the video. Please have a look at it. (Grid size is 1 m.)

As I am using it on a rover platform moving at a slow speed, these untracked pose measurements result in accumulated drift over long runs.