Results can differ between the RealSense Viewer and a self-written application because the Viewer enables a range of post-processing filters by default to improve image quality. In an application that you write yourself, post-processing filters are only applied if you deliberately program them in.

If you open the 'Post-Processing' section of the Viewer's options side panel, you can see which filters are being applied and their settings. Active filters are indicated by a blue icon beside them.

Intel has published a tutorial for adding post-processing filters to a pyrealsense2 application:

https://github.com/IntelRealSense/librealsense/blob/jupyter/notebooks/depth_filters.ipynb

More information about post-processing filters can be found here:

https://github.com/IntelRealSense/librealsense/blob/master/doc/post-processing-filters.md
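As a rough illustration of what "programming the filters in" can look like, here is a minimal sketch using pyrealsense2. The helper names `apply_filters` and `capture_filtered_depth` are made up for this example, and the choice of three filters with default settings is an assumption, so it will not necessarily reproduce the exact settings shown in the Viewer.

```python
def apply_filters(frame, filters):
    """Pass a frame through each filter in order and return the final result."""
    for f in filters:
        frame = f.process(frame)
    return frame


def capture_filtered_depth():
    """Grab one depth frame from the first connected camera and filter it."""
    # Imported here so apply_filters can be used without the SDK installed.
    import pyrealsense2 as rs

    # Filters are created with their default settings, which may not match
    # the values shown in the Viewer's 'Post-Processing' panel.
    # The temporal filter smooths over time, so it needs several frames
    # before it has a visible effect.
    filters = [
        rs.decimation_filter(),
        rs.spatial_filter(),
        rs.temporal_filter(),
    ]

    pipeline = rs.pipeline()
    pipeline.start()
    try:
        frames = pipeline.wait_for_frames()
        depth_frame = frames.get_depth_frame()
        return apply_filters(depth_frame, filters)
    finally:
        pipeline.stop()
```

With a camera connected, calling `capture_filtered_depth()` should return a filtered depth frame. In a real application you would keep the filter objects alive and call `apply_filters` on every frame inside the capture loop.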

| Camera Model | D435 |
|---|---|
| Firmware Version | 05.11.06.250 |
| Operating System & Version | Win 10 |
| Platform | PC |
| SDK Version | 2.25.0 |
| Language | python |
| Segment | vision |

Issue Description

I am trying to load a JSON file that I created in the Intel RealSense Viewer in order to optimize the settings for my use case. Below is my script. The JSON file is called "Custom.json".

I'm receiving different results when I run the camera with the RealSense Viewer compared to my script.

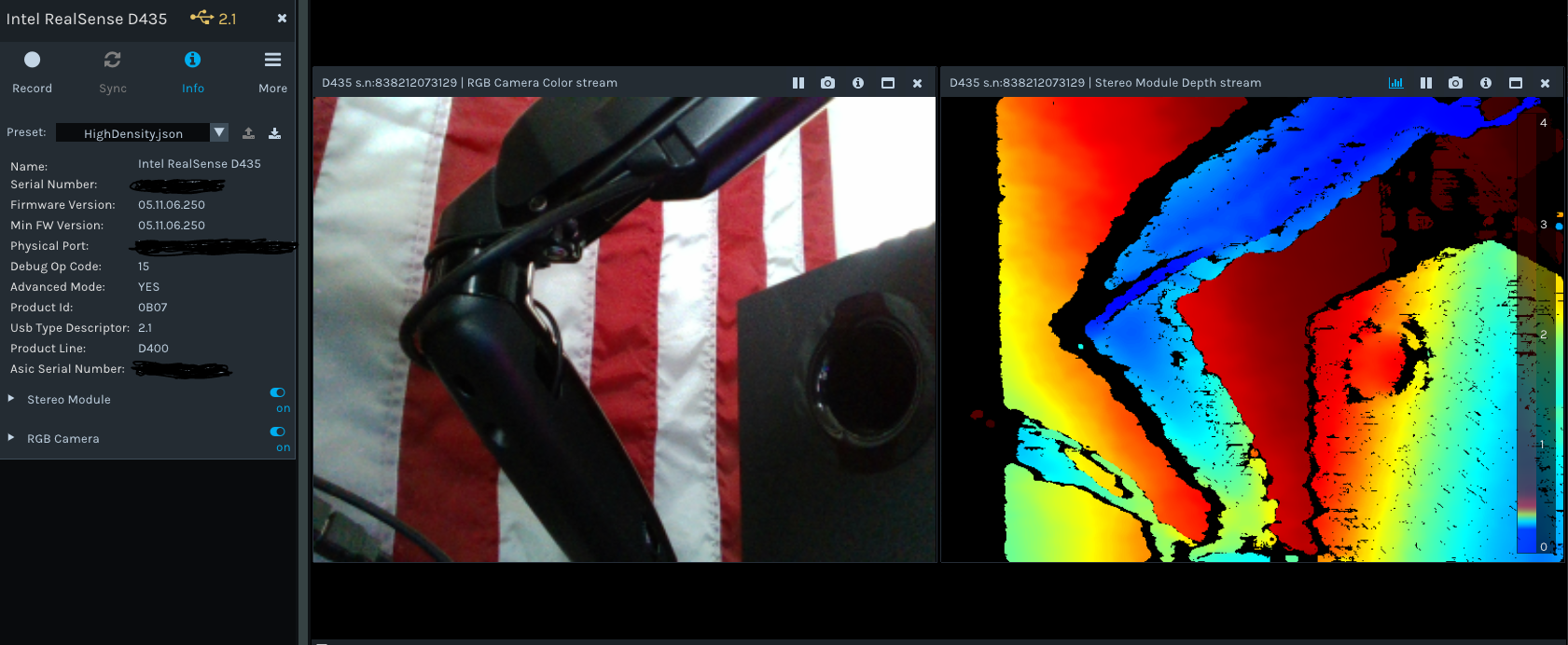

Image of what the settings look like in the viewer.

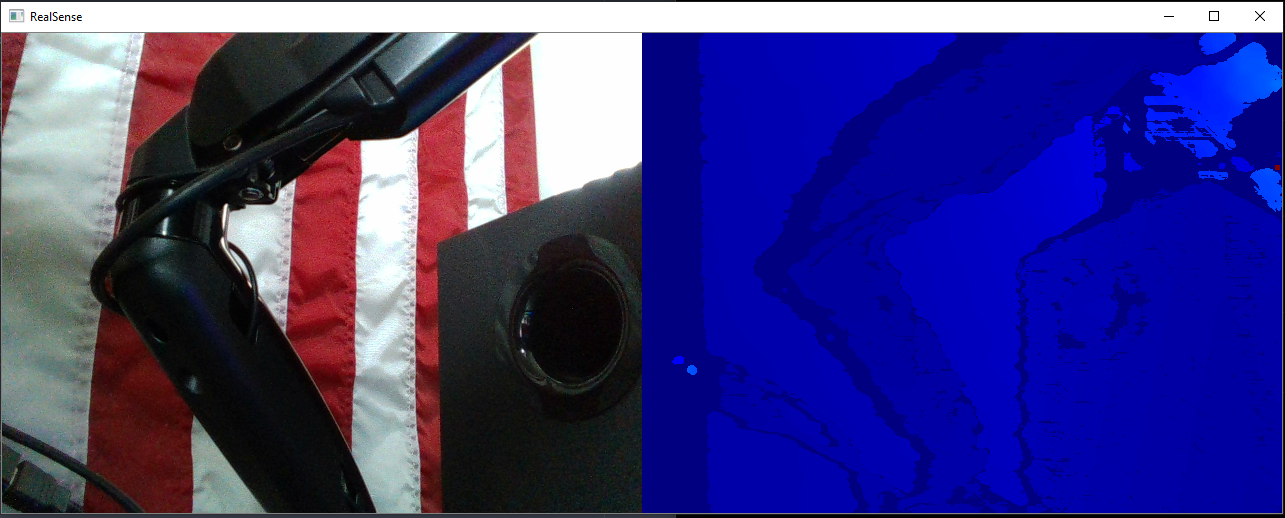

Image of what the settings look like when launched in my script.

Why does my script return only blue on the depth image?

Shouldn't it mirror the output that is shown in the RealSense Viewer?

I'm using the same file for both cases.
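For comparison, here is a minimal sketch of one way a Viewer-exported JSON file can be loaded from Python through the D400 advanced mode interface. This is not the script from the question; the helper names `validate_preset` and `load_preset` are hypothetical, and the sketch assumes a single D400-series camera with advanced mode already enabled.

```python
import json


def validate_preset(text):
    """Return the preset text unchanged if it parses as JSON, else raise ValueError."""
    json.loads(text)
    return text


def load_preset(path="Custom.json"):
    """Load a JSON preset into the first connected camera."""
    # Imported here so validate_preset can be used without the SDK installed.
    import pyrealsense2 as rs

    with open(path, "r") as f:
        preset = validate_preset(f.read())

    # Assumption: the first device found is the D435 to configure.
    device = None
    for dev in rs.context().query_devices():
        device = dev
        break
    if device is None:
        raise RuntimeError("No RealSense device connected")

    advanced = rs.rs400_advanced_mode(device)
    if not advanced.is_enabled():
        # Enabling advanced mode makes the device reconnect, so this sketch
        # stops here rather than waiting and searching for the device again.
        raise RuntimeError("Advanced mode is not enabled on this device")

    # load_json takes the JSON content as a string, not a file path.
    advanced.load_json(preset)
    return device
```

Calling `load_preset("Custom.json")` before starting the pipeline should apply the stored settings to the camera. Filters that are applied by the application, such as those in the Viewer's 'Post-Processing' panel, still have to be added in your own code.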