I'm not sure I fully understood:

> but using this it seems like my Z is equal to the depth to the object and that is not what I need

Looking at the code, it seems correct.

If it is computationally feasible, I would recommend using `rs2::align` to bring the two frames into a single viewport. This should simplify the problem significantly (we have two examples on alignment: `align` and `align-advanced`).
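Conceptually, aligning depth to color maps each depth pixel into the color image in three steps. First, deproject it to a 3D point with the depth intrinsics. Second, move that point into the color camera's frame with the depth-to-color extrinsics. Third, project it with the color intrinsics. The sketch below shows those steps for a single pixel under simplifying assumptions. `Intrinsics`, `Extrinsics` and the helper functions are hypothetical stand-ins, not librealsense types. Lens distortion is ignored and the rotation is stored row-major. It only illustrates the idea and is not a replacement for `rs2::align`, which processes whole frames.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec3 = std::array<float, 3>;
using Pixel = std::array<float, 2>;

// Hypothetical pinhole intrinsics (no distortion), loosely mirroring rs2_intrinsics.
struct Intrinsics {
    float fx, fy;   // focal lengths in pixels
    float ppx, ppy; // principal point in pixels
};

// Hypothetical rigid transform: p_to = R * p_from + t, R stored row-major.
struct Extrinsics {
    float R[3][3];
    float t[3]; // metres
};

// Pixel + depth (metres along the optical axis) -> 3D point in that camera's frame.
Vec3 deproject(const Intrinsics& in, const Pixel& px, float depth) {
    return { (px[0] - in.ppx) / in.fx * depth,
             (px[1] - in.ppy) / in.fy * depth,
             depth };
}

// Apply the rigid transform p' = R * p + t.
Vec3 transform(const Extrinsics& e, const Vec3& p) {
    Vec3 out{};
    for (int r = 0; r < 3; ++r)
        out[r] = e.R[r][0] * p[0] + e.R[r][1] * p[1] + e.R[r][2] * p[2] + e.t[r];
    return out;
}

// 3D point in a camera's frame -> pixel in that camera's image (requires p[2] > 0).
Pixel project(const Intrinsics& in, const Vec3& p) {
    return { p[0] / p[2] * in.fx + in.ppx,
             p[1] / p[2] * in.fy + in.ppy };
}

// Where a single depth pixel lands in the color image.
Pixel depth_pixel_to_color_pixel(const Intrinsics& depth_in, const Intrinsics& color_in,
                                 const Extrinsics& depth_to_color,
                                 const Pixel& depth_px, float depth) {
    Vec3 p_depth = deproject(depth_in, depth_px, depth);   // depth camera frame
    Vec3 p_color = transform(depth_to_color, p_depth);     // color camera frame
    return project(color_in, p_color);                     // color image pixel
}
```

With identical intrinsics (fx = fy = 600, principal point at (320, 240)) and a pure 5 cm sideways offset between the cameras, the centre pixel at 1 m lands at roughly x = 350 in the color image. That shift is the parallax alignment compensates for.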

Hello all,

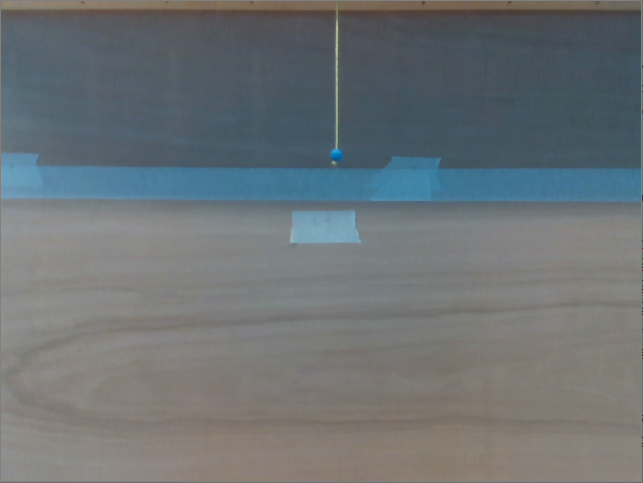

I am currently working on a vision system to detect blue marbles. I am able to detect one marble in each picture. I now want to know the X, Y, Z coordinates of this marble. Here is the image I use for detection:

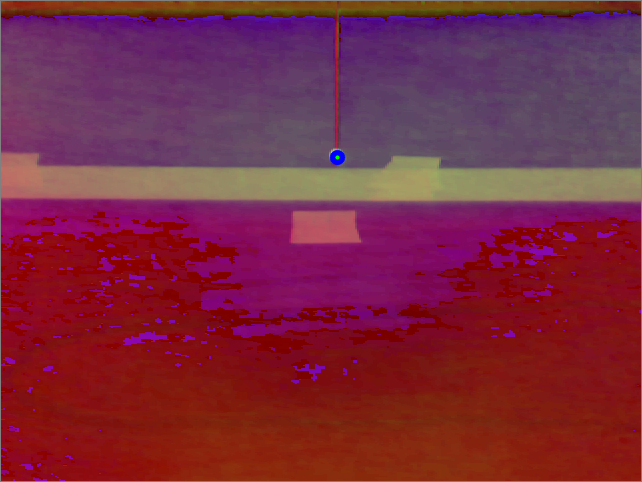

Here is the output with the detected marble:

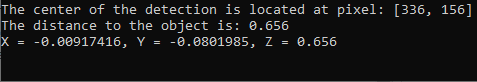

And here is my console output:

In my code I get the X,Y of the centre of the marble in the image and the distance to the marble.

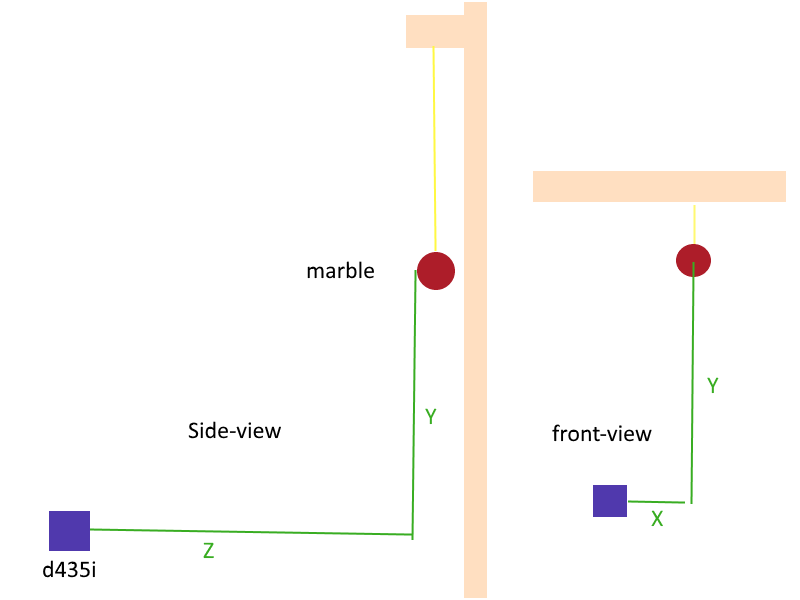

What I need now are the X, Y, Z coordinates of the centre of the marble. I tried doing this by using

`rs2_deproject_pixel_to_point(berrycord, &depth_intrin, dpt_tgt_pixel, distance);`

but using this it seems like my Z is equal to the depth to the object and that is not what I need. See the illustration below for the values of X, Y and Z that I would like to get.

Would anyone be able to help me with this? Last week I thought I had it, but now I'm seriously doubting the correctness of my Z value.
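A possible explanation for the Z value comes from the pinhole model (ignoring distortion). Deprojection returns a point in the depth camera's own frame, where X = (u - ppx) / fx * depth, Y = (v - ppy) / fy * depth, and Z is the depth itself. So if `distance` holds the depth value at that pixel (for example from `rs2::depth_frame::get_distance`), getting Z back equal to it is expected. Coordinates in another frame, such as one attached to the table, need a further rigid transform based on the camera's pose.

The illustration is not available here, so the sketch below assumes a hypothetical setup: a camera `height` metres above a table, looking straight down, with world +Z pointing up from the table. `Intrinsics`, `Pose`, `deproject`, `camera_to_world` and `looking_down_pose` are illustrative names, not librealsense API. The camera-frame convention (+X right, +Y down, +Z forward) follows the librealsense projection documentation.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec3 = std::array<float, 3>;

// Hypothetical pinhole intrinsics (no distortion), loosely mirroring rs2_intrinsics.
struct Intrinsics { float fx, fy, ppx, ppy; };

// Hypothetical camera pose: p_world = R * p_camera + t, R stored row-major.
struct Pose { float R[3][3]; float t[3]; };

// Distortion-free deprojection: the returned Z is exactly the input depth.
Vec3 deproject(const Intrinsics& in, float u, float v, float depth) {
    return { (u - in.ppx) / in.fx * depth, (v - in.ppy) / in.fy * depth, depth };
}

// Express a camera-frame point in the (assumed) world frame.
Vec3 camera_to_world(const Pose& pose, const Vec3& p) {
    Vec3 out{};
    for (int r = 0; r < 3; ++r)
        out[r] = pose.R[r][0] * p[0] + pose.R[r][1] * p[1] + pose.R[r][2] * p[2] + pose.t[r];
    return out;
}

// Assumed mounting: camera `height` metres above the table, looking straight down.
// Camera axes: +X right, +Y down, +Z forward (towards the table).
// World axes chosen here: +X same as camera, +Y flipped, +Z up from the table surface.
// This is a 180-degree rotation about X plus a translation of `height` along world Z.
Pose looking_down_pose(float height) {
    return { { {1.0f, 0.0f, 0.0f}, {0.0f, -1.0f, 0.0f}, {0.0f, 0.0f, -1.0f} },
             {0.0f, 0.0f, height} };
}
```

With the camera 0.5 m above the table, a marble centre measured at a depth of 0.48 m below the principal point ends up at a world Z of about 0.02 m. That is its height above the table rather than its distance from the camera. In a real setup the pose would have to come from calibration or from measuring how the camera is mounted.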

This is the code I currently use for this: