Thanks for your interest! For one specific (rgb, sd, gt) tuple, the supervision is semi-dense and there're many pixels where supervision doesn't exist. However, for the whole dataset, on most of the pixels in the 2D-image coordinate the network receives supervision from different data samples. And we should also notice some pixels where there're truly no supervision, like those in the top of the full image (out of the FoV of the LiDAR).

(GT at the top and results at the bottom)

(GT at the top and results at the bottom) loss function: your code loss. sparse

result:unsupervised depth is zero?(The origin part)

loss function: your code loss. sparse

result:unsupervised depth is zero?(The origin part)

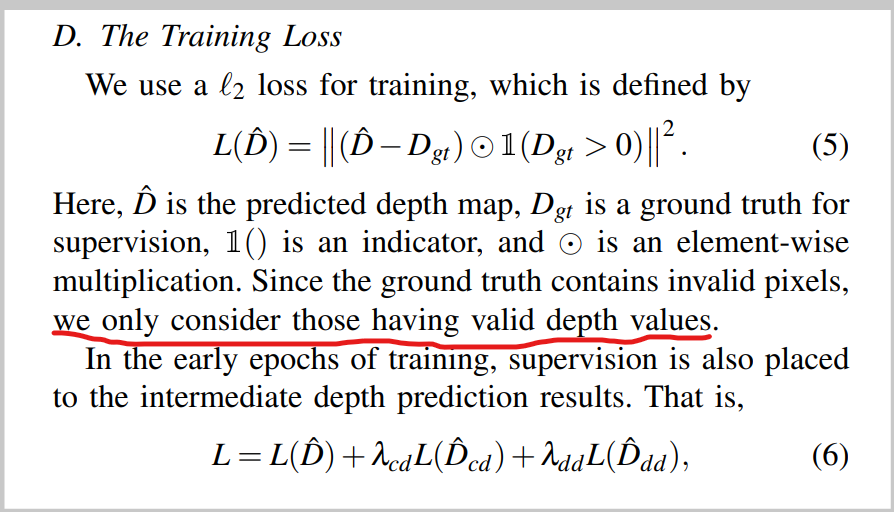

Thank you for your excellent work, network design gives me a lot of inspiration. But there's one thing I never understood. I noticed that the training loss function in the paper only focuses on pixels with valid depth(sparse),so why can networks generate dense depth maps? How to ensure that pixels are accurate without supervision?