@npfp I'm guessing you mean the other way around?

I'm all for adding a `--target` arg to the CLI to allow deploying a single pipeline, but I feel that `--env` is more commonly used to specify things such as dev, prod, etc.

Closed npfp closed 3 months ago

Just to be sure we use the same terminology.

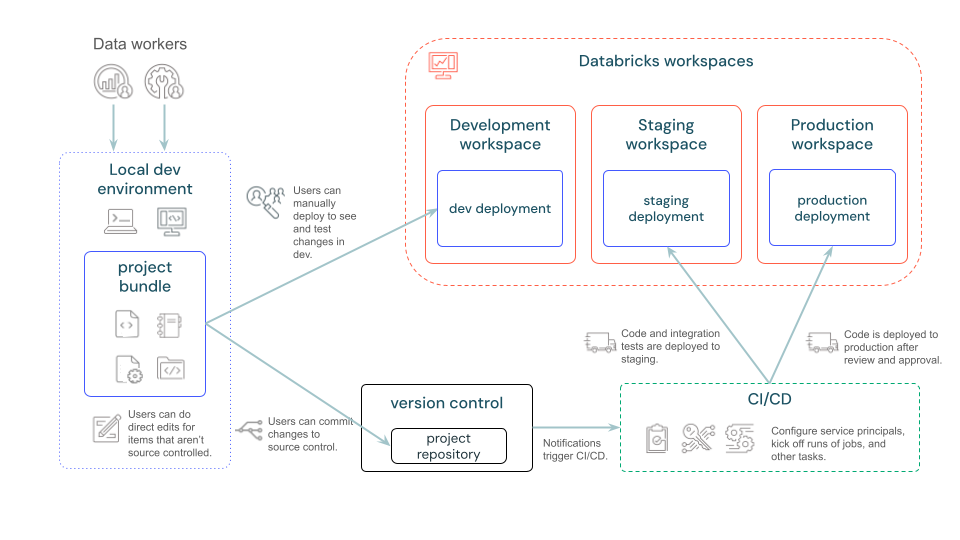

By target, I meant an entry in `targets` of the `databricks.yml` file: the target Databricks environment (which can live in different workspaces, as in this image: development, staging, production).

By env, I meant a kedro conf folder. For example base, local, etc. (https://docs.kedro.org/en/stable/configuration/configuration_basics.html#configuration-environments)

I see your point. Indeed, we might have misused the kedro environment by creating one environment per pipeline. If you have any links on how to handle multiple independent pipelines in a single repo, that would be much appreciated!

What I find confusing is that locally I can run:

    kedro run --pipeline=my_pipeline --env=my_env

to specify running a particular environment. But I can't find a way to do the same within Databricks.

If I look at the definition of the `python_wheel_task` in `databricks.yml`, I see no usage of `env`:

    python_wheel_task:
      package_name: my_package
      entry_point: databricks_run
      parameters:
        - --nodes
        - my_node
        - --conf-source
        - /dbfs/FileStore/my_package/conf
        - --package-name
        - my_package
    libraries:
      - whl: ../dist/*.whl

where I would expect to have a

    parameters:
      - --nodes
      - my_node
      - --conf-source
      - /dbfs/FileStore/my_package/conf
      - --package-name
      - my_package
      - --env
      - my_env

Am I missing something?
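To make the expected task spec concrete, here is a minimal Python sketch of how the `parameters` list could be assembled with an optional `--env` entry. The helper name `build_task_parameters` is hypothetical and is not part of the plugin; it only illustrates appending the kedro environment after the existing arguments.

```python
from typing import List, Optional


def build_task_parameters(
    node: str,
    conf_source: str,
    package_name: str,
    env: Optional[str] = None,
) -> List[str]:
    """Assemble the `parameters` list of a python_wheel_task (hypothetical helper)."""
    params = [
        "--nodes", node,
        "--conf-source", conf_source,
        "--package-name", package_name,
    ]
    # Forward the kedro environment only when one is given,
    # so specs without an env stay unchanged.
    if env is not None:
        params += ["--env", env]
    return params


if __name__ == "__main__":
    for item in build_task_parameters(
        "my_node",
        "/dbfs/FileStore/my_package/conf",
        "my_package",
        env="my_env",
    ):
        print(f"- {item}")
```

Keeping `env` optional means a task generated without a kedro environment keeps the same parameters as today.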

No, you are right! I'll have to think about this.

I use `kedro package` to build the wheel and compress the conf, and there the env is used to grab only the relevant configuration. I thought that would be enough, but the configuration still sits in an env folder, so `--env` is required in the task spec.

Good catch!

Great, thx for the confirmation! In case it helps, here is an attempt to fix this:

Currently a kedro environment is bound to a Databricks Asset bundle target. This doesn't allow to ma

Our use case:

- `dev` and `prod` (with the corresponding secrets exposed as environment variables)
- `first_pipeline`, `second_pipeline`

What we'd want to achieve would be to be able to run

But currently, as the `env` parameter is used for both the target and the kedro environment, it would require us to define `first_pipeline_dev`, `second_pipeline_dev`, `first_pipeline_prod`, `second_pipeline_prod` kedro environments and the target equivalent.
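The growth in definitions can be illustrated with a short Python sketch. It assumes that `dev` and `prod` are the bundle targets and that `first_pipeline` and `second_pipeline` are the kedro environments, which is my reading of the use case above; the function names are hypothetical.

```python
from itertools import product
from typing import List, Tuple

targets = ["dev", "prod"]
kedro_envs = ["first_pipeline", "second_pipeline"]


def coupled_definitions(kedro_envs: List[str], targets: List[str]) -> List[str]:
    """One combined name per (target, kedro env) pair, as required when
    a single `env` value selects both the target and the kedro environment."""
    return [f"{env}_{target}" for target, env in product(targets, kedro_envs)]


def decoupled_runs(kedro_envs: List[str], targets: List[str]) -> List[Tuple[str, str]]:
    """(target, kedro env) pairs that can be selected independently when
    the target and the kedro environment are separate options."""
    return list(product(targets, kedro_envs))


if __name__ == "__main__":
    print(coupled_definitions(kedro_envs, targets))
    print(decoupled_runs(kedro_envs, targets))
```

With the coupled approach, the number of definitions to maintain is the product of both lists. With separate options, only the targets and the kedro environments themselves need to be defined, and any pair can be combined at deploy time.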