Hi, I'm also testing NormalGAN with my own Kinect V2 data. I think your problem is in the depth image pixel values. Modifying the 'multiframe_listener.py' example from pylibfreenect2, I save the depth image as follows using numpy and PIL:

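    import numpy as np
    from PIL import Image

    depth = frames["depth"]
    # Convert the float32 depth frame to int32 so each pixel holds the distance in mm
    depth_np = depth.asarray(np.float32).astype(np.int32, order='C')
    depth_img = Image.fromarray(depth_np)
    depth_img.save('depth.png')

This way, each pixel value preserves the distance in mm. After that, I removed the background using a mask from the color image.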
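
In case it helps, here is a rough sketch of the masking and saving steps on synthetic data, not my exact script. The helper names `apply_mask` and `save_depth_mm` are made up for illustration, and it assumes the mask is already aligned to the 512 x 424 depth frame (a real mask from the color image would need registration first). It writes a 16-bit PNG and reads it back to confirm the millimetre values survive.

```python
import io

import numpy as np
from PIL import Image


def apply_mask(depth_mm, mask):
    """Zero out background pixels; mask is True where the person is (hypothetical helper)."""
    return np.where(mask, depth_mm, 0)


def save_depth_mm(depth_mm, fp):
    """Write a depth map in millimetres as a 16-bit single-channel PNG (hypothetical helper)."""
    depth_u16 = np.clip(depth_mm, 0, 65535).astype(np.uint16)
    Image.fromarray(depth_u16).save(fp, format="PNG")


# Synthetic stand-in for a Kinect V2 depth frame: wall at 2000 mm, "person" at 1500 mm.
depth = np.full((424, 512), 2000.0, dtype=np.float32)
depth[100:300, 200:320] = 1500.0

# Assumed mask: here it is derived from depth only to keep the sketch self-contained.
mask = depth < 1800
masked = apply_mask(depth, mask)

# Round trip through an in-memory PNG to check that the pixel values are unchanged.
buf = io.BytesIO()
save_depth_mm(masked, buf)
buf.seek(0)
restored = np.array(Image.open(buf))
```

If `restored` no longer matches `masked`, the depth was probably rescaled or truncated somewhere before saving.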

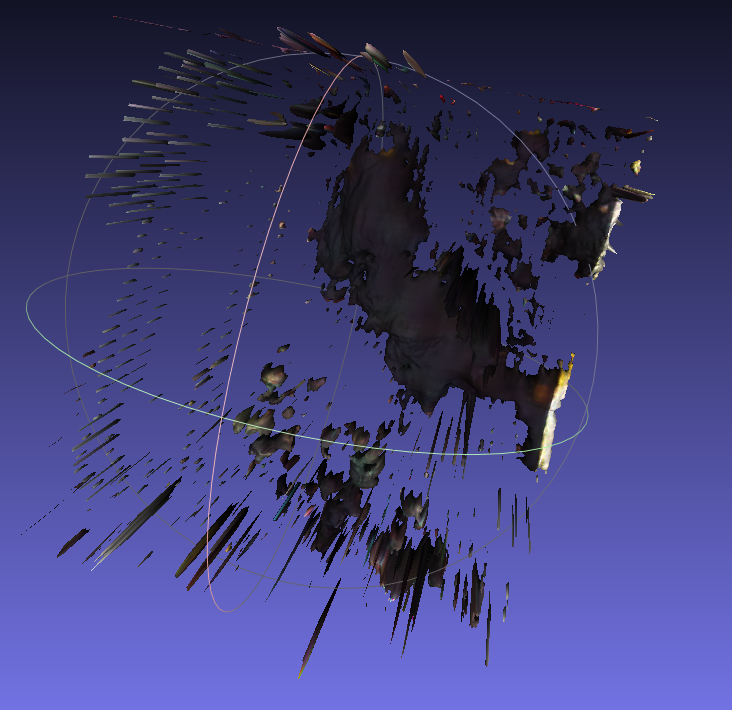

My results are not very promising, but at least the output looks like a human:

inputs.zip

I don't know if this is the right way to capture depth images, but the results are slightly better. I am really interested in how to improve my results, so any suggestions would be appreciated!
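
One quick way to check whether a depth map still holds millimetre values is to look at its dtype and the range of its non-zero pixels. This is only a sketch: `summarize_depth` and `looks_like_millimetres` are hypothetical helpers, and the 500 to 4500 mm window is my assumption about the usable Kinect V2 range, so adjust it for your setup.

```python
import numpy as np


def summarize_depth(depth):
    """Report shape, dtype and the range of valid (non-zero) depth pixels."""
    valid = depth[depth > 0]
    return {
        "shape": depth.shape,
        "dtype": str(depth.dtype),
        "valid_fraction": float(valid.size) / depth.size,
        "min_valid": int(valid.min()) if valid.size else None,
        "max_valid": int(valid.max()) if valid.size else None,
    }


def looks_like_millimetres(depth, near=500, far=4500):
    """Heuristic: the median valid depth should fall inside the assumed sensor range."""
    valid = depth[depth > 0]
    if valid.size == 0:
        return False
    median = float(np.median(valid))
    return near <= median <= far


# Synthetic 16-bit depth map with a subject at 1500 mm on an empty background.
example_mm = np.zeros((424, 512), dtype=np.uint16)
example_mm[100:300, 200:320] = 1500

# The same map squashed to 8 bits, as happens when depth is saved like a grayscale photo.
example_8bit = (example_mm / 4500 * 255).astype(np.uint8)

print(summarize_depth(example_mm))
print(looks_like_millimetres(example_mm), looks_like_millimetres(example_8bit))
```

A map that fails this check has likely been normalized for display, so the network would see distances that are far too small.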


Hi, Wang

I really appreciate your great work!

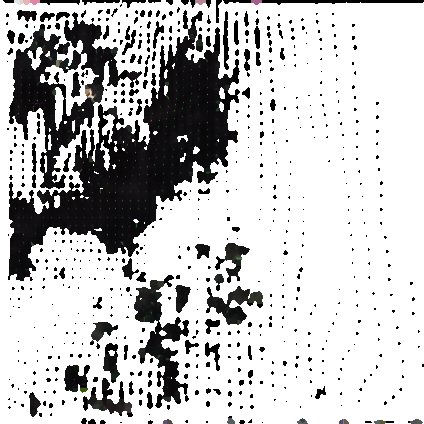

I want to run NormalGAN on my own Kinect V2 data, but I ran into a problem: the output is terrible and does not look like a human at all. I tried feeding many frames into NormalGAN and also did some simple denoising, but the outputs stayed nearly the same... (T_T)

My color map: size 512 × 424, type CV_8UC3 (24-bit). My depth map: size 512 × 424, type CV_16UC1 (16-bit).

Here are my maps and output:

Here are the cz0 and cz1:

Your NormalGAN is like magic, and I really want to get it working well on my data, so I hope you can give me some advice. Thanks a lot!

Best wishes