My voxelization implementation is based on this source, which gives good insight into the subject.

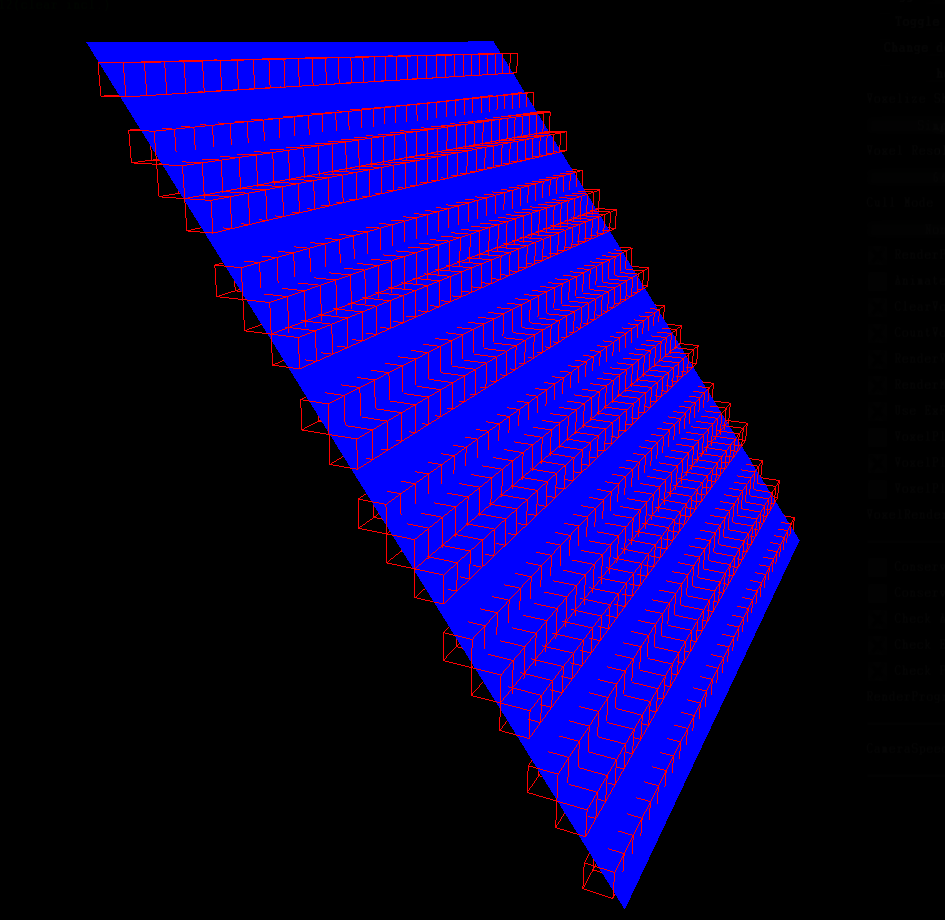

Voxelization is about as simple as rendering an object to the screen, except that instead of writing color to the screen buffer, we collect the depth value and write to a volume buffer. The problem arises when the depth gradient of a triangle is steep (ddx(depth) > 1.0 || ddy(depth) > 1.0): neighboring pixels then land more than one voxel apart in depth, and "cracks" form in the resulting voxel volume.

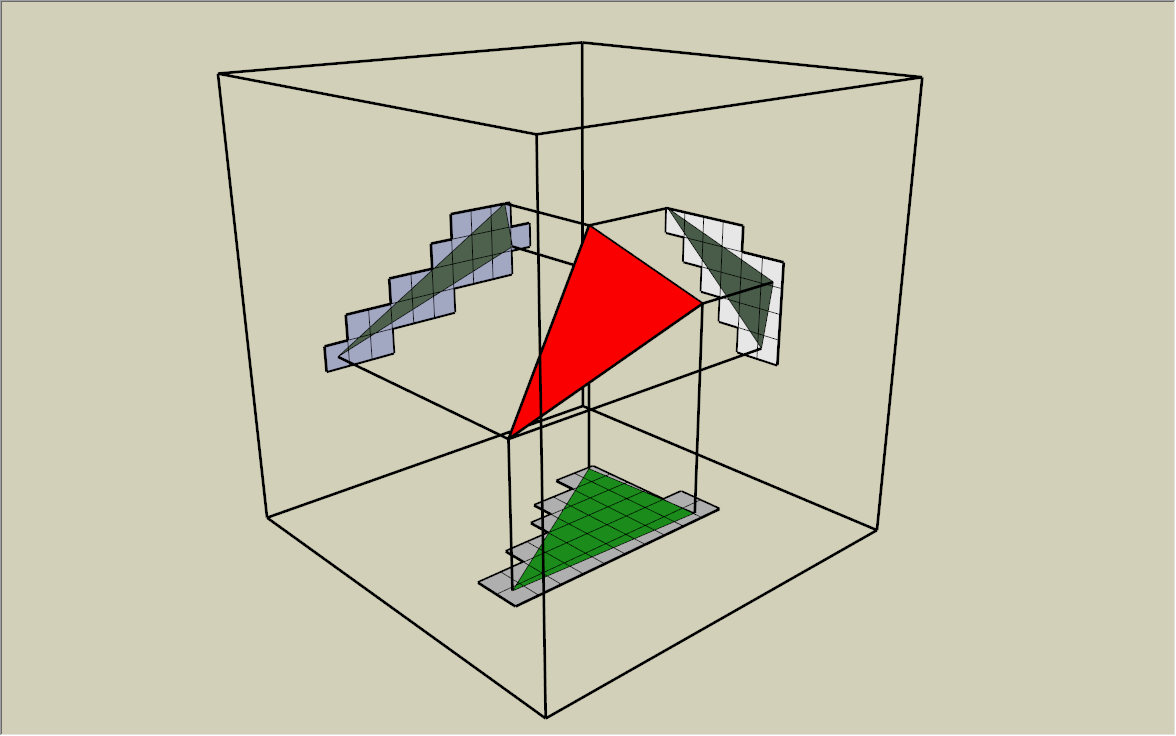
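To make the crack condition concrete, here is a minimal 2D sketch, not the project's code. It assumes a surface whose depth is `slope * x`, sampled once per pixel column at the pixel center, with one voxel written per pixel; the function names are made up for illustration.

```python
import math

def voxelize_slope(slope, width):
    """Depth layer written for each pixel column of a surface depth = slope * x.

    Assumption: one sample per pixel, taken at the pixel center (x + 0.5).
    """
    return [math.floor(slope * (x + 0.5)) for x in range(width)]

def count_skipped_layers(layers):
    """Count depth layers jumped over between neighboring pixels (the cracks)."""
    return sum(max(abs(b - a) - 1, 0) for a, b in zip(layers, layers[1:]))

shallow = voxelize_slope(0.5, 8)  # depth gradient below 1.0
steep = voxelize_slope(2.5, 8)    # depth gradient above 1.0

print(shallow, count_skipped_layers(shallow))
print(steep, count_skipped_layers(steep))
```

With a gradient below 1.0, neighboring pixels always write to the same or an adjacent layer, so nothing is skipped. Above 1.0, every step in x jumps over at least one layer, which is what shows up as a crack.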

To solve this issue, we just need to project each triangle onto the plane where its projected area is the largest. Done naively, that means 3 rendering passes, one for each projection axis.

The nice thing about the geometry shader is that we can combine all 3 rendering passes into one. Because the voxel volume is a cube and the projection axes X, Y, Z are orthogonal to each other, we only need to swizzle the X, Y, Z components of the vertex position in voxel space. This is easy to do in the geometry shader: calculate the triangle normal and select the corresponding projection axis. You can see it here: https://github.com/Looooong/Unity-SRP-VXGI/blob/5e3acd7f042a828883cf19dde947c38aa2516a2f/Runtime/Shaders/Basic.shader#L206-L257
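As a rough CPU-side illustration of the axis selection, here is a sketch in Python rather than the actual shader code. It relies on the fact that the area of a triangle projected along axis i is half of |n_i|, where n is the cross product of two edges, so the largest normal component picks the largest projection. The helper names and the exact swizzle order are assumptions for illustration; the linked shader may order the components differently.

```python
def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dominant_axis(v0, v1, v2):
    """Index (0=X, 1=Y, 2=Z) of the axis with the largest projected area."""
    n = cross(sub(v1, v0), sub(v2, v0))
    mags = [abs(c) for c in n]
    return mags.index(max(mags))

def swizzle(p, axis):
    """Reorder components so the chosen axis ends up as depth (last component).

    Assumed convention: cyclic rotation, not necessarily what the shader uses.
    """
    x, y, z = p
    if axis == 0:
        return (y, z, x)
    if axis == 1:
        return (z, x, y)
    return (x, y, z)

# A triangle lying in the YZ plane faces the X axis.
tri = [(0, 0, 0), (0, 1, 0), (0, 0, 1)]
axis = dominant_axis(*tri)
projected = [swizzle(v, axis) for v in tri]
print(axis, projected)
```

Because the selection is per triangle, every triangle is rasterized exactly once along its best axis, which is why a single pass is enough when a geometry shader is available.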

As for the problem with the Metal API: without geometry shaders, we can just use 3 rendering passes to voxelize the scene, which might triple the processing time. I ran the GPU profiler, and the processing time of the voxelization stage with the geometry shader is quite small compared to the other processing stages. So I don't think tripling it would matter much.

If I were to implement this in a compute shader, I suspect it would add more complexity to the project and re-invent the wheel. And I'm a simple man, I hate complexity (╯°□°)╯︵ ┻━┻

...or at least support compute shaders as an alternative...

...because (a) it'll work on Metal / Mac, and (b) the performance of geometry shaders sucks

https://forum.unity.com/threads/ios-11-metal2-has-no-geometry-shader.499676/#post-3315734

https://stackoverflow.com/questions/50557224/metal-emulate-geometry-shaders-using-compute-shaders