I agree state is impossible to reason about in star/. I think there's room to consolidate your proposal (maybe?). As well as plenty of bike-shedding over names :)

Each set on inputs (star_job, controls, pgstar) should just be moved to their own s% struct (star_job is already done. So that would be a s%star_job, s%controls, s%pgstar. This would be mostly just to tidy things up and make clearer what something is. That handles the 'meta' option. We can also put all the pgstar data in a s% pgstar struct.

Things like v_flag should just get moved into s% star_job as that's the place it is controlled from.

I think for the other state the better way(?) is think about things are:

- Things that must survive between steps (xa, xh): s% state

- Things that are valid only for that timestep (lnR, R, eps_mdot): s% step.

- Things that need saving between steps (*_start or mlt_vc_old): s%start

If step and start where the same type we could just do some pointer juggling so that at the end of the step we just move the s% step pointer to s%start and make a new s% step struct. That way s% step must always be reset for each step and start is just the last step (some stuff in start might need to be de-allocated to save memory).

For things you call output we do already have the ability to just call into the history/profile routines to get them (star_get_history_output and star_get_profile_output) and for things like center_ye do they even need to be stored? Should we just say you always need to do a lookup to get them?

Intro

I've been trying to understand what makes

stateso tricky in MESA/star. This is my attempt at expressing what's wrong, and what we might do about it.Everything here has some caveats, the biggest of which is a 'so far as I know' that should be attached to every statement about MESA.

Current State

There are at least six different kinds of state that live in

star_data:star_data_step_input.inc.xh(structure),xa(composition),mlt_vc(for TDC).s%lnRis a copy ofs%xh(i_lnR). Presumably this is for convenience and code clarity. Note that these are copies and not pointers.eoscalls or intermediate calculations likecsoundandz53bar.There are also at least three different problems we routinely hit with state:

starperforms iterations, starts a step, finishes a step, retries, model writing/reading, etc. This was a major source of bugs in the development of TDC, and even determining when a particular piece of state gets updated is challenging/time-consuming.eps_mdot, where at one points%Rands%lnRgot out of sync.Proposed Solution

I am not sure of the best solution here, but the best I've come up with is to the data inside

star_datainto six sub-types:metastructstarteaseinteroutputThese correspond to the different kinds of state above.

A few examples:

s%v_flagbecomess%meta%v_flags%xabecomess%struct%xas%lnRbecomess%ease%lnRs%mlt_vc_oldbecomess%start%mlt_vcs%eps_mdotbecomess%inter%eps_mdots%center_yebecomess%output%center_yeThese sub-types help organize the logic, at the cost of being more verbose. But for instance you could pass them directly to subroutines, so a routine that calculates outputs could be passes

s%output->oand then you write too%center_ye.We can also attach rules to some of the sub-types:

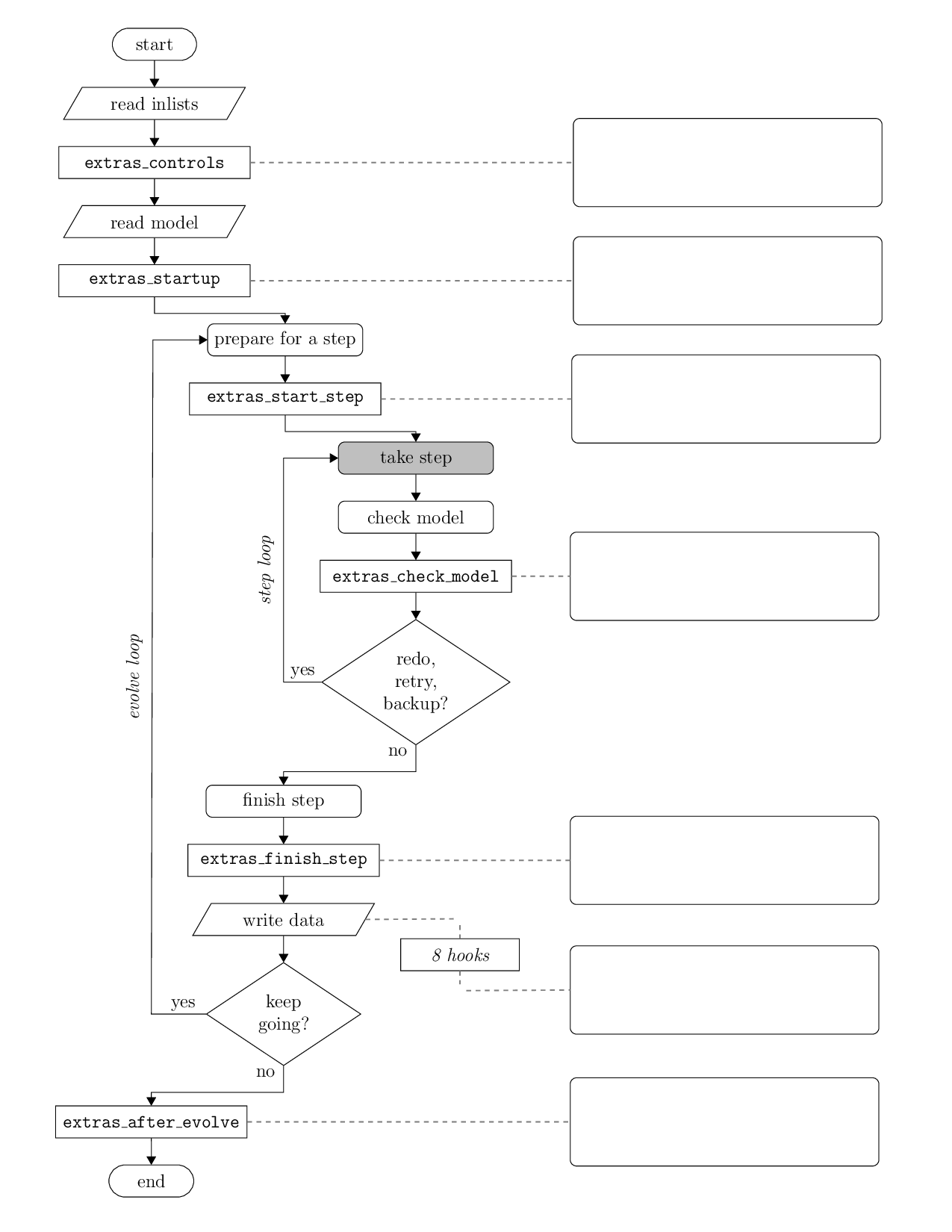

metaonly gets touched byrun_star_extrasand initialization routines. Flags don't get changed by the rest ofstar.structonly gets modified by the solver. In some cases this just means copying an intermediate variable (e.g. there can be anmlt_vcvariable that lives ininter, but the 'ground truth' will always be the version the solver writes intostruct). This ensures that you know for sure thatstructdata was last updated at the end of the most recent iteration.startvariables only get set at the start of a step or by initialization routines (including model reading).easevariables would be written once per iteration, always at the end of a solver call. This ensures that they remain just convenient places to put copies of structure variables and/or one-off derived quantities likeeosoutputs.intervariables would be a sort of catch-all for state we can't easily put in the other buckets. The goal should be for this to be a small category.outputvariables would be written once per step. They would never be read except by the profile/history/pgstar/model writing routines. We could enforce this with a derived type, or else check for it with a linter.I think this would make the state much more manageable, and make it a lot clearer what gets modified where.

I'd be very interested to hear what others think though!