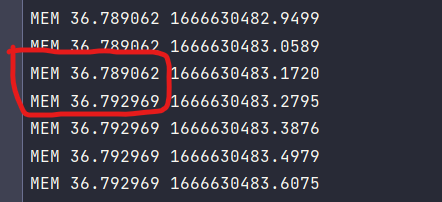

Does your result show memory usage between two different runs of this task? If so, this most likely is not an issue: the accumulation of memory is actually expected.

By default, Rocketry logs task events (i.e. when a task started, succeeded, failed, etc.) to an in-memory list. This is a fundamental part of Rocketry, as most of the scheduling is based on whether a task started or finished within a given period of time. The condition `every` works this way too (though some optimization is in place by default).
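To illustrate why this kind of logging grows memory over time, here is a minimal sketch using only the standard library. It is not Rocketry's actual implementation: `ListHandler`, `simulate_runs` and the logger name `demo.task` are hypothetical names made up for this example. It only shows that a handler which stores every record in a list keeps growing with each task run, and that disabling the logger stops the growth.

```python
import logging


class ListHandler(logging.Handler):
    """Hypothetical handler that keeps every log record in a plain list."""

    def __init__(self):
        super().__init__()
        self.records = []

    def emit(self, record):
        # Nothing is ever evicted, so the list grows with every record.
        self.records.append(record)


def simulate_runs(n_runs, disabled=False, logger_name="demo.task"):
    """Log a 'run' and a 'success' record per simulated task run.

    Returns the number of records the in-memory handler ended up holding.
    """
    logger = logging.getLogger(logger_name)
    logger.setLevel(logging.INFO)
    logger.propagate = False      # keep the demo quiet on stderr
    logger.disabled = disabled    # same switch as used on "rocketry.task"
    handler = ListHandler()
    logger.addHandler(handler)
    try:
        for _ in range(n_runs):
            logger.info("run")
            logger.info("success")
    finally:
        # Restore the logger so repeated calls start from a clean state.
        logger.removeHandler(handler)
        logger.disabled = False
    return len(handler.records)


if __name__ == "__main__":
    print(simulate_runs(10))                 # two records per run
    print(simulate_runs(100))                # grows linearly with the runs
    print(simulate_runs(10, disabled=True))  # a disabled logger stores nothing
```

The number of stored records grows linearly with the number of runs, which is the pattern a memory profiler would report as steadily increasing usage.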

What do the results show if you disable the logger?

    import logging

    from memory_profiler import profile
    from rocketry import Rocketry
    from rocketry.conditions.api import scheduler_cycles
    from rocketry.conds import every

    # Disable the task logger so that task events are not logged
    logger = logging.getLogger("rocketry.task")
    logger.disabled = True

    app = Rocketry()

    @profile
    @app.task(every("1 seconds"), execution="async")
    async def job():
        print(1)

    if __name__ == '__main__':
        # Shut down after more than 10 scheduler cycles
        app.session.config.shut_cond = scheduler_cycles(more_than=10)
        app.run()

How did you run the memory_profiler? I tried to reproduce this but couldn't get any output from the library.

Steps to reproduce the behavior.

View the dat file in the same directory; the memory leak will be visible there.