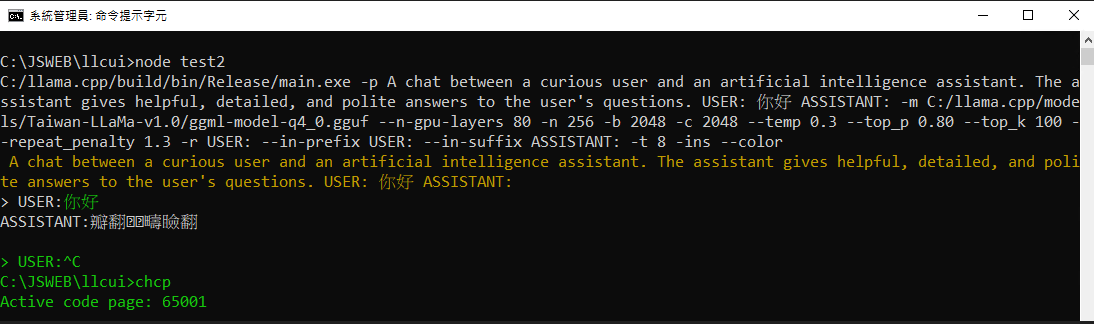

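It looks a lot like an encoding problem with cmd itself. Could you try running `chcp 65001` first to switch the code page, and then run the program again?

Sorry, I see at the bottom of your screenshot that you have already run chcp.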

edenlin-uj closed this issue 9 months ago.


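@edenlin-uj The problem is probably caused by the `--color` parameter. Also, since you have already run `chcp 65001`, converting the output to UTF-8 with iconv should be enough. However, on Windows you will still often get characters that cannot be displayed. This comes from the BPE tokenizer: Chinese characters are mostly output byte by byte, and I'm not sure whether passing those individual bytes through iconv causes problems.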
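To illustrate the byte-by-byte problem, here is a minimal sketch. It assumes the Node.js side receives the model's output as raw byte chunks, for example from a child process's stdout (the asker's actual code is not shown in the thread). Common Chinese characters take 3 bytes each in UTF-8, so decoding every chunk on its own turns partial characters into U+FFFD replacement characters. Node's built-in `StringDecoder` instead holds incomplete sequences until the remaining bytes arrive.

```javascript
const { StringDecoder } = require("string_decoder");

// Common Chinese characters take 3 bytes each in UTF-8, so "你好" is 6 bytes.
const bytes = Buffer.from("你好", "utf8");

// Simulate output that arrives one byte per chunk, as can happen when a
// byte-level BPE tokenizer emits one character over several tokens.
const chunks = [...bytes].map((b) => Buffer.from([b]));

// Naive: decode each chunk independently; incomplete sequences become U+FFFD.
const naive = chunks.map((c) => c.toString("utf8")).join("");

// Streaming: StringDecoder keeps incomplete multi-byte sequences buffered
// until the remaining bytes arrive.
const decoder = new StringDecoder("utf8");
const streamed = chunks.map((c) => decoder.write(c)).join("") + decoder.end();

console.log(naive.includes("\uFFFD")); // true
console.log(streamed); // 你好
```

For a child process, calling `child.stdout.setEncoding("utf8")` gives the same buffering, because Node's readable streams decode through a `StringDecoder` internally.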

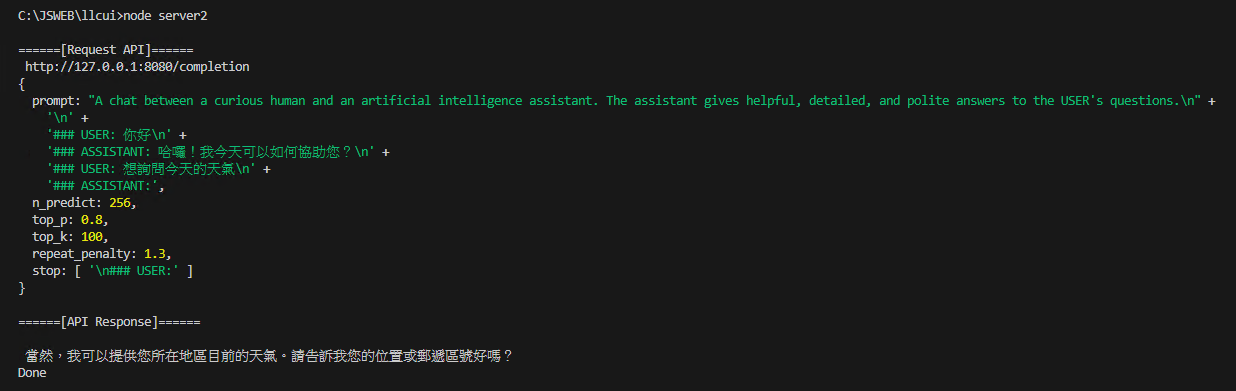

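If you want to integrate with Node.js, I personally recommend llama.cpp's server.exe. Its parameters are largely the same as main's, but by default it starts an LLM service on 127.0.0.1:8080, and http://127.0.0.1:8080/ serves a web GUI you can interact with. You can follow how that page sends its requests to build your own integration. For details, see the llama.cpp README.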
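The server can also stream the reply while it is being generated. Here is a minimal sketch of consuming that stream from Node.js. It assumes Node.js 18+ (global `fetch` and `TextDecoder`), and it assumes that `/completion` with `"stream": true` answers with Server-Sent Events lines of the form `data: {...}` carrying a `content` field; check this against the llama.cpp server README for your version. `createSseParser` and `streamCompletion` are hypothetical helper names. The demo at the bottom feeds canned chunks so the parser can be tried without a running server.

```javascript
// Hypothetical helper: incrementally parse Server-Sent Events text and
// pass the `content` of each `data: {...}` event to onContent.
function createSseParser(onContent) {
  let buffer = "";
  return function push(textChunk) {
    buffer += textChunk;
    let newline;
    // Handle complete lines only; a partial trailing line stays buffered.
    while ((newline = buffer.indexOf("\n")) !== -1) {
      const line = buffer.slice(0, newline).trim();
      buffer = buffer.slice(newline + 1);
      if (!line.startsWith("data:")) continue;
      const event = JSON.parse(line.slice("data:".length).trim());
      if (typeof event.content === "string") onContent(event.content);
    }
  };
}

// Hypothetical helper: stream a completion from the local server.
// Assumes the streaming response format described above.
async function streamCompletion(prompt, onContent) {
  const res = await fetch("http://127.0.0.1:8080/completion", {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ prompt, n_predict: 256, stream: true }),
  });
  if (!res.ok) throw new Error(`HTTP ${res.status}`);
  const decoder = new TextDecoder("utf-8");
  const push = createSseParser(onContent);
  for await (const chunk of res.body) {
    // stream: true keeps incomplete multi-byte UTF-8 sequences buffered.
    push(decoder.decode(chunk, { stream: true }));
  }
  push(decoder.decode()); // flush any remaining bytes
}

// Offline demo: one event is split across two network chunks.
const pieces = [];
const push = createSseParser((c) => pieces.push(c));
push('data: {"content":"哈","stop":false}\n\ndata: {"con');
push('tent":"囉","stop":false}\n\n');
console.log(pieces.join("")); // 哈囉
```

Parsing only complete lines matters for the same reason as the UTF-8 buffering: neither a JSON event nor a Chinese character is guaranteed to arrive in a single network chunk.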

Thanks for the reply, I'll give it a try! If it works out, I'll share the results here too.

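Thanks to @PenutChen for the answer! In the end I went with the server.exe approach, and that was the only way I could receive the Chinese text completely without garbled characters. Here is a simple implementation I'm sharing with everyone: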

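```bat
C:/llama.cpp/build/bin/Release/server.exe -m C:/llama.cpp/models/Taiwan-LLaMa-v1.0/ggml-model-q4_0.gguf --n-gpu-layers 80 -t 8 -b 2048 -c 2048
```

```javascript
// Requires Node.js 18+ for the built-in global fetch.
// Assumes server.exe (above) is already running on 127.0.0.1:8080.

// System instruction placed at the start of every prompt.
const instruction = `A chat between a curious human and an artificial intelligence assistant. The assistant gives helpful, detailed, and polite answers to the USER's questions.`;

// Previous turns, used as chat history in the prompt.
const chat = [
  { USER: "你好", ASSISTANT: "哈囉!我今天可以如何協助您?" }, // "Hello" / "Hi! How can I help you today?"
];

async function main() {
  const question = "想詢問今天的天氣"; // "I'd like to ask about today's weather"
  const prompt = format_prompt(question);
  const answer = await LLM(prompt);
  console.log(answer);
}

// Build the prompt: instruction, chat history, then the new question,
// ending with "### ASSISTANT:" so the model writes the reply.
function format_prompt(question) {
  const history = chat
    .map((m) => `\n### USER: ${m.USER}\n### ASSISTANT: ${m.ASSISTANT}`)
    .join("\n");
  return `${instruction}\n${history}\n### USER: ${question}\n### ASSISTANT:`;
}

// Send the prompt to the server's /completion endpoint and return the
// generated text ("" on error).
async function LLM(prompt) {
  const api_url = "http://127.0.0.1:8080/completion";
  const body = {
    prompt: prompt,
    n_predict: 256, // maximum number of tokens to generate
    top_p: 0.8,
    top_k: 100,
    repeat_penalty: 1.3,
    stop: ["\n### USER:"], // stop when the model starts a new user turn
  };

  try {
    console.log("\n======[Request API]======\n", api_url);
    console.log(body);

    const res = await fetch(api_url, {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify(body),
    });
    if (!res.ok) throw new Error(`HTTP ${res.status} ${res.statusText}`);

    // res.json() decodes the whole body as UTF-8, so Chinese text arrives intact.
    const res_json = await res.json();
    console.log("\n======[API Response]======\n");
    return res_json.content;
  } catch (err) {
    console.log(`Error: ${err.message}`);
  }
  return "";
}

main()
  .then(() => console.log("Done"))
  .catch((ex) => console.log(ex.message));
```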

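Environment: Windows 10

When I interact with llama.cpp in a Command Prompt window, Chinese text displays fine, but when I go through Node.js the output comes out garbled… Does anyone know how to fix this? :(

Here is the code I wrote:

Image link: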