Well apparently the shape of train_labels is (None, ..., None, 1). So it depends on what you want to do.

There are basically two ways to encode a label, for example class 2: either as the integer 2 (sparse) or as the vector [0, 0, 1, 0, ..., 0, 0] (categorical, i.e. one-hot encoded).

Depending on the encoding you use, you need to choose the following to match it:

- the activation function (of the last layer),

- loss function (and metrics).
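To illustrate the difference, here is a minimal NumPy sketch of the two encodings and the cross-entropy form each one pairs with. The labels and the "softmax outputs" are made up for the example, and this is plain real-valued cross-entropy, not the cvnn loss itself.

```python
import numpy as np

num_classes = 10

# Sparse encoding: one integer class id per sample.
sparse_labels = np.array([2, 0, 7])            # shape (3,)

# Categorical (one-hot) encoding: one row of length num_classes per sample.
one_hot_labels = np.eye(num_classes)[sparse_labels]   # shape (3, 10)

# Made-up softmax outputs standing in for the model's last layer.
rng = np.random.default_rng(0)
logits = rng.normal(size=(3, num_classes))
probs = np.exp(logits) / np.exp(logits).sum(axis=1, keepdims=True)

# Categorical cross-entropy expects one-hot labels with last dimension 10.
categorical_ce = -(one_hot_labels * np.log(probs)).sum(axis=1)

# Sparse cross-entropy expects integer labels and indexes the probabilities.
sparse_ce = -np.log(probs[np.arange(len(sparse_labels)), sparse_labels])

# Both give the same value, as long as each loss gets the encoding it expects.
assert np.allclose(categorical_ce, sparse_ce)
```

The point is that the two losses compute the same thing, but each one only works with its own label shape; feeding sparse labels to a categorical loss is what produces a shape mismatch.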

I believe you are mixing the two encodings here.

In particular:

ComplexAverageCrossEntropy is made for categorical encoding (so the last dimension of the labels should be 10), but your train labels have size 1 in the last dimension, not 10. You can probably solve the issue by applying tf.keras.utils.to_categorical to the labels.
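As a rough sketch of that conversion, assuming your labels are integer class ids with a trailing axis of size 1 (the small array below is a stand-in for your real train_labels):

```python
import numpy as np
import tensorflow as tf

# Stand-in for the real labels: integer class ids, shape (N, 1).
train_labels = np.array([[3], [0], [9], [2]])

# to_categorical drops the trailing axis of size 1 and one-hot encodes
# the class ids into num_classes columns.
train_labels_one_hot = tf.keras.utils.to_categorical(train_labels, num_classes=10)

print(train_labels.shape)          # (4, 1)
print(train_labels_one_hot.shape)  # (4, 10)
```

You would then pass the one-hot labels to model.fit (and do the same for the test labels) while keeping 10 units in the last layer.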

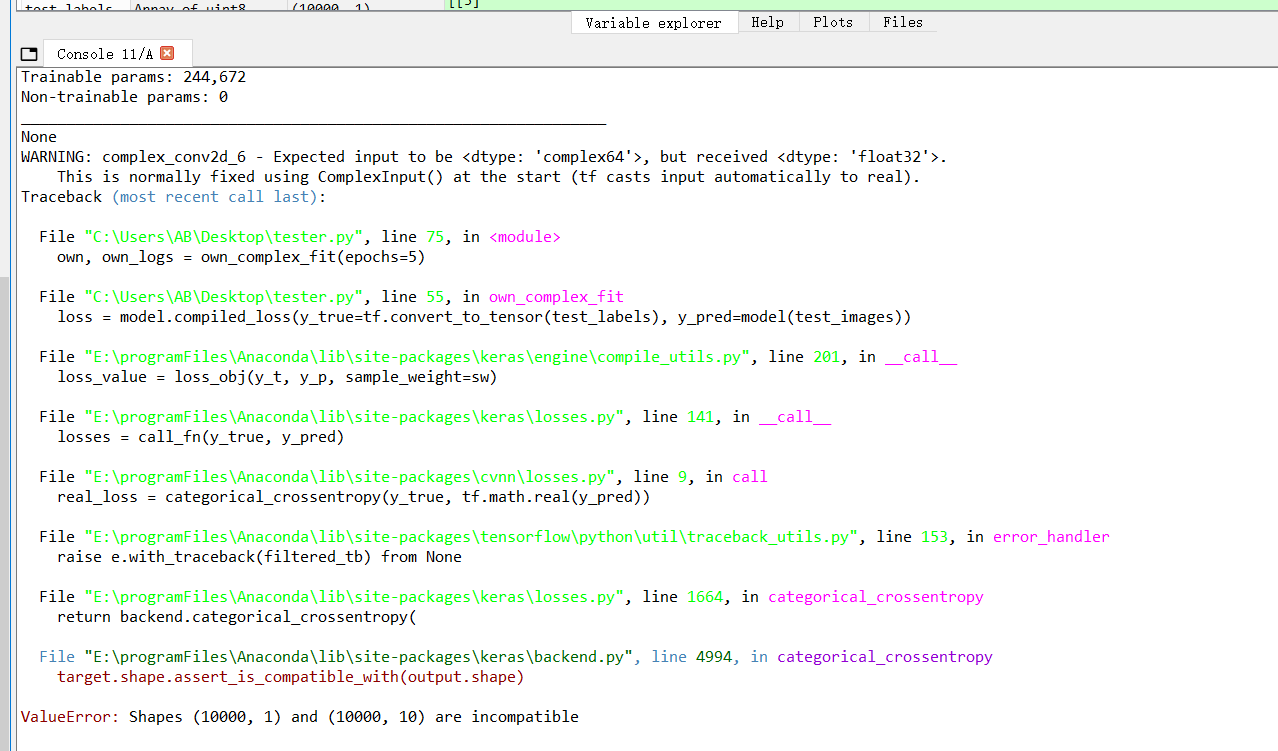

Hi, I have a new question related to this work. I'm trying to use complex inputs by changing the dtype to np.complex64:

train_images, test_images = train_images.astype(dtype=np.complex64) / 255.0, test_images.astype(dtype=np.complex64) / 255.0

I also set activation='crelu', kernel_initializer='ComplexGlorotUniform', init_technique='mirror', and compile the model with model.compile(optimizer='sgd', loss=losses.ComplexAverageCrossEntropy(), metrics=metrics.ComplexAccuracy()). But I encounter this error:

The complete modified code is copied below:

If I change the line

model.add(complex_layers.ComplexDense(10, activation=acti, kernel_initializer=init, use_bias=False, init_technique=init_tech))

to

model.add(complex_layers.ComplexDense(1, activation=acti, kernel_initializer=init, use_bias=False, init_technique=init_tech))

the error disappears, but the train and test accuracy stay at 0 and the model does not learn. It doesn't really make sense to simply change 10 to 1 anyway, since this example has 10 classes. What should I do to debug this issue properly? Many thanks for your help.

Originally posted by @annabelleYan in https://github.com/NEGU93/cvnn/issues/16#issuecomment-987927633