Hi @Weigaa,

The error indicates that the element types of the Torch tensor and the DALI output passed to `feed_ndarray` don't match: DALI returns int64, while `torch.zeros` creates float32 by default.

Your code should do something like:

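```python
import torch
import nvidia.dali.plugin.pytorch
from nvidia.dali import types

# Map each DALI element type to the matching torch dtype
to_torch_type = {
    types.DALIDataType.FLOAT: torch.float32,
    types.DALIDataType.FLOAT64: torch.float64,
    types.DALIDataType.FLOAT16: torch.float16,
    types.DALIDataType.UINT8: torch.uint8,
    types.DALIDataType.INT8: torch.int8,
    types.DALIDataType.INT16: torch.int16,
    types.DALIDataType.INT32: torch.int32,
    types.DALIDataType.INT64: torch.int64,
}

# First sample of the first pipeline output (pipe_out comes from your pipe_gds() run)
dali_tensor = pipe_out[0][0]

# Allocate the destination with the dtype DALI produced instead of the float32 default;
# file[1] (the target shape) and device come from your own code
torch_type = to_torch_type[dali_tensor.dtype]
Inputimages = torch.zeros(file[1], dtype=torch_type, device=device)

# Copy the DALI tensor into the preallocated torch tensor
nvidia.dali.plugin.pytorch.feed_ndarray(dali_tensor, Inputimages)
```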
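The same mismatch can be reproduced with plain PyTorch. In this sketch `source` is a hypothetical stand-in for the int64 data DALI returned: `torch.zeros` without `dtype=` allocates float32 (under default settings), while passing the source dtype yields a matching buffer. Since `feed_ndarray` writes every element of the destination, `torch.empty` should work in place of `torch.zeros` and skips the zero-fill.

```python
import torch

# Hypothetical stand-in for the int64 output DALI returned
source = torch.arange(6, dtype=torch.int64).reshape(2, 3)

# Without dtype=, torch.zeros uses the default dtype (float32 unless changed)
default_buf = torch.zeros(source.shape)
print(default_buf.dtype, source.dtype)  # torch.float32 torch.int64

# Allocating with the source dtype gives a buffer whose type matches the data
matched_buf = torch.empty(source.shape, dtype=source.dtype)
matched_buf.copy_(source)
```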

Hi guys. I tried to combine DALI with the `torch.autograd.graph.saved_tensors_hooks(pack_hook, unpack_hook)` API to speed up offloading intermediate feature maps to SSDs and prefetching them back. During forward propagation I convert the PyTorch tensor to NumPy and store it on the SSD; during backward propagation I use `pipe_gds()` to load it back to the GPU and then convert the DALI tensor to a PyTorch tensor with `nvidia.dali.plugin.pytorch.feed_ndarray()`. When processing the feature maps generated by the convolution layer, errors occur. The error output is as follows.

I'm not sure whether this is caused by DALI or by the PyTorch API: when I use `torch.load()` directly to read a PyTorch tensor file, no errors occur. Could you suggest some adjustments?

The reproducible code is as follows:

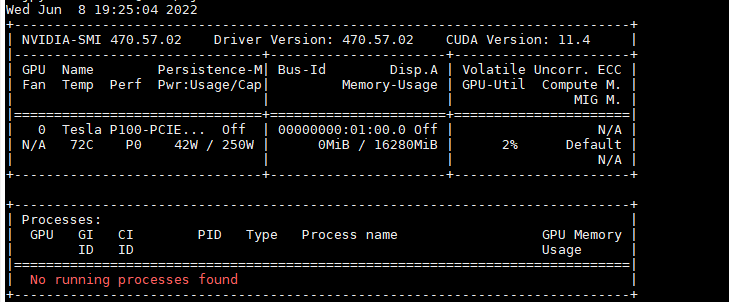

My GPU is an NVIDIA P100, my PyTorch version is 1.11.0+cu113, and my DALI version is 1.14.
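For readers following the offloading approach described above, here is a minimal sketch of the hook pair. It swaps DALI's GDS reader for plain `np.save`/`np.load` so it runs without DALI (an assumption, not the setup from the post), and `OFFLOAD_DIR` is a hypothetical location. The point it illustrates is that the pack hook records each saved tensor's dtype and device and the unpack hook restores them, since the tensors autograd saves are not guaranteed to be float32.

```python
import os
import tempfile
import uuid

import numpy as np
import torch

# Hypothetical offload location; in the original setup this would live on the SSD.
OFFLOAD_DIR = tempfile.mkdtemp()


def pack_hook(tensor):
    """Write a saved tensor to disk and keep only what is needed to restore it."""
    path = os.path.join(OFFLOAD_DIR, f"{uuid.uuid4().hex}.npy")
    # .numpy() has no bfloat16 support, so such tensors would need extra handling.
    np.save(path, tensor.detach().cpu().numpy())
    # Record dtype and device so the restored tensor matches the original.
    return path, tensor.dtype, tensor.device


def unpack_hook(packed):
    """Read the tensor back and rebuild it with its original dtype and device."""
    path, dtype, device = packed
    array = np.load(path)
    return torch.from_numpy(array).to(device=device, dtype=dtype)


x = torch.randn(4, 3, requires_grad=True)
with torch.autograd.graph.saved_tensors_hooks(pack_hook, unpack_hook):
    y = (x * x).sum()  # the multiplication saves x, which passes through pack_hook
y.backward()  # unpack_hook reloads the saved x from disk here
```

File cleanup is left out for brevity; deleting inside `unpack_hook` would break a second backward pass with `retain_graph=True`.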