- Can the second flow be trained on the same data? If so, what is the advantage of adding more flows if the first one already produces good alignment? Yes, and they should be trained on the same data. Adding more flows gives the model more capacity and can produce better speech.

- In how many iterations can I expect the model to produce good alignment? In less than 24 hours on an NVIDIA V100 with batch size 1.

- If the loss plateaus for a long time and the alignment is not good, what should I do? If you're using a single step of flow, use it to warm-start a model with 2 steps of flow.

- Is validation loss representative of the alignment, and what validation loss did you achieve? Not necessarily; look at the alignment itself.

- If you only want good results for a single speaker, is it better to use Tacotron 2 instead, or does Flowtron add some stability with more speakers? (Please answer this one) Flowtron is better than Tacotron 2.

Hi, I appreciate your great work on Flowtron and loved the paper. I have gone through all the issues and the paper but still have trouble getting a proprietary female voice to produce good alignment. I will first list some assumptions I have so somebody can correct me if something is wrong (this list may also be useful to someone just starting).

How I have understood the process of training should be done:

What I am not sure about:

Now specific to my problem:

I have trained 2 separate models on the same data but with differently preprocessed text.

Model A

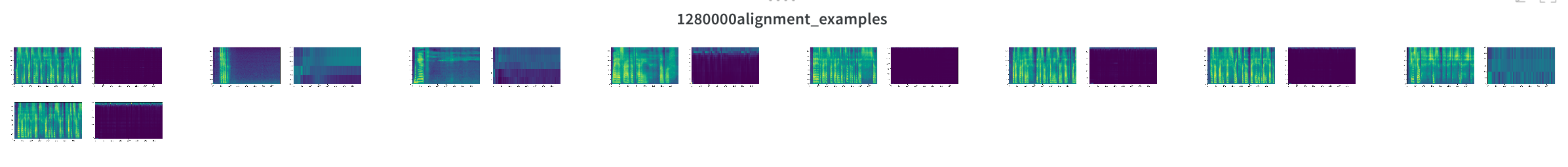

The first model (let's call it A) was warm-started from your flowtron_ljs.pt and trained on 3 datasets (one is LJSpeech and 2 are proprietary, about 40,000 sentences combined). The config.json and the run command are listed below. It was trained for 1,300,000 iterations over 5 days on 4x 1080 Ti and produces no alignment.

config.json:

    {
      "train_config": {
        "output_directory": "outdir",
        "epochs": 10000000,
        "learning_rate": 1e-4,
        "weight_decay": 1e-6,
        "sigma": 1.0,
        "iters_per_checkpoint": 5000,
        "batch_size": 1,
        "seed": 1234,
        "checkpoint_path": "",
        "ignore_layers": [],
        "include_layers": ["encoder", "embedding"],
        "warmstart_checkpoint_path": "",
        "with_tensorboard": true,
        "fp16_run": false
      },
      "data_config": {
        "training_files": "data/processed/combined/dataset.train",
        "validation_files": "data/processed/combined/dataset.test",
        "text_cleaners": ["flowtron_cleaners"],
        "p_arpabet": 0.5,
        "cmudict_path": "data/cmudict_dictionary",
        "sampling_rate": 22050,
        "filter_length": 1024,
        "hop_length": 256,
        "win_length": 1024,
        "mel_fmin": 0.0,
        "mel_fmax": 8000.0,
        "max_wav_value": 32768.0
      },
      "dist_config": {
        "dist_backend": "nccl",
        "dist_url": "tcp://localhost:54321"
      },
      "model_config": {
        "n_speakers": 3,
        "n_speaker_dim": 128,
        "n_text": 185,
        "n_text_dim": 512,
        "n_flows": 1,
        "n_mel_channels": 80,
        "n_attn_channels": 640,
        "n_hidden": 1024,
        "n_lstm_layers": 2,
        "mel_encoder_n_hidden": 512,
        "n_components": 0,
        "mean_scale": 0.0,
        "fixed_gaussian": true,
        "dummy_speaker_embedding": false,
        "use_gate_layer": true
      }
    }

command:

    python -m torch.distributed.launch --use_env --nproc_per_node=4 train.py -c config.json -p train_config.output_directory=outdir train_config.ignore_layers=["speaker_embedding.weight"] train_config.warm_checkpoint_path="models/flowtron_ljs.pt" train_config.fp16=true

Graphs: (disclaimer: all alignments are shown for a single proprietary speaker)

Model B

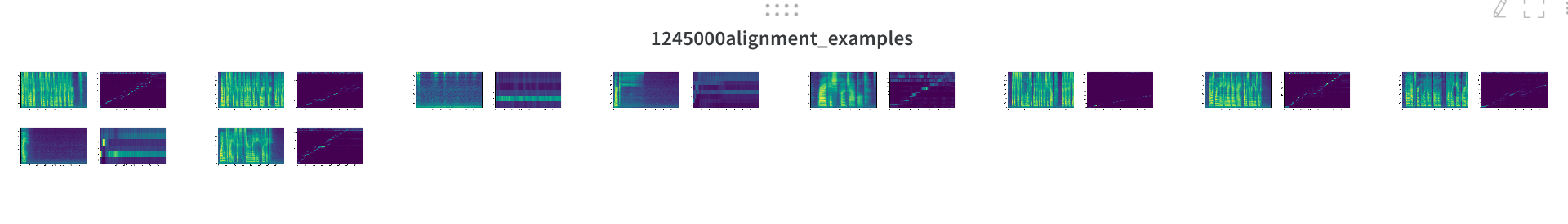

The second model (let's call it B) was warm-started from our Tacotron 2 checkpoint and trained on the same 3 datasets (one is LJSpeech and 2 are proprietary, about 40,000 sentences combined). These were phonemized with my custom preprocessor, so I turned off the preprocessing inside Flowtron. The config.json and the run command are listed below. It was trained for 1,300,000 iterations over 5 days on 4x 1080 Ti and produced slightly better alignment, but it is still not good.

    {
      "train_config": {
        "output_directory": "outdir",
        "epochs": 10000000,
        "learning_rate": 1e-4,
        "weight_decay": 1e-6,
        "sigma": 1.0,
        "iters_per_checkpoint": 5000,
        "batch_size": 1,
        "seed": 1234,
        "checkpoint_path": "",
        "ignore_layers": [],
        "include_layers": ["encoder", "embedding"],
        "warmstart_checkpoint_path": "",
        "with_tensorboard": true,
        "fp16_run": false
      },
      "data_config": {
        "training_files": "data/processed/combined/dataset.train",
        "validation_files": "data/processed/combined/dataset.test",
        "text_cleaners": ["flowtron_cleaners"],
        "p_arpabet": 0.5,
        "cmudict_path": "data/cmudict_dictionary",
        "sampling_rate": 22050,
        "filter_length": 1024,
        "hop_length": 256,
        "win_length": 1024,
        "mel_fmin": 0.0,
        "mel_fmax": 8000.0,
        "max_wav_value": 32768.0
      },
      "dist_config": {
        "dist_backend": "nccl",
        "dist_url": "tcp://localhost:54321"
      },

    }

command:

    python -m torch.distributed.launch --use_env --nproc_per_node=4 train.py -c config.json -p train_config.output_directory=outdir train_config.ignore_layers=["speaker_embedding.weight"] train_config.warm_checkpoint_path="models/checkpoint_70000_fav1" train_config.fp16=true

Graphs: (disclaimer: all alignments are shown for a single proprietary speaker)
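One thing that may be worth checking in both commands above: the `-p` overrides use `train_config.warm_checkpoint_path` and `train_config.fp16`, while the config.json shown lists `warmstart_checkpoint_path` and `fp16_run`. How train.py treats an override whose key is not in the config is not stated here, so the following is only a minimal sketch of a standalone check. The helper `unknown_overrides` is hypothetical and is not part of Flowtron.

```python
def unknown_overrides(config, overrides):
    """Return the dotted override keys that do not exist in the config dict."""
    missing = []
    for item in overrides:
        # Everything before the first "=" is the dotted key, e.g. train_config.fp16
        dotted_key, _, _ = item.partition("=")
        node = config
        for part in dotted_key.split("."):
            if isinstance(node, dict) and part in node:
                node = node[part]
            else:
                missing.append(dotted_key)
                break
    return missing


# Subset of the train_config keys from the config.json shown in this post.
config = {
    "train_config": {
        "output_directory": "outdir",
        "ignore_layers": [],
        "warmstart_checkpoint_path": "",
        "fp16_run": False,
    }
}

# The -p overrides from the Model A command.
overrides = [
    "train_config.output_directory=outdir",
    'train_config.ignore_layers=["speaker_embedding.weight"]',
    'train_config.warm_checkpoint_path="models/flowtron_ljs.pt"',
    "train_config.fp16=true",
]

print(unknown_overrides(config, overrides))
```

If the two flagged keys turn out to be silently ignored, the runs would not have been warm-started or run in fp16 as intended. That is an assumption to verify against train.py, not a confirmed diagnosis.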

Is something wrong, given that this took 5 days of training? Should I stop this run and continue from a checkpoint with 2 flows?
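For reference, the step from a 1-flow config to a 2-flow config that warm-starts from the 1-flow checkpoint could be sketched as below. This uses only key names from the config.json above (`n_flows`, `warmstart_checkpoint_path`). The helper `make_two_flow_config` and the checkpoint path are hypothetical, and whether other settings such as `include_layers` also need to change is not covered here.

```python
import copy
import json


def make_two_flow_config(config, one_flow_checkpoint):
    """Return a copy of config set up for 2 flows, warm-started from a 1-flow checkpoint."""
    new_config = copy.deepcopy(config)  # leave the original config untouched
    new_config["model_config"]["n_flows"] = 2
    new_config["train_config"]["warmstart_checkpoint_path"] = one_flow_checkpoint
    return new_config


# Only the keys relevant to this sketch; the real config.json has many more.
base_config = {
    "train_config": {"checkpoint_path": "", "warmstart_checkpoint_path": ""},
    "model_config": {"n_flows": 1},
}

# Hypothetical path to a checkpoint saved by the 1-flow run.
two_flow_config = make_two_flow_config(base_config, "outdir/model_1300000")

print(json.dumps(two_flow_config, indent=2))
```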