A note on repeatability and pseudo-random numbers

(I've also brought this up in #265.) It is usually recommended to conduct all experiments that involve pseudo-randomness in a way that ensures repeatability, by specifying the initial state of the random number generator that is used. (See, e.g., https://journals.plos.org/ploscompbiol/article?id=10.1371/journal.pcbi.1003285#s7.) To be fully deterministic, both the initial state of the pseudo-random number generator and the sequence of computation need to be fully specified. The latter is often sacrificed to optimize occupancy of the allocated resources. OpenMP's dynamic scheduling does this, and dask.array.random is also not deterministic (at least if the cluster differs between executions).
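Both ingredients can be illustrated with a minimal sketch in plain Python. The seed value and the helper `draw_positions` are arbitrary, hypothetical choices. The first half pins the initial state of the generator; the second half shows that the order of floating-point operations alone can change a result, which is effectively what dynamic scheduling varies.

```python
import random

SEED = 12345  # arbitrary seed chosen for illustration


def draw_positions(seed, n=5):
    """Draw n pseudo-random values from a generator with a fixed initial state."""
    rng = random.Random(seed)  # private generator, independent of global state
    return [rng.uniform(0.0, 1.0) for _ in range(n)]


# Same initial state, same sequence: the experiment is repeatable.
first_run = draw_positions(SEED)
second_run = draw_positions(SEED)
repeatable = first_run == second_run

# The sequence of computation matters too: floating-point addition is not
# associative, so summing the same numbers in a different order can differ.
x, y, z = 1.0e16, -1.0e16, 1.0
left_to_right = (x + y) + z  # 0.0 + 1.0
reordered = x + (y + z)      # y + z rounds back to -1.0e16
order_matters = left_to_right != reordered

print(repeatable, order_matters)
```

Fixing the seed is therefore necessary but not sufficient: a run that reduces over particles in a scheduler-dependent order can still differ in the last bits.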

I'm (slowly) giving up my resistance to this trend. But I do think that as soon as one enters the terrain of non-determinism, this has to be communicated very clearly.

Load balancing without tiling

Disclaimer: I really dislike the idea of adding the complexity of MPI, because the cost on the development side might be enormous, while there would only be benefit for users that have access to a (diminishing) subset of the existing scientific-computing infrastructure. Wall time from idea to final result is hardly ever determined by raw computational efficiency.

There are constraints on the Physics and Math side of Parcels that we can leverage to balance load without falling back to explicit domain decomposition: For any given distribution of particles, we can estimate the largest possible deformation after a given number of time steps. When parallelizing Parcels by distributing .execute() for segments of a ParticleSet, this can be used to construct heuristics for how to segment (or cluster) the particles, rather than the physical domain, to balance IO etc.
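A rough sketch of such a heuristic, in plain Python. All names here (`segment_by_longitude`, `padded_extent`, `max_speed`) are hypothetical and not part of the Parcels API. It assumes a known upper bound on particle speed, so that the largest possible displacement after `n_steps` steps of length `dt` is `max_speed * dt * n_steps`, and it ignores wrap-around at the dateline. Particles are grouped by position instead of tiling the domain, and each group gets the padded extent of the data it could touch.

```python
def segment_by_longitude(lons, n_segments):
    """Split particle indices into longitude-sorted groups of near-equal size."""
    order = sorted(range(len(lons)), key=lambda i: lons[i])
    base, extra = divmod(len(order), n_segments)
    segments, start = [], 0
    for k in range(n_segments):
        size = base + (1 if k < extra else 0)  # spread the remainder evenly
        segments.append(order[start:start + size])
        start += size
    return segments


def padded_extent(lons, segment, max_speed, dt, n_steps):
    """Longitude range a segment can reach, given a bound on displacement."""
    reach = max_speed * dt * n_steps  # largest possible displacement
    values = [lons[i] for i in segment]
    return min(values) - reach, max(values) + reach


# Hypothetical example: 7 particles, 3 segments, 100 steps of at most 0.01 degrees.
lons = [12.0, 3.5, 8.1, 3.9, 11.2, 7.7, 4.2]
segments = segment_by_longitude(lons, n_segments=3)
extents = [
    padded_extent(lons, s, max_speed=0.01, dt=1.0, n_steps=100) for s in segments
]
for seg, ext in zip(segments, extents):
    print(seg, ext)
```

Each segment could then be handed to a separate .execute() call that only loads field data within its extent. Equal particle counts are only a proxy for equal load, so a real heuristic would likely also weigh the size of each extent.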

OpenMP

This is low-hanging fruit. #265 looks very promising, and it is largely orthogonal to all other efforts towards distributing Parcels experiments. When distributing with Dask, we can still use internal parallelization of Parcels experiments with OpenMP. The same is true for MPI, where it would be possible to have hybrid jobs with MPI across nodes and OpenMP on shared-memory portions of the cluster.

Handling Scipy mode as a first-class citizen

(related: #556)

I think it is very important to make it as easy as possible to not use the built-in JIT functionality of Parcels. There are two reasons for this:

- Having a fully equivalent Python-only implementation helps developing and debugging.

- Separation of concerns: The task of optimizing numerical Python for different environments is tackled in many different places. There are Numpy swap-ins like bottleneck or bohrium, and other existing JIT solutions like numba that are very promising for efficiently using new architectures like GPUs.

Dask: Make it easy to distribute Parcels experiments to arbitrary resources

Using Dask for distributing Parcels to arbitrary resources is very promising. I would, however, strongly argue against adding any explicit Dask dependency to Parcels, and would instead prefer to consider Dask support as something that will also pave the way to other approaches to parallelization.

The next main goal in Parcels development is to obtain an efficient parallel version. To do so, let's present here the code structure, its strengths and weaknesses, and how we aim to tackle the different challenges. The point of this issue is to open the discussion so that we use the latest available libraries and best Python practices in Parcels development. This discussion follows on from previous Parcels developments (PR #265) and discussions (issue #17).

particleSet.execute() structure

Potential bottlenecks

Parcels profile

To better understand Parcels' general profile, different applications will be profiled. This can be done using cProfile from Python.

The first profile is a 2-month simulation of floating MP in the North Sea:

The results are available here: nemo_log0083_singlePrec.pdf
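For reference, profiling with cProfile can be sketched as follows. This is a generic example using only the standard library; `run_simulation` is a hypothetical stand-in for an actual Parcels run and is not the script that produced the results above.

```python
import cProfile
import io
import pstats


def run_simulation(n_particles=2000, n_steps=50):
    """Hypothetical stand-in for a Parcels run: shift all particles each step."""
    positions = [0.0] * n_particles
    for _ in range(n_steps):
        positions = [x + 0.1 for x in positions]
    return positions


# Profile only the call of interest.
profiler = cProfile.Profile()
profiler.enable()
result = run_simulation()
profiler.disable()

# Write the report to a string, sorted by cumulative time, top 10 entries.
buffer = io.StringIO()
stats = pstats.Stats(profiler, stream=buffer)
stats.sort_stats("cumulative").print_stats(10)
report = buffer.getvalue()
print(report)
```

Sorting by cumulative time shows which high-level calls dominate the run, which is usually the first thing to look at when deciding where to parallelize.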

Questions?

But this still implies loading contiguous data. So if one particle is located at 170° and another at -170°, should the full domain -170:170 be loaded? That would be annoying.

Parallel development

OpenMP option

This option was presented in PR #265. In the particle loop, the particles are distributed over different threads that share the same memory. The fieldset is shared by all threads.
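The shared-memory pattern can be illustrated in plain Python with a thread pool. This is only an analogy to the OpenMP approach of PR #265, not its implementation: `advect_chunk`, the field values, and the chunking are hypothetical. Because of the GIL, Python threads will also not speed up pure-Python arithmetic the way OpenMP threads speed up compiled kernels.

```python
from concurrent.futures import ThreadPoolExecutor

# Shared, read-only "fieldset": one velocity per integer grid cell (made-up data).
FIELD = [0.5, 1.0, 1.5, 2.0]


def advect_chunk(chunk, dt=0.1):
    """Advance each particle in the chunk by one Euler step using the shared field."""
    advanced = []
    for x in chunk:
        cell = min(int(x), len(FIELD) - 1)  # truncate to a cell index, clamp at the top
        advanced.append(x + FIELD[cell] * dt)
    return advanced


particles = [0.2, 1.4, 2.6, 3.1, 0.9, 2.2]
n_threads = 3

# Static split: thread k gets particles k, k + n_threads, k + 2 * n_threads, ...
chunks = [particles[k::n_threads] for k in range(n_threads)]

with ThreadPoolExecutor(max_workers=n_threads) as pool:
    results = list(pool.map(advect_chunk, chunks))  # map preserves chunk order

# Undo the strided split so the output order matches the input order.
advected = [None] * len(particles)
for k, chunk_result in enumerate(results):
    advected[k::n_threads] = chunk_result

print(advected)
```

With a fixed (static) split like this, each particle is always processed with the same arithmetic, so the result matches a serial run. Dynamic scheduling changes which thread handles which particle, which only matters for determinism once threads combine results or share a random number generator.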

dask option

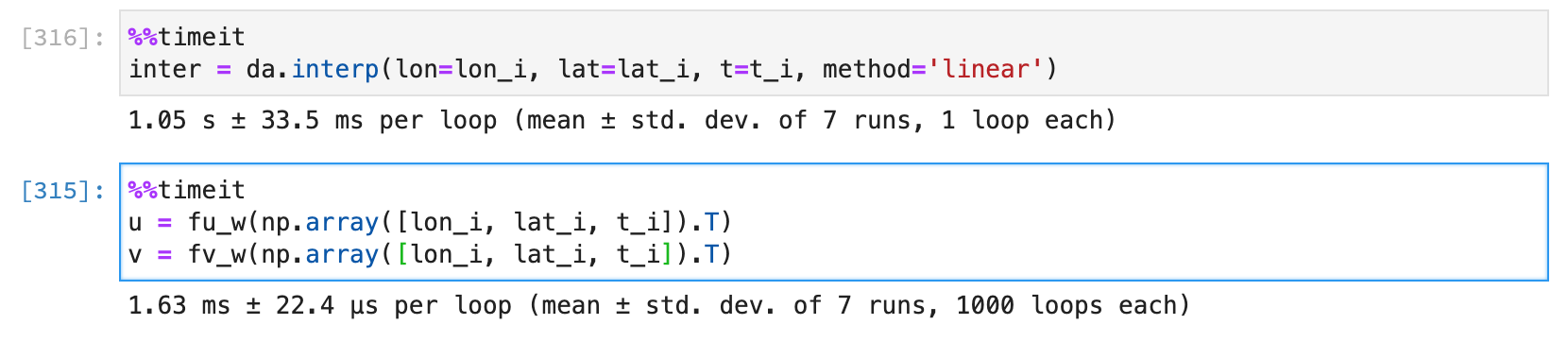

The Python way to automatically distribute the work between cores and nodes. See this example from @willirath: https://github.com/willirath/pangeo_parcels

But how to do it efficiently for large input data applications? Can we use dask with some control on the particle distribution? If so, is it a good idea? How to efficiently load the input data?

MPI option

This option requires the most development, but it gives a large degree of control over load balancing, i.e. how to distribute the particles over the different processors (see a simple proof of concept here: https://github.com/OceanParcels/bare-bones-parcels). The idea is the following: