Update:

I changed my input image size so that the height is a multiple of 2, so I no longer need these awkward padding operations.
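This note explains why the size change helps. It rests on an assumption, because the architecture isn't shown here: the network is taken to be U-Net-like, its encoder downsamples `n` times with 2x2 max pooling (which floors odd sizes), and its decoder concatenates skip connections. Under those conditions, the upsampled map lines up with its skip connection without padding only when the input size is divisible by `2**n`. For `n = 1` that just means "even". The helper names below are hypothetical:

```python
def next_padding_free_size(size: int, num_downsamples: int) -> int:
    """Smallest value >= size that is divisible by 2**num_downsamples,
    so no floor-halving stage ever sees an odd size."""
    if size < 1 or num_downsamples < 0:
        raise ValueError("size must be >= 1 and num_downsamples >= 0")
    factor = 2 ** num_downsamples
    # Ceiling division, then scale back up to the next multiple of 2**n
    return -(-size // factor) * factor


def halving_chain(size: int, num_downsamples: int) -> list:
    """Spatial sizes after each stride-2 stage, assuming flooring (e.g. 2x2 max pooling)."""
    sizes = [size]
    for _ in range(num_downsamples):
        sizes.append(sizes[-1] // 2)
    return sizes
```

Here is an example. A height of 10 with two pooling stages goes 10 -> 5 -> 2, and upsampling 2 gives 4 rather than 5. That is the kind of gap a `Pad` before `Concat` has to fill. Rounding up to 12 gives 12 -> 6 -> 3, and every upsampled map matches its skip connection.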

I've verified the saved_model, and the input shape is now NHWC:

```python
import tensorflow as tf

# PATH_TO_SAVED_MODEL points at the exported saved_model directory
tf_model = tf.saved_model.load(PATH_TO_SAVED_MODEL)
infer = tf_model.signatures["serving_default"]
print(infer.structured_outputs)
```

```
{'tf.identity': TensorSpec(shape=(1, 32, 256, 1), dtype=tf.float32, name='tf.identity')}
```

In addition, I verified that the saved model produces output that agrees with PyTorch to within 1e-5.

So the NCHW -> NHWC conversion was a success.
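A comparison like the one described above can be sketched with NumPy. The sketch assumes the PyTorch output is NCHW and the TensorFlow output is NHWC, so the PyTorch array is transposed with `(0, 2, 3, 1)` before the element-wise difference is taken. Both function names are hypothetical, and both outputs are assumed to be NumPy arrays already:

```python
import numpy as np


def max_abs_diff_nchw_vs_nhwc(torch_out: np.ndarray, tf_out: np.ndarray) -> float:
    """Largest element-wise difference between an NCHW array and an NHWC array."""
    # NCHW -> NHWC: move the channel axis to the end
    torch_nhwc = np.transpose(torch_out, (0, 2, 3, 1))
    if torch_nhwc.shape != tf_out.shape:
        raise ValueError(f"shape mismatch: {torch_nhwc.shape} vs {tf_out.shape}")
    return float(np.max(np.abs(torch_nhwc - tf_out)))


def outputs_agree(torch_out: np.ndarray, tf_out: np.ndarray, atol: float = 1e-5) -> bool:
    """True if every element agrees within the absolute tolerance `atol`."""
    return max_abs_diff_nchw_vs_nhwc(torch_out, tf_out) <= atol
```

The explicit shape check matters here. NumPy broadcasting could otherwise let a wrongly laid-out array "agree" by accident.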

However, I'm now running into issues with quantization:

```
Integer Quantization started ========================================================
ERROR: tensorflow/lite/kernels/conv.cc:349 input->dims->data[3] != filter->dims->data[3] (3 != 1)Node number 1 (CONV_2D) failed to prepare.
Traceback (most recent call last):
  File "/usr/local/bin/openvino2tensorflow", line 2563, in convert
    tflite_model = converter.convert()
  File "/usr/local/lib/python3.6/dist-packages/tensorflow/lite/python/lite.py", line 921, in convert
    result = self._calibrate_quantize_model(result, **flags)
  File "/usr/local/lib/python3.6/dist-packages/tensorflow/lite/python/lite.py", line 522, in _calibrate_quantize_model
    self.representative_dataset.input_gen)
  File "/usr/local/lib/python3.6/dist-packages/tensorflow/lite/python/optimize/calibrator.py", line 172, in calibrate
    self._calibrator.Prepare([list(s.shape) for s in sample])
RuntimeError: tensorflow/lite/kernels/conv.cc:349 input->dims->data[3] != filter->dims->data[3] (3 != 1)Node number 1 (CONV_2D) failed to prepare.
```

It looks like there is another dimension mismatch. I'm more lost here since I don't know where in the network exactly this is occurring. Would you happen to have some debugging tips?
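One thing worth checking is the representative dataset. This is a guess, not a confirmed diagnosis. The traceback shows the calibrator calling `Prepare([list(s.shape) for s in sample])`, so the model's inputs appear to be sized from the representative-dataset samples. In TFLite, a `CONV_2D` filter is laid out as `(out, kH, kW, in)`. A `3 != 1` mismatch on index 3 would therefore fit calibration images with 3 channels being fed to a model whose early convolution expects 1. The sketch below shows a representative dataset that enforces an NHWC input shape. `INPUT_SHAPE` and the function names are hypothetical placeholders. Averaging RGB down to one channel is an assumption about your preprocessing.

```python
import numpy as np

# Hypothetical placeholder: replace with your model's real NHWC input shape.
INPUT_SHAPE = (1, 32, 256, 1)


def to_model_input(img: np.ndarray, input_shape=INPUT_SHAPE) -> np.ndarray:
    """Convert one HxWxC (or HxW) image into a float32 batch matching input_shape."""
    _, h, w, c = input_shape
    x = np.asarray(img, dtype=np.float32)
    if x.ndim == 2:  # grayscale HxW -> HxWx1
        x = x[..., np.newaxis]
    if x.shape[-1] != c:
        if c == 1:
            # Assumption: a plain channel average matches your training preprocessing
            x = x.mean(axis=-1, keepdims=True)
        else:
            raise ValueError(f"cannot map {x.shape[-1]} channels to {c}")
    if x.shape[:2] != (h, w):
        raise ValueError(f"expected spatial size {(h, w)}, got {x.shape[:2]}")
    return x[np.newaxis, ...]  # add batch dimension -> (1, H, W, C)


def make_representative_dataset(images, input_shape=INPUT_SHAPE):
    """Return a zero-argument generator function yielding one-element input lists."""
    def representative_dataset():
        for img in images:
            yield [to_model_input(img, input_shape)]
    return representative_dataset
```

You might run the conversion yourself with `tf.lite.TFLiteConverter.from_saved_model(...)`. In that case, the result can be attached via `converter.representative_dataset = make_representative_dataset(images)`. How `openvino2tensorflow` builds its own calibration data is not covered by this sketch.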

First of all, thank you very much for your work. I've found your blog post on PyTorch conversion very informative.

My goal was to use your package to convert the model from NCHW to NHWC without having `Transpose` layers everywhere in my quantized TFLite model, so that it can run efficiently on an Edge TPU.

I largely followed your tutorial and was able to do PyTorch -> ONNX -> OpenVINO.
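The following NumPy sketch shows what the NCHW -> NHWC change means for tensors; the function names are hypothetical. Activations are permuted with `(0, 2, 3, 1)`. Convolution weights move from PyTorch's `Conv2d` layout `(out, in, kH, kW)` to one of two targets. TFLite's `CONV_2D` filter layout is `(out, kH, kW, in)`. The Keras `Conv2D` kernel layout is `(kH, kW, in, out)`.

```python
import numpy as np


def nchw_to_nhwc(x: np.ndarray) -> np.ndarray:
    """Activation layout change: (N, C, H, W) -> (N, H, W, C)."""
    return np.transpose(x, (0, 2, 3, 1))


def torch_conv_weight_to_tflite(w_oihw: np.ndarray) -> np.ndarray:
    """PyTorch Conv2d weight (O, I, kH, kW) -> TFLite CONV_2D filter (O, kH, kW, I)."""
    return np.transpose(w_oihw, (0, 2, 3, 1))


def torch_conv_weight_to_keras(w_oihw: np.ndarray) -> np.ndarray:
    """PyTorch Conv2d weight (O, I, kH, kW) -> Keras Conv2D kernel (kH, kW, I, O)."""
    return np.transpose(w_oihw, (2, 3, 1, 0))
```

Here is an example. An NCHW activation of shape `[1, 48, 5, 64]` becomes `(1, 5, 64, 48)` in NHWC.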

However, an error occurs when using

openvino2tensorflow(I used your Docker image, so I think dependencies aren't an issue here)I received this error:

(side note: this is the most informative error messages I've seen in my journey of trying out various conversion packages)

Based on those messages, I used Netron to inspect the

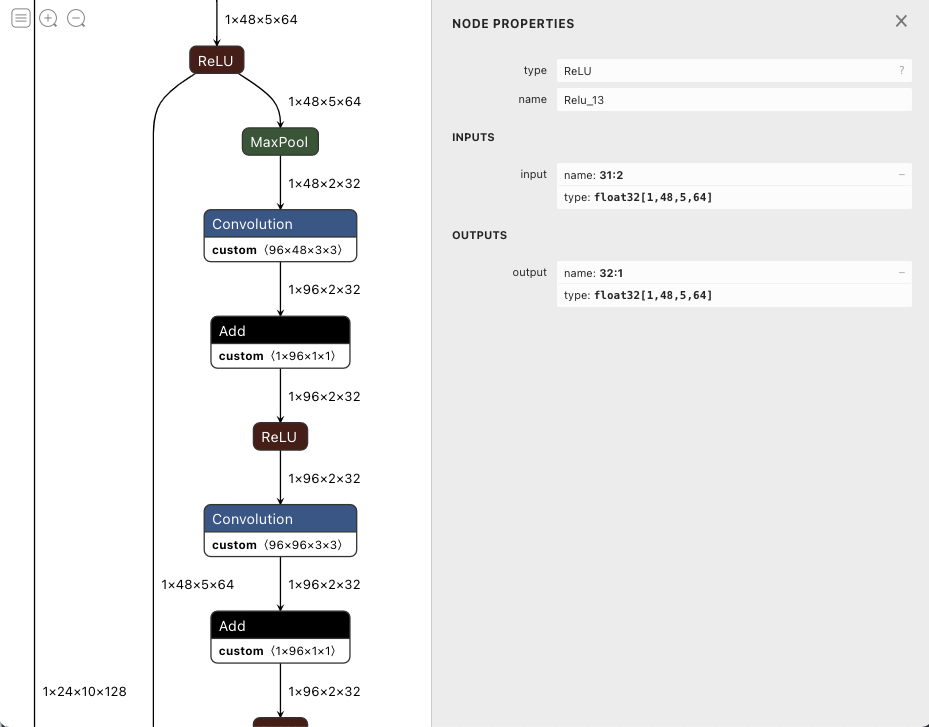

xmlfile produced by OpenVINO. I was pretty baffled by it, because from the graph itself, it doesn't look like the dimensions are mismatched This is layer 32, simply aReLUoperation (shown at the very top):And this is layer 51, a

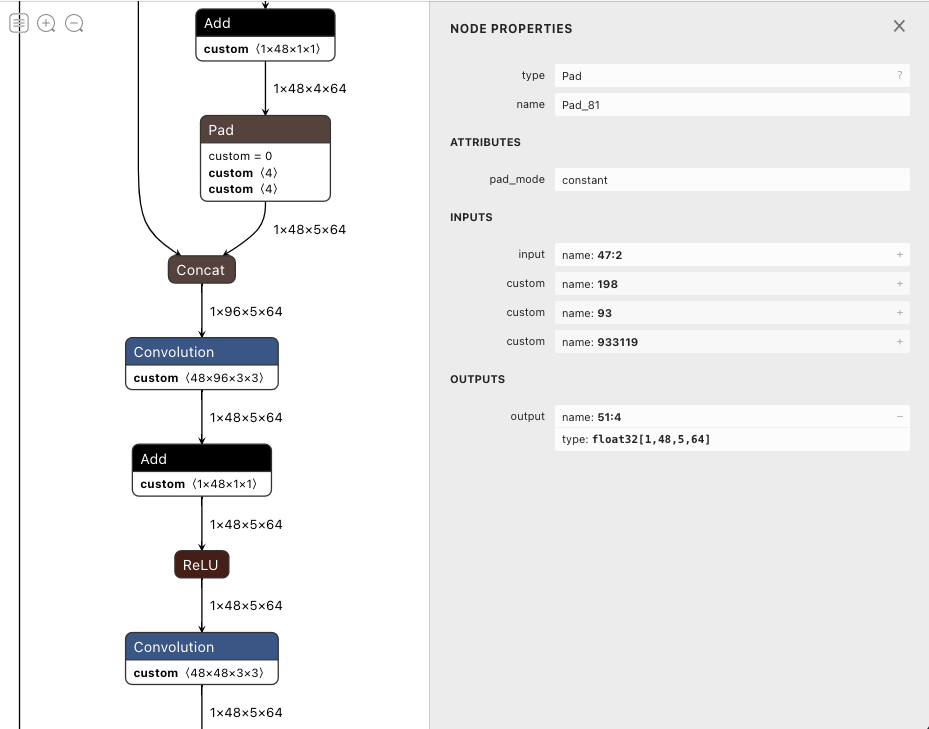

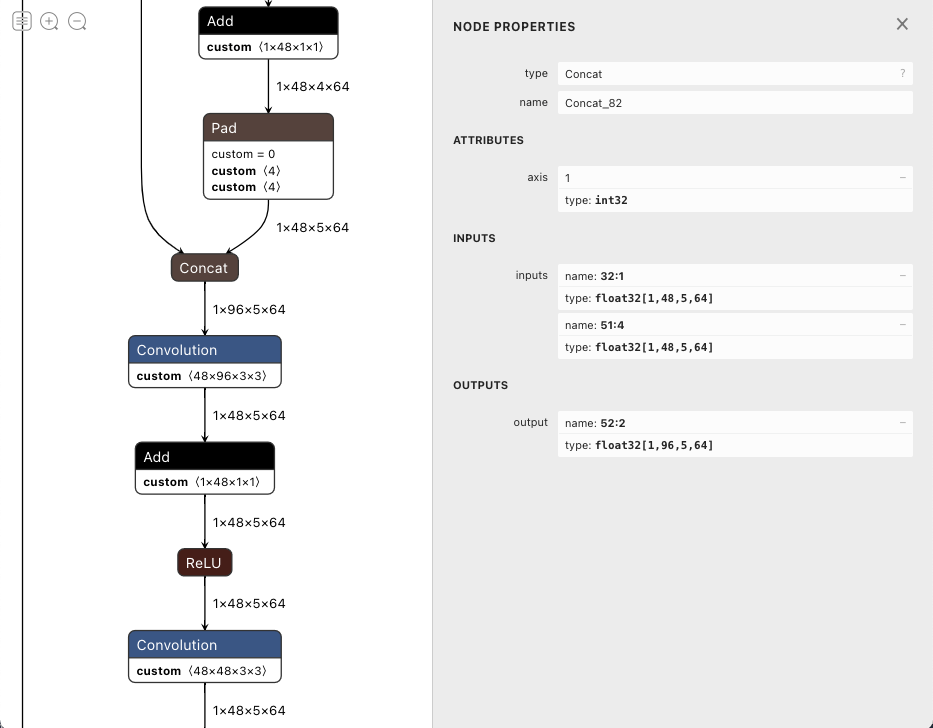

Padoperation:Even looking at the layer where the dimension mismatched supposedly happen, layer 52 a

Concatoperation, the shapes of the two inputs are correct as[1, 48, 5, 64]and[1, 48, 5, 64], there is no mismatchIs it possible that somehow the

Padoperation isn't running properly? I did find it a little strange that thePadlayer becomes antf.identityaccording to the error messageERROR: input_layer1 layer_id=51: KerasTensor(type_spec=TensorSpec(shape=(1, 4, 64, 48), dtype=tf.float32, name=None), name='tf.identity/Identity:0', description="created b y layer 'tf.identity'")Please let me know if there is anything else you want me to elaborate on, I'm happy to provide more details
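The expected padding can be worked out by hand and compared against what the converter produced. This assumes layer 51 comes from the common U-Net pattern, where the upsampled feature map is padded to match the skip connection before `Concat` and any odd extra row or column goes to the bottom or right. The `tf.identity` output has height 4. The `Concat` inputs `[1, 48, 5, 64]` (NCHW) correspond to `(1, 5, 64, 48)` in NHWC, so one row of padding seems to have gone missing. The function names below are hypothetical:

```python
def skip_pad_amounts(skip_hw, up_hw):
    """(top, bottom, left, right) padding that grows `up_hw` to `skip_hw`.
    Assumption: the extra row/column goes to the bottom/right, as in common U-Net code."""
    diff_h = skip_hw[0] - up_hw[0]
    diff_w = skip_hw[1] - up_hw[1]
    if diff_h < 0 or diff_w < 0:
        raise ValueError("upsampled map is larger than the skip connection")
    return (diff_h // 2, diff_h - diff_h // 2, diff_w // 2, diff_w - diff_w // 2)


def nhwc_paddings(skip_hw, up_hw):
    """Same amounts in the per-dimension [[before, after], ...] form used by tf.pad on NHWC."""
    top, bottom, left, right = skip_pad_amounts(skip_hw, up_hw)
    return [[0, 0], [top, bottom], [left, right], [0, 0]]
```

For the shapes above, `skip_pad_amounts((5, 64), (4, 64))` gives `(0, 1, 0, 0)`. The `Pad` should therefore add one row at the bottom and not reduce to an identity.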

One minor note: I didn't fully follow your blog post for the PyTorch -> ONNX step. Instead of using the backend module of OpenVINO's model downloader, I just called `torch.onnx.export` on my own, where the hyperparameter settings I used were:

I also used `onnxsim` to further optimize the ONNX model.