I was getting that and other errors until I indented my code under the initial def handler, as far as I could work out this in a must do:

def handler(pd: "pipedream"):

# Do all stuff under this indent

string = 'Hello everybody'

print (string)

return string

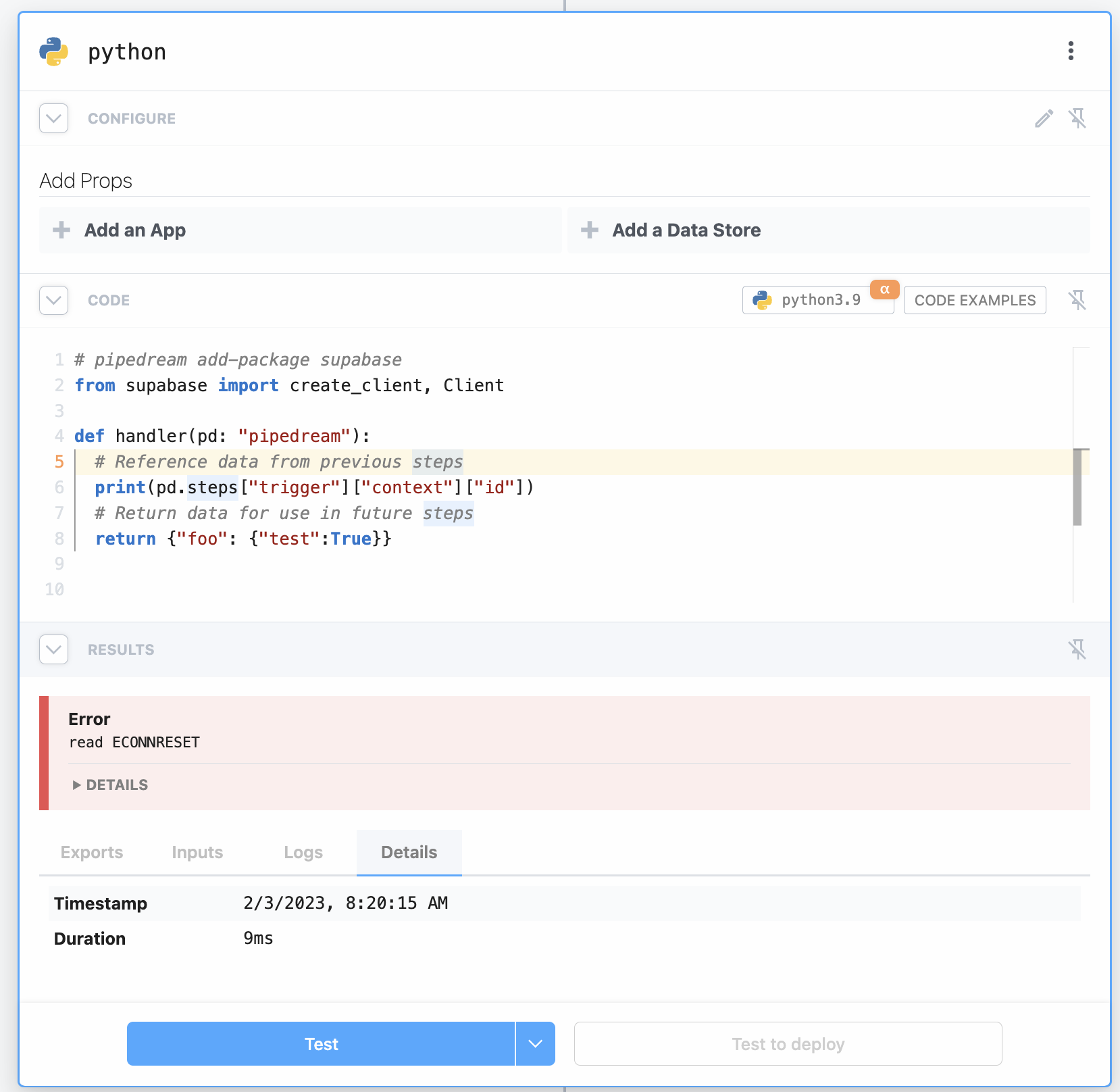

Describe the bug

Python code steps fail with a

ECONNRESETerror without any additional debugging information.It appears to be widespread with an unknown single cause, has potentially multiple cause.

Expected behavior We expect to be able to run valid Python code, or have more helpful error messages surfaced if a runtime error occurs.

Additional context

If you have a specific example, please include the code step code below to help us identify the cause.

Update Feb 22nd 2023

A new version of our Python execution environment for workflows was released. I have tested the scenarios below that I can.

The error handling of various Python exceptions has been fixed.

If you continue to have issues, please provide your Python code that throws this error, without authentication or integration with a 3rd party app is preferable so we can all reproduce the bug.