For the most part I imagine that this makes implementation for some devices easier and as you have shown the handling of received packets faster. However, I don't like that this requires an extra buffer and thus potentially breaks the copy-once paradigm a network stack might want to ensure. Moreover, for link layers with smaller packet sizes this might be fine, but e.g. all Ethernet devices would require an extra 1500 B of RAM with that. This is IMHO a no-go, especially if it is not necessarily required.

Description

While working on #11483, I started evaluating some alternative receive procedures that could help to improve the performance of transceivers and maintainability.

Our current receive procedure using the netdev interface is the following:

dev->driver->isr(dev)from thread contextdev->event_callback(NETDEV_EVENT_RX_COMPLETE)is called fromdev->driver->isr(dev)functiondev->driver->recv(dev, NULL, 0)dev->driver->recv(dev, buffer, pkt_size)However, there are some disadvantages of this procedure:

dev->driver->recvfunction is tricky (usually radios don't understand the concept of "dropping" a packet, so there are blocks and chains of if-else in order to meet the requirements ofbuf != NULL,len != 0, etc. Also, some extra care is needed in order to avoid corrupting the frame buffer when reading the first received byte (PHY layer, Physical Service Data Unit length) withdev->driver->recv(dev, NULL, 0).Proposed procedure

I changed the receive procedure to indicate the rx of a packet, and modified the

_recvfunction to simply write to a frame buffer (a.k.arx_buf,127 bytes for IEEE802.15.4). I also added a PHY Indication function as a callback for the upper layer when a packet is received and areceivefunction:The new procedure looks like:

dev->driver->isr(dev)from thread contextdev->event_callback(NETDEV_EVENT_RX_COMPLETE)is called fromdev->driver->isr(dev)function.dev->driver->recv()function to write directly in a fixed size frame buffer (static or stack allocated), without the drop and pkt size logic. Save the pkt size in apsdu_lenvariable.phy_indication(dev, rx_buf, psdu_len)to indicate the upper layer a packet was received.Results

For both setups (current

dev->driver->recvand PHY indication), I'm using an AT86RF233 radio, a logic analyzer and GPIO pins to measure time. All times include gnrc_pktbuf allocation, which can be reduced for the PHY indication if the MAC layer is processed directly in rx_buf)The logic for the measurement is:

dev->driver->isr(dev)functionnetif->ops->recv()For the PHY indication setup, I modified the

dev->driver->recvfunction to (sort of) preserve the original behavior:Here is a graph comparing our current

dev->driver->recvand thephy_indicationmechanism for packets with different PSDU size:This is the time overhead (%) of the current

dev->driver->recv()procedure:Note there's data duplication in rx_buf and the packet buffer, but could be reduced if the MAC layer is processed from the frame buffer

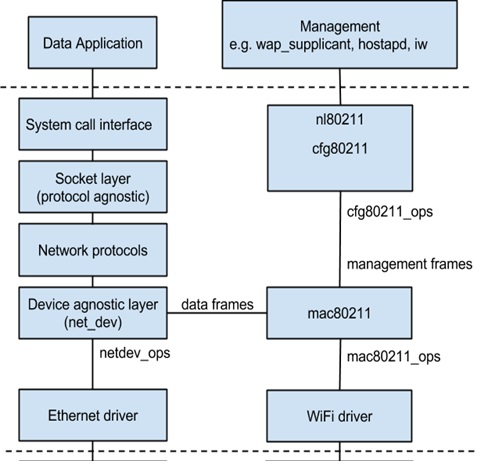

I've seen this pattern at least on OpenThread, OpenWSN and Linux (local buffers in stack). Contiki also has a similar approach.

Useful links