I was able to do this another way. I wanted to post my results:

using AbstractGPs

using Stheno

using Plots

using KernelFunctions

using LinearAlgebra

l = 0.2

x = range(0.0, 1.0; length=100)

f = GP(SEKernel() ∘ ScaleTransform(1/l))

r = transpose(rand(f(x, 1e-12), 10))

Or:

using AbstractGPs

using Stheno

using Plots

using KernelFunctions

using LinearAlgebra

l = 0.2

x = range(0.0, 1.0; length=100)

k = kernelmatrix(SEKernel() ∘ ScaleTransform(1/l), x)

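# Small diagonal jitter keeps the Cholesky factorization numerically stable.
# The factor is named L so that it does not overwrite the lengthscale l.
L = cholesky(k + Diagonal(1e-13 * ones(100))).L

u = randn(100, 10)

r = transpose(L * u)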

p = plot(r[1,:])

for num in 2:10

    plot!(p, r[num,:])

end

p
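
For readers who are new to kernels, the second version can be read as the sketch below. The symbols $\ell$ (lengthscale), $\varepsilon$ (jitter), $K$, $L$ and $u$ are notation introduced here for illustration, and the sketch assumes that `SEKernel()` composed with `ScaleTransform(1/l)` evaluates the usual squared exponential form:

$$
\begin{aligned}
k(x, x') &= \exp\!\left(-\frac{(x - x')^2}{2\ell^2}\right), \qquad K_{ij} = k(x_i, x_j), \\
K + \varepsilon I &= L L^\top \quad \text{(Cholesky factorization)}, \\
r &= L u, \quad u \sim \mathcal{N}(0, I) \;\;\Longrightarrow\;\; \operatorname{Cov}(r) = L \operatorname{Cov}(u) L^\top = K + \varepsilon I .
\end{aligned}
$$

Each column of `L * u` is therefore one draw from a zero-mean Gaussian whose covariance is the kernel matrix plus jitter, which is why the curves come out random but smooth. The transpose just puts one sample in each row for plotting.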

I've been trying to figure out how to use GaussianProcesses.jl to reproduce some code I found in Python. I'm new to kernels and how they are used, so don't hesitate to explain what I'm not understanding. The Python code uses the squared exponential kernel to produce n random but smooth functions:

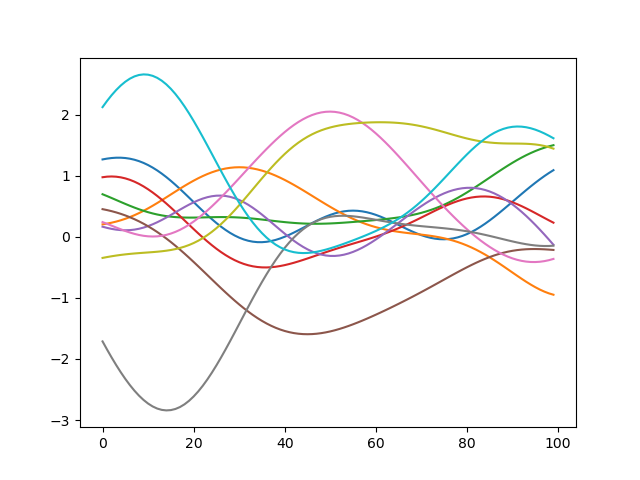

When I plot the results I get plots that look like the following for n=10:

Unfortunately I get stuck early on when I want to produce the radial basis functions of x (k = K(x)). Any help is appreciated.