related #8217 previous #4528

Tasks derived from the study:

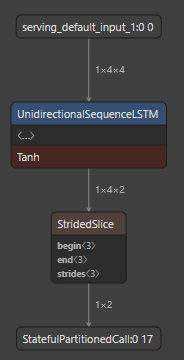

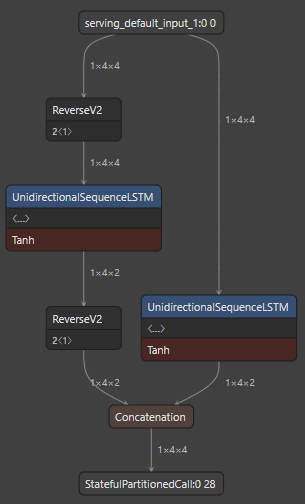

- [x] study LSTM

- [x] unroll LSTM

- [x] codegen unrolled LSTM for TRIX

- [x] generate fused TFLite UnidirectionalSequenceLSTMOp

- [x] unroll GRU

- [x] codegen unrolled GRU for TRIX --> fails

- [x] unroll SimpleRNN

- [x] codegen unrolled SimpleRNN for TRIX

- [x] RNN + SimpleRNNCell

- [x] RNN + LSTMCell

- [x] RNN + GRUCell
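To make "unroll LSTM" concrete, here is a minimal pure-Python sketch. It assumes a standard LSTM without peepholes or projection, with gates in the Keras order i, f, c, o and sigmoid/tanh activations. Unrolling replaces the recurrent loop with a fixed number of cell copies, one per time step, so a backend such as TRIX only has to handle matmul and elementwise ops. The Python `for` loop here stands in for emitting one cell per step. `lstm_cell` and `unrolled_lstm` are hypothetical helpers, not code from this project.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]  # row-major: one row per output unit


def matvec(m: Matrix, v: Sequence[float]) -> Vector:
    """Dense matrix-vector product m @ v."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def lstm_cell(x: Vector, h: Vector, c: Vector,
              W: Dict[str, Matrix], U: Dict[str, Matrix],
              b: Dict[str, Vector]) -> Tuple[Vector, Vector]:
    """One LSTM step; W, U, b hold per-gate parameters keyed 'i', 'f', 'c', 'o'."""
    def pre(gate: str) -> Vector:
        # W_gate @ x + U_gate @ h + b_gate
        return [a + r + bias
                for a, r, bias in zip(matvec(W[gate], x), matvec(U[gate], h), b[gate])]

    i = [sigmoid(v) for v in pre("i")]    # input gate
    f = [sigmoid(v) for v in pre("f")]    # forget gate
    g = [math.tanh(v) for v in pre("c")]  # candidate cell state
    o = [sigmoid(v) for v in pre("o")]    # output gate
    c_new = [fv * cv + iv * gv for fv, cv, iv, gv in zip(f, c, i, g)]
    h_new = [ov * math.tanh(cv) for ov, cv in zip(o, c_new)]
    return h_new, c_new


def unrolled_lstm(xs: List[Vector], W, U, b, units: int):
    """Apply the same cell once per time step, starting from zero states."""
    h = [0.0] * units
    c = [0.0] * units
    outputs = []
    for x in xs:  # a fixed trip count: each iteration becomes one emitted cell
        h, c = lstm_cell(x, h, c, W, U, b)
        outputs.append(h)
    return outputs, h, c
```

Because the sequence length is fixed at import time, the unrolled graph has no loop-carried control flow. The cost is graph size, which grows linearly with the number of time steps.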

Let's find out how to import the TensorFlow LSTM operator.
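One detail an importer has to handle is weight layout. Keras LSTM stores a single `kernel` of shape `[input_dim, 4 * units]` (and a `recurrent_kernel` of shape `[units, 4 * units]`) with the gates packed in i, f, c, o order. The fused TFLite op instead takes one tensor per gate. The sketch below assumes TFLite-style names such as `input_to_forget_weights` and a per-gate shape of `[units, input_dim]`. Please verify both against the TFLite schema before relying on them. `split_keras_lstm_kernel` is a hypothetical helper; the bias would be split the same way, without the transpose.

```python
from typing import Dict, List

Matrix = List[List[float]]

# Assumed Keras packing order of the four gates along the last kernel axis.
GATES = ("input", "forget", "cell", "output")


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def split_keras_lstm_kernel(kernel: Matrix, units: int, prefix: str) -> Dict[str, Matrix]:
    """Split a [in_dim, 4*units] kernel into four [units, in_dim] per-gate matrices.

    prefix="input" for `kernel`, prefix="recurrent" for `recurrent_kernel`
    (hypothetical naming modeled on TFLite's per-gate tensor names).
    """
    if any(len(row) != 4 * units for row in kernel):
        raise ValueError("expected every row to have 4 * units columns")
    out: Dict[str, Matrix] = {}
    for k, gate in enumerate(GATES):
        block = [row[k * units:(k + 1) * units] for row in kernel]  # [in_dim, units]
        out[f"{prefix}_to_{gate}_weights"] = transpose(block)       # [units, in_dim]
    return out
```

For example, `split_keras_lstm_kernel(recurrent_kernel, units, "recurrent")` would yield `recurrent_to_input_weights` through `recurrent_to_output_weights` under the same assumptions.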

reference: