@wjyustl, I encountered the same problem as you. I'm also wondering whether there is a multi-agent task here: it seems to be mentioned in the paper, but I'm not sure it exists in the code.



I also encountered the following error when running the code:

```
Traceback (most recent call last):
  File "/home/wjy/anaconda3/envs/MyLapGym/lib/python3.9/multiprocessing/process.py", line 315, in _bootstrap
    self.run()
  File "/home/wjy/anaconda3/envs/MyLapGym/lib/python3.9/multiprocessing/process.py", line 108, in run
    self._target(*self._args, **self._kwargs)
  File "/home/wjy/anaconda3/envs/MyLapGym/lib/python3.9/site-packages/stable_baselines3/common/vec_env/subproc_vec_env.py", line 45, in _worker
    observation, reset_info = env.reset(seed=data)
  File "/home/wjy/anaconda3/envs/MyLapGym/lib/python3.9/site-packages/gymnasium/wrappers/time_limit.py", line 75, in reset
    return self.env.reset(**kwargs)
  File "/home/wjy/MyLapGym/sofa_env/sofa_env/scenes/rope_threading/rope_threading_env.py", line 769, in reset
    super().reset(seed)
  File "/home/wjy/MyLapGym/sofa_env/sofa_env/base.py", line 208, in reset
    self._init_sim()
  File "/home/wjy/MyLapGym/sofa_env/sofa_env/scenes/rope_threading/rope_threading_env.py", line 299, in _init_sim
    super()._init_sim()
  File "/home/wjy/MyLapGym/sofa_env/sofa_env/base.py", line 282, in _init_sim
    self.scene_creation_result = getattr(self._scene_description_module, "createScene")(self._sofa_root_node, **self.create_scene_kwargs)
  File "/home/wjy/MyLapGym/sofa_env/sofa_env/scenes/rope_threading/scene_description.py", line 144, in createScene
    Eye(
  File "/home/wjy/MyLapGym/sofa_env/sofa_env/scenes/rope_threading/sofa_objects/eye.py", line 90, in __init__
    self.center_node.addObject("RigidMapping", template="Rigid3,Rigid3", globalToLocalCoords=True)
ValueError: Object type RigidMapping<> was not created
The object is in the factory but cannot be created.
Requested template : Rigid3,Rigid3
Used template : None
Also tried to create the object with the template 'Rigid2d,Vec2d' but failed for the following reason(s):
```

Hi @wjyustl and @DISCORDzzz,

@ScheiklP Thanks. My SOFA version is v23.06.

I changed "render_mode" to HUMAN (https://github.com/ScheiklP/sofa_zoo/blob/main/sofa_zoo/envs/rope_threading/ppo.py#L44) and commented out lines 138-140 of the code (https://github.com/ScheiklP/sofa_zoo/blob/main/sofa_zoo/envs/rope_threading/ppo.py#L138-140).

In addition, I had to comment out line 90 of eye.py (https://github.com/ScheiklP/sofa_env/blob/main/sofa_env/scenes/rope_threading/sofa_objects/eye.py#L90), which is the `RigidMapping` line that raised the `ValueError` in the traceback.

With these changes, ppo.py in rope_threading runs normally. Will commenting out these lines make a big difference?

I am actually not sure. Have you noticed anything?

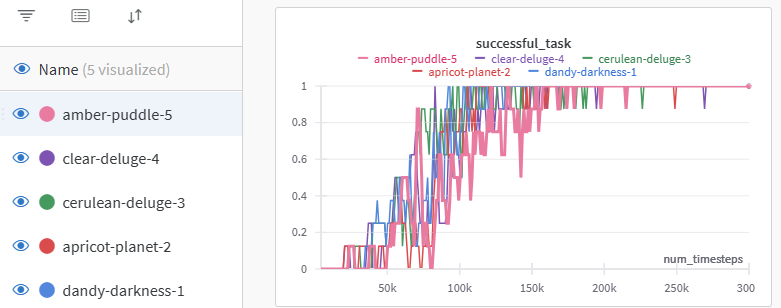

Hi @ScheiklP, sorry to disturb you again. The earlier question about "successful_task" has since been resolved. I plotted the line chart with wandb, with "number_of_envs" set to 8 and each result averaged over 5 repeated runs.
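Averaging repeated runs point by point can also be done outside wandb. Below is a minimal Python sketch, assuming each run's metric has been exported as a list of values logged at the same training steps (if the runs were logged at different steps, they would need to be aligned or interpolated first). The function name `average_curves` and the sample numbers are hypothetical placeholders, not the results in the chart.

```python
from statistics import mean
from typing import List, Sequence


def average_curves(runs: Sequence[Sequence[float]]) -> List[float]:
    """Average several logged curves point by point.

    Assumes every run was logged at the same steps, so index i in each
    run refers to the same training step.
    """
    if not runs:
        raise ValueError("need at least one run")
    length = len(runs[0])
    if any(len(run) != length for run in runs):
        raise ValueError("all runs must have the same number of points")
    # zip(*runs) groups the i-th logged value of every run together
    return [mean(values) for values in zip(*runs)]


# Hypothetical success rates from 5 runs, 3 logged steps each (illustration only)
runs = [
    [0.0, 0.4, 0.8],
    [0.2, 0.4, 0.6],
    [0.0, 0.6, 1.0],
    [0.2, 0.2, 0.8],
    [0.1, 0.4, 0.8],
]
print(average_curves(runs))
```

Reporting the spread across runs (for example the standard deviation at each step) alongside the mean makes it easier to judge whether differences between settings are meaningful.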

My latest question is about multi-agent environments. I want to test a multi-agent reinforcement learning algorithm, which requires a multi-agent environment. Taking "RopeThreading" as an example, I found that the two laparoscopic graspers cannot act at the same time: while one performs an action, the other has to stay still. Is "RopeThreading" still a multi-agent environment? Would it be reasonable to train this environment with a multi-agent reinforcement learning algorithm (e.g. MAPPO) instead of PPO?
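One common way to drive an environment that takes a single joint action with a multi-agent algorithm such as MAPPO is to give each grasper its own policy and concatenate their outputs into the joint action before each `env.step`. The sketch below shows only that bookkeeping. The agent names, the per-grasper action size of 5, and the left-then-right ordering are hypothetical assumptions, not the actual `RopeThreading` action space, which should be checked before relying on this layout.

```python
from typing import Dict, List, Sequence

# Hypothetical layout (assumption): the joint action is the left grasper's
# action followed by the right grasper's action, 5 values each.
AGENT_DIMS: Dict[str, int] = {"left_gripper": 5, "right_gripper": 5}


def split_joint_action(
    joint: Sequence[float], dims: Dict[str, int] = AGENT_DIMS
) -> Dict[str, List[float]]:
    """Split one joint action vector into per-agent slices (dict order = layout order)."""
    expected = sum(dims.values())
    if len(joint) != expected:
        raise ValueError(f"expected {expected} values, got {len(joint)}")
    out: Dict[str, List[float]] = {}
    start = 0
    for agent, dim in dims.items():
        out[agent] = list(joint[start:start + dim])
        start += dim
    return out


def merge_agent_actions(
    actions: Dict[str, Sequence[float]], dims: Dict[str, int] = AGENT_DIMS
) -> List[float]:
    """Concatenate per-agent actions into the single vector a joint env step expects."""
    joint: List[float] = []
    for agent, dim in dims.items():
        action = actions[agent]
        if len(action) != dim:
            raise ValueError(f"{agent}: expected {dim} values, got {len(action)}")
        joint.extend(action)
    return joint


# Usage: each (hypothetical) per-agent policy produces its own action
joint = merge_agent_actions({"left_gripper": [0.1] * 5, "right_gripper": [-0.1] * 5})
print(split_joint_action(joint))
```

Under this layout both slices are sent in the same step call, so each grasper receives an action on every step; whether the real environment behaves this way depends on how its action space is defined.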