I have not published my timelapses, but I could dig them out and put them somewhere for you within a few days.

OK, I had forgotten how resource-hungry this still is. Then it should be a configurable option, enabled only on capable computers. (Only dedicated NVIDIA/AMD GPUs with enough VRAM? Or would the integrated graphics of a Core i5-4xxx be enough? Is CUDA required? Or is there a minimum OpenGL version?)
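If this becomes a configurable option, the enable check could look roughly like the sketch below. It is a minimal illustration, not Stellarium code: `GpuInfo`, `can_precompute` and every threshold are hypothetical. The 4x overhead factor only stands in for the "several times the main texture" VRAM figure mentioned in this thread, and OpenGL 3.3 is an assumed minimum. Since the PAS precomputation appears to be plain OpenGL (note the `glFinish` calls discussed here), the sketch checks the OpenGL version rather than CUDA.

```python
from dataclasses import dataclass
from typing import Tuple

# All thresholds below are hypothetical, not taken from Stellarium or PAS.
MAIN_TEXTURE_MIB = 128     # "decent resolution" main texture size quoted in this thread
PRECOMPUTE_OVERHEAD = 4    # assumption: precomputation needs several times the main texture
MIN_GL_VERSION = (3, 3)    # assumption: minimum OpenGL version for the precomputation shaders


@dataclass
class GpuInfo:
    vram_mib: int
    gl_version: Tuple[int, int]  # (major, minor)


def can_precompute(gpu: GpuInfo, main_texture_mib: int = MAIN_TEXTURE_MIB) -> bool:
    """Return True if the GPU looks capable of running the precomputation."""
    needed_mib = main_texture_mib * PRECOMPUTE_OVERHEAD
    # Tuple comparison orders versions correctly: (4, 5) >= (3, 3), (3, 0) < (3, 3).
    return gpu.gl_version >= MIN_GL_VERSION and gpu.vram_mib >= needed_mib
```

With these assumed numbers a 2 GiB card with OpenGL 4.5 passes, while a GPU with only 256 MiB of usable VRAM, or one limited to OpenGL 3.0, would keep the current atmosphere model.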

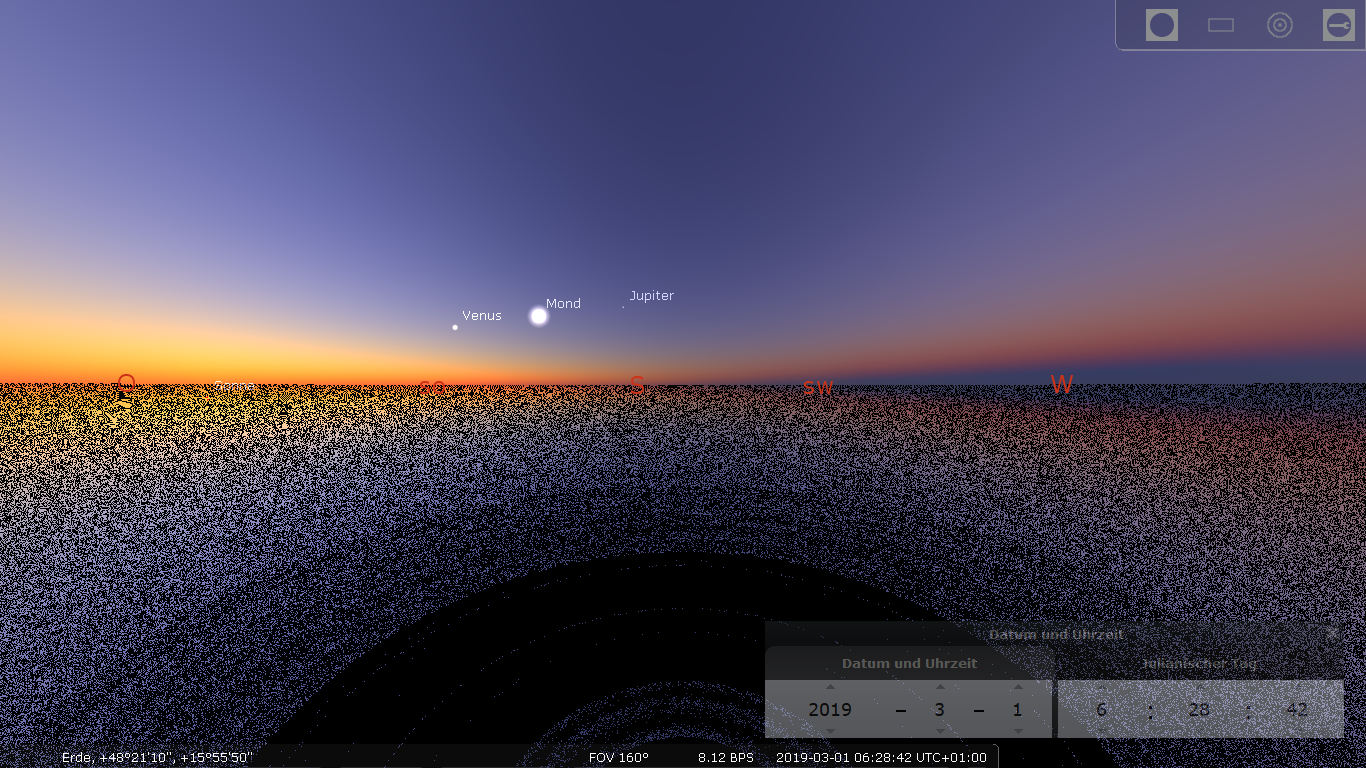

This twilight with the Earth's shadow is great! I hope you can still report luminance, etc. Maybe it could even adapt to ground reflectivity (added to landscape.ini files). It's OK if "precomputation for new conditions" takes up to a minute or so. Would it be useful to cache the results (a few GB of storage is easily found...)? Or even add a whole "Atmospheric conditions" tab with options to save/delete/recreate/...?
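A cache could key each precomputed texture set on a hash of the parameters that produced it. A changed ground albedo or aerosol setting then triggers a new precomputation, while a combination seen before is loaded from disk. This is a minimal sketch; the parameter names, the file naming and the `precompute` callback are all hypothetical.

```python
import hashlib
import json
from pathlib import Path


def cache_key(params: dict) -> str:
    """Stable hash of the atmospheric parameters; key order does not matter."""
    canonical = json.dumps(params, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:16]


def cache_path(cache_dir: Path, params: dict) -> Path:
    """File where the textures precomputed for these parameters would live."""
    return cache_dir / f"atmosphere-{cache_key(params)}.bin"


def load_or_precompute(cache_dir: Path, params: dict, precompute) -> bytes:
    """Return cached texture data, running the (slow, GPU-side) precompute on a miss."""
    path = cache_path(cache_dir, params)
    if path.exists():
        return path.read_bytes()
    data = precompute(params)  # hypothetical callback returning the serialized textures
    cache_dir.mkdir(parents=True, exist_ok=True)
    path.write_bytes(data)
    return data
```

A "save/delete/recreate" tab would then mostly list, remove or regenerate files in that cache directory.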

The logfile says nothing useful.


Moving the discussion started in #623 here.

Are they published anywhere? It would be interesting to compare the model with real-world photos.

There is a problem with this: the precomputation stage is quite resource-hungry. On my NVIDIA GeForce GTX 750 Ti it takes several tens of seconds to precompute the necessary textures, producing a 128 MiB main texture when I set the constants for a decent resolution (otherwise deep twilight is blocky in azimuth and jumpy in time; see this animation, and if you don't notice the jumpiness, try watching it at a lower speed).
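To see how resolution drives memory: in Bruneton's reference implementation the scattering table is a 4D function of (r, μ, μ_s, ν), stored as a 3D texture whose width is the ν size times the μ_s size. The sketch below assumes that layout and an RGBA float texture. The sizes in the usage lines are one hypothetical combination that reaches 128 MiB; they are not the constants used in this fork.

```python
MIB = 2 ** 20


def scattering_texture_bytes(r_size, mu_size, mu_s_size, nu_size,
                             channels=4, bytes_per_channel=4):
    """Memory of the 4D (r, mu, mu_s, nu) table packed into one 3D texture."""
    width = nu_size * mu_s_size  # nu and mu_s share the x axis of the 3D texture
    height = mu_size
    depth = r_size
    return width * height * depth * channels * bytes_per_channel


# Hypothetical sizes: RGBA32F gives 128 MiB, RGBA16F halves it.
print(scattering_texture_bytes(32, 256, 64, 16) / MIB)                       # 128.0
print(scattering_texture_bytes(32, 256, 64, 16, bytes_per_channel=2) / MIB)  # 64.0
```

Because memory is the product of all four sizes, doubling the azimuthal (ν) resolution alone to remove the blockiness doubles the texture.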

Moreover, until I added a couple of `glFinish` calls in my fork of PAS (branch "stellarium"), precomputation made my X11 session unusable (frozen mouse cursor, etc.) and sometimes locked up the X server indefinitely. The results were also sometimes garbage (e.g. a missing red channel; I suppose this is due to NVIDIA driver bugs, but it might be races in the implementation).

Also, a decent-resolution main texture is at least 128 MiB, and the precomputation stage needs several times that amount of VRAM, so this looks unfriendly to mobile devices.

So I'm not sure we really want to give the user an option to change these parameters from within the Stellarium GUI.

Well, if you insist, OK. Currently my WIP `atmosphere` branch of Stellarium simply hacks into class `Atmosphere`.

This might well be possible, but each new planet needs its own atmospheric density profile, scattering and absorption cross-sections, Mie scattering phase functions (based on a sensible particle size distribution), etc. With this data we can indeed try to precompute the corresponding textures (maybe increasing the number of scattering orders).
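To illustrate the per-planet inputs listed above, the sketch below bundles them into one hypothetical `PlanetAtmosphere` record. It assumes simple exponential density profiles. It also replaces a proper Mie computation from a particle size distribution with the single-parameter Cornette-Shanks phase function (the form Bruneton's reference code uses for Mie scattering), so a real planet would need measured or modelled profiles instead. `phase_normalization` checks numerically that the phase function integrates to 1 over the sphere.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class ExponentialLayer:
    """Density relative to ground level: exp(-h / scale_height)."""
    scale_height_km: float

    def density(self, altitude_km: float) -> float:
        return math.exp(-altitude_km / self.scale_height_km)


def cornette_shanks(mu: float, g: float) -> float:
    """Cornette-Shanks phase function; mu = cos(scattering angle), g = asymmetry."""
    k = 3.0 / (8.0 * math.pi) * (1.0 - g * g) / (2.0 + g * g)
    return k * (1.0 + mu * mu) / (1.0 + g * g - 2.0 * g * mu) ** 1.5


@dataclass
class PlanetAtmosphere:
    """Hypothetical per-planet inputs the precomputation would need."""
    name: str
    rayleigh_scattering_per_km: Tuple[float, float, float]  # RGB, at ground level
    rayleigh_density: ExponentialLayer
    mie_scattering_per_km: float
    mie_extinction_per_km: float  # scattering + absorption
    mie_density: ExponentialLayer
    mie_g: float  # stand-in for a phase function derived from a size distribution
    ground_albedo: float


def phase_normalization(g: float, n: int = 20000) -> float:
    """Midpoint-rule estimate of 2*pi * integral of p(mu) over [-1, 1]; should be ~1."""
    h = 2.0 / n
    total = sum(cornette_shanks(-1.0 + (i + 0.5) * h, g) for i in range(n))
    return 2.0 * math.pi * total * h
```

One `PlanetAtmosphere` per body would then be the input to a separate precomputation run.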