First off, I recommend the Melodic branch. It has a bunch more features and is better structured. It will work in Kinetic.

Second, it would be helpful to provide me with a bag file I can use to reproduce.

My steps to reproduce:

- Downloaded your bag file.

- Ran `rosbag filter bag_name.bag output.bag 'topic != "/tf" or topic == "/tf" and m.transforms[0].header.frame_id != "map" and m.transforms[0].child_frame_id != "odom"'` on the bag file to remove the transformations you recorded from your SLAM/localization runs, so I was left with only the raw data and could provide my own.

- Ran `roslaunch slam_toolbox online_sync.launch` with a roscore and sim time.

So make sure you see this error when following those steps on your machines.
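
For anyone adapting the filter step: the expression passed to `rosbag filter` is plain Python evaluated once per message, so its behavior can be checked without ROS. The sketch below copies the expression into a function and runs it on stand-in messages. The `namedtuple` types and the `keep` and `tf` helpers are hypothetical and only mimic the fields the expression reads; they are not ROS message classes.

```python
from collections import namedtuple

# Hypothetical stand-ins exposing only the fields the filter expression reads.
Header = namedtuple("Header", ["frame_id"])
TransformStamped = namedtuple("TransformStamped", ["header", "child_frame_id"])
TFMessage = namedtuple("TFMessage", ["transforms"])


def keep(topic, m):
    """Same boolean expression as in the rosbag filter command.

    `and` binds tighter than `or`, so this reads as:
    topic != "/tf"  OR  (topic == "/tf" AND parent != "map" AND child != "odom")
    """
    return (topic != "/tf" or topic == "/tf"
            and m.transforms[0].header.frame_id != "map"
            and m.transforms[0].child_frame_id != "odom")


def tf(parent, child):
    """Build a /tf message carrying a single parent -> child transform."""
    return TFMessage([TransformStamped(Header(parent), child)])


# Non-/tf topics are always kept; the message is never inspected.
scan_kept = keep("/scan", None)
# The SLAM-provided map -> odom transform is dropped.
map_odom_kept = keep("/tf", tf("map", "odom"))
# Odometry and static sensor transforms survive.
odom_base_kept = keep("/tf", tf("odom", "base_link"))
base_laser_kept = keep("/tf", tf("base_link", "laser"))
```

Note that only `transforms[0]` is inspected, so a `/tf` message that bundles several transforms is kept or dropped as a whole based on its first entry.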

Third, how did you install Ceres? I've heard of similar issues that were the result of a dirty Ceres install that comes with Google Cartographer. If you've installed Google Cartographer, you will need to remove that Ceres install and install it again via rosdep. The Ceres that ships with Cartographer is only suitable for Cartographer. This thread may be helpful: https://github.com/SteveMacenski/slam_toolbox/issues/167

The laser is an RPLIDAR A2M8. It's installed with the cable pointing to the robot's back.

I am using ROS Noetic installed via apt. This also happens on ROS Melodic.

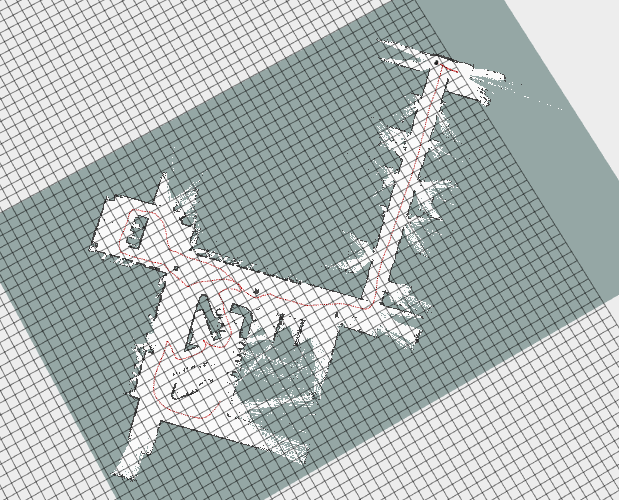

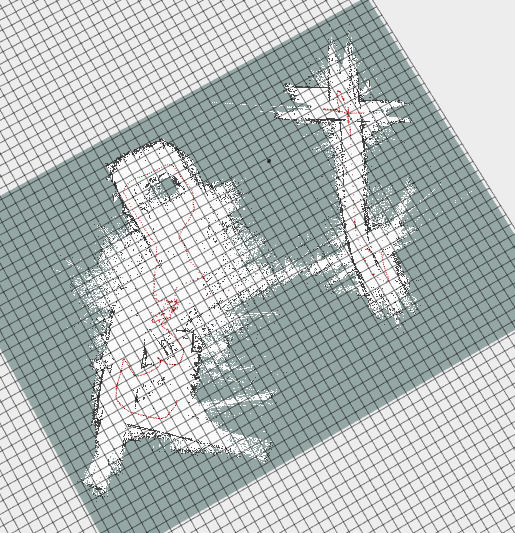

This image is from playing the bag file.

At least I think it does. Not knowing what the building looks like, this could be a nice-looking but ultimately incorrect view, for example if some large area is duplicated.

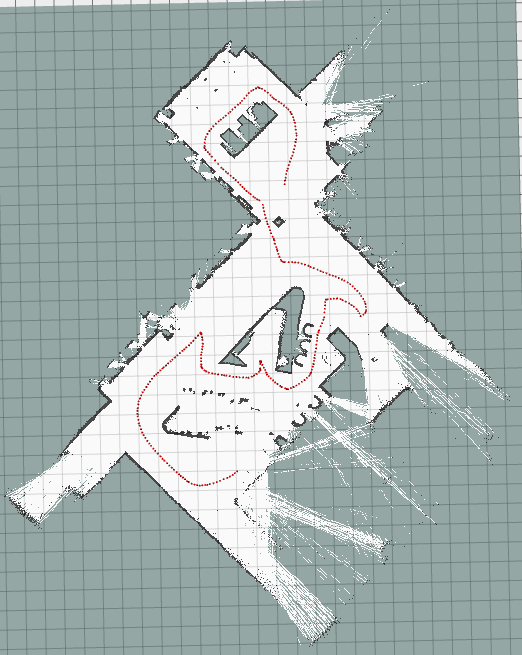

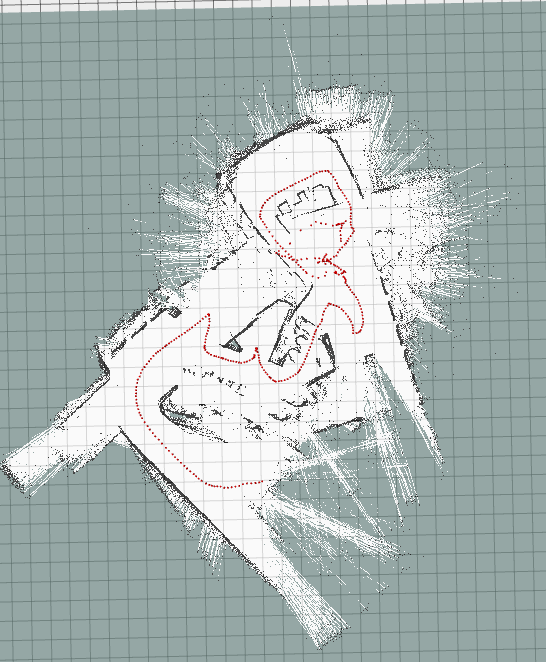

NOTE: The RED axis is "pointing" in the direction of the cord on the RPLIDAR A3 unit.
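
To make the axis note concrete: in RViz the red axis is the frame's x axis, so a unit whose cord faces the rear of the robot would have its x axis pointing backwards, i.e. a yaw of 180° relative to the robot's forward direction. The sketch below computes the corresponding quaternion for a static transform. It assumes the same cord convention applies to the unit in question and that the sensor is mounted flat (no roll or pitch); `yaw_to_quaternion` is a hypothetical helper, not part of any ROS package.

```python
import math


def yaw_to_quaternion(yaw_rad):
    """Quaternion (x, y, z, w) for a pure rotation about the z axis."""
    half = yaw_rad / 2.0
    return (0.0, 0.0, math.sin(half), math.cos(half))


# Assumed mount: cord (and therefore the laser's x axis) facing the robot's back.
laser_yaw = math.pi
qx, qy, qz, qw = yaw_to_quaternion(laser_yaw)
```

For a yaw of π this gives a quaternion that is numerically (0, 0, 1, 0), which is what the base-to-laser transform would carry under these assumptions.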

Required Info:

Steps to reproduce issue

I created a minimal repo with bags, config and launch files:

https://github.com/saschroeder/turtlebot_slam_testing

The bug always occurs with rosbags and sometimes in simulation, too. Disabling loop closure prevents the bug.

I can upload more rosbags if necessary.

Expected behavior

Record an accurate map and correctly use loop closure to improve the map.

Actual behavior

After some time the map is rotated by about 180° (this may or may not be related), and some time after that (seconds to minutes) the pose graph is optimized incorrectly, which breaks the map (see images below).

Additional information

Example 1 (before/after optimization):

Example 2 (before/after optimization):