Same problem. In cfg.py I set Cfg.use_darknet_cfg = True and use yolov4.cfg as the cfgfile, and I rewrote get_image_id like this:

Closed hongrui16 closed 3 years ago

SAME problem!

The inference output is now split into 2 tensors:

boxes: [batch, num_boxes, 1, 4]

confs: [batch, num_boxes, num_classes]

The output used to be one compact tensor of shape [batch, num_boxes, 4 + num_classes].

Please double-check that your local source code is consistent with this change.
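To make the shape change concrete, here is a minimal sketch of how the two tensors relate to the old compact layout. It uses NumPy arrays as stand-ins for the model's tensors, and the sizes (batch, num_boxes, num_classes) are made-up values for illustration only, not anything taken from the repo.

```python
import numpy as np

# Hypothetical sizes, chosen only for illustration.
batch, num_boxes, num_classes = 2, 5, 3

# Stand-ins for the two tensors returned at inference time.
boxes = np.zeros((batch, num_boxes, 1, 4), dtype=np.float32)
confs = np.zeros((batch, num_boxes, num_classes), dtype=np.float32)

# Drop the singleton axis from boxes, then join along the last axis to get
# the old compact layout [batch, num_boxes, 4 + num_classes].
compact = np.concatenate([boxes.squeeze(axis=2), confs], axis=-1)

print(compact.shape)  # (2, 5, 7)
```

Code written for the old layout would need a step like this, or it has to be updated to read boxes and confs separately.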

Hello, I checked the output with print(outputs[0].shape) to get the tensor shape. Here are my results and errors:

The shape is [1, 22743, 1, 4]. I only get the bbox values and cannot see the confs values. Is there a reason for this?

I changed Cfg.use_darknet_cfg to False in cfg.py. Is that related to this error? If I change the value to True, I get the error described here: https://github.com/Tianxiaomo/pytorch-YOLOv4/issues/142
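If the output really is the two-tensor pair described earlier in this thread, then outputs[0] would only ever show the boxes, and the confs would sit in outputs[1]. A small sketch of checking both, using NumPy arrays as stand-ins for the real tensors; the class count of 80 is an assumption (COCO), not something reported above.

```python
import numpy as np

# Stand-in for the model output: a pair (boxes, confs).
# 22743 matches the shape reported above; 80 classes is an assumption.
outputs = (
    np.zeros((1, 22743, 1, 4), dtype=np.float32),
    np.zeros((1, 22743, 80), dtype=np.float32),
)

# Print the shape of every element, not just the first one.
for name, tensor in zip(("boxes", "confs"), outputs):
    print(name, tensor.shape)
```

Seeing [1, 22743, 1, 4] for outputs[0] would then be expected rather than a sign that the confs are missing.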

I have edited the get_image_id function. The error has nothing to do with that function.

In fact, that change is meant to solve a different problem: https://github.com/Tianxiaomo/pytorch-YOLOv4/issues/182. If you don't have that error, you don't need to make it.

Hi,

Try replacing this line

for img, target, (boxes, confs) in zip(images, targets, outputs):

with

for img, target, boxes, confs in zip(images, targets, outputs[0], outputs[1]):

This solved my problem.
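For anyone wondering why this helps, here is a rough sketch with plain Python lists standing in for the real images, targets, and tensors (all values are placeholders). It assumes outputs is a two-element sequence [boxes, confs], each holding one entry per image. Zipping over outputs itself walks over those two elements rather than over the images, so the old loop ends up trying to unpack the whole boxes batch into (boxes, confs).

```python
# Placeholder data for a batch of 3 images.
images = ["img0", "img1", "img2"]
targets = ["tgt0", "tgt1", "tgt2"]
outputs = [
    ["boxes0", "boxes1", "boxes2"],  # outputs[0]: one entry per image
    ["confs0", "confs1", "confs2"],  # outputs[1]: one entry per image
]

# Old loop: the third zipped item is outputs[0], a batch of 3 entries,
# which cannot be unpacked into the two names (boxes, confs).
try:
    for img, target, (boxes, confs) in zip(images, targets, outputs):
        pass
    old_error = None
except ValueError as exc:
    old_error = str(exc)
print(old_error)

# Suggested loop: zip over the per-image entries of each tensor instead.
paired = [
    (img, boxes, confs)
    for img, target, boxes, confs in zip(images, targets, outputs[0], outputs[1])
]
print(paired)
```

With a batch of exactly 2 the old loop would not raise at all and would silently mix up boxes and confs, so the explicit outputs[0] and outputs[1] form is the safer one either way.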

I did the same, but I still get a different error.

I KNOW

Has anyone else run into this problem? AssertionError: results do not correspond to current coco set https://github.com/Tianxiaomo/pytorch-YOLOv4/issues/219

Hi, have you solved this problem? I also encountered it.

I give up. The repo is bullshit. There is no specific introduction on how to set up the dataset. After I put a lot of effort into preparing the dataset to match this repo, more bugs emerged. In my mind, if we put a repo on GitHub, we should at least make it work well and give it a proper introduction, especially when the repo is a bit complicated. Bullshit.

Hello guys!

I am trying to run the lda2vec algorithm from (https://github.com/sebkim/lda2vec-pytorch) on my own data. When I run preprocess.py, I get this error.

How can I fix this error? Could someone help me?

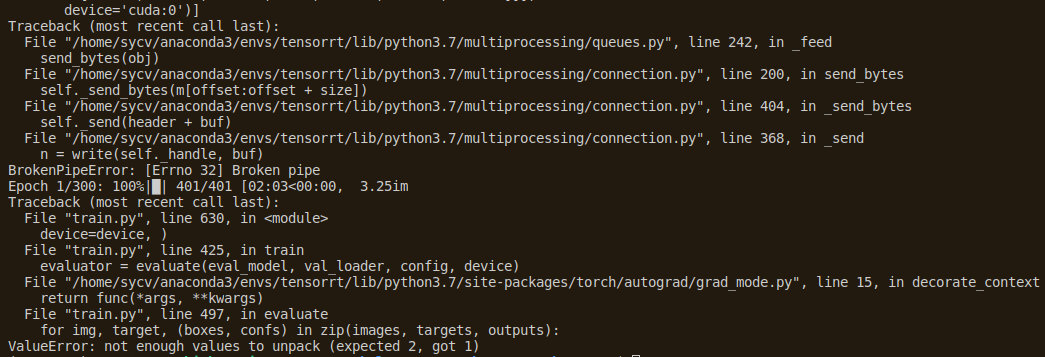

Traceback (most recent call last):
  File "train.py", line 635, in
    device=device, )
  File "train.py", line 425, in train
    evaluator = evaluate(eval_model, val_loader, config, device)
  File "/root/anaconda2/envs/pytorch_1_5/lib/python3.6/site-packages/torch/autograd/grad_mode.py", line 15, in decorate_context
    return func(*args, **kwargs)
  File "train.py", line 493, in evaluate
    for img, target, (boxes, confs) in zip(images, targets, outputs):
ValueError: too many values to unpack (expected 2)

Is there a working solution for this?