@YourDaddyIsHere your thesis issue...

Closed synctext closed 6 years ago

@YourDaddyIsHere your thesis issue...

Well, thanks.

More reading, besides Dispersy.py reading..:

As a first warm-up experiment, please implement a Dispersy walker in Python using both Twisted and Protocol Buffers.

To prepare, please also dive into this code and understand the structure. This is a fully functional walker in Java, written by a bachelor student.. See how peer lists are stored and messages are defined there. Something much more advanced has been build by @qstokkink using Protocol Buffers, see the code repo here.

The goal is to create a as-simple-as-possible Introduction-req and introduction-response parser and generator that can take steps on our live network. For this first prototype it is OK to have infinite memory growth and simplistic non-existent error handling.

Please read the protocol rules for peer discovery carefully and try to simply always walk to a fresh walk-Candidate.

* If you want to read about my serializer you should start here to get an impression: https://github.com/qstokkink/TriblerProtobufSerialization/tree/triblermessages#complete-example

This above task might take a bit of time. A smaller 1-day starting task.. Please create a blocking cmdline tool like contact_bootstrap_peers.py which simply sends out a introduction-request message to our hard-coded bootstrap servers, blocks infinitely until it obtains a response, prints that, then exits. Usage of a minimal amount of Python lines is appreciated, as we can use this within a deeper and broader tutorial.

@synctext Thanks, I have gone through the "fully functional walker in java by bachelor student", I find that they use their own bootstrap server rather than the tracker of Tribler.

Yes, implementing a dispersy walker needs a bit time, so I am now doing the smaller experiment.

@qstokkink thanks, that is helpful

@YourDaddyIsHere to make your experiment as self-contained as possible, I would suggest to run your own Dispersy bootstrap server. This is not hard to do and you can use our existing twistd plugin for this: https://github.com/Tribler/dispersy/blob/devel/twisted/plugins/tracker_plugin.py. After you've started this plugin, you can create a bootstraptribler.txt file in your Dispersy working directory where you specify the IP/port of the tracker you just started (see https://github.com/Tribler/dispersy/blob/12c050af3b15dd0b92fa10d52aad78d40379f665/discovery/community.py#L208).

@devos50 OK

@synctext Hello, happy new year. I can now well play with twisted and protocol buffer. But: What does "take steps on our live network" mean? The live network means the REAL dispersy network? But the real dispersy network uses conversion.py, (rather than protocol buffer) to encode and decode messages (conversion.py uses built-in Struct.pack to decode and encode), if I use protocol buffer to do serialization, it won't be compatible with REAL dispersy network.

So, maybe live network doesn't mean the REAL dispersy network, and I don't need to follow the all the fashion of REAL dispersy network (using conversion.py for serialization etc.) and simply implements a walker follow protocol rules for peer discovery carefully? If so, I almost finish that.

indeed. Quinten already indicated we can't make it binary compatible easily. Due to a security fix we need to upgrade the tunnel community anyways. We can break compatibility in next release. Then you can do walks in live network for testing and maturing your code. Any unit tests yet?

@synctext not yet. Do you think I should still stick with protocol buffer (which may not be compatible with dispersy message serialization fashion) or just use conversion.py for serialization? Since it is just a warm-up, maybe...it is wise to "borrow" something from existing dispersy?

clean implementation with protocol buffers please

@YourDaddyIsHere if you want the quick and dirty for sending through Dispersy: you can simply pack the Protocol Buffers serialization into a string and use this as a Payload for a Dispersy message (example where I used this to send JSON here). The tunnel community uses a different method to sign Dispersy messages anyway (which is partially the reason why the Dispersy message definitions are all the same).

That said, I have a PR open which provides dynamic Dispersy messages using Protocol Buffers serialization. I doubt you will need all of Dispersy's fancy message sharing options for your work though.

Please focus on MultiChain community (for trusted peer discovery):

Docs on tunnel community:

Please publish for next meeting: https://github.com/YourDaddyIsHere/walker_with_clean_design Talk to real Dispersy tracker and walk on the search community. Determine response rate.

Rough timeline deadline:

@remkonaber Please sync with this student for your Ansible work. 19 NAT boxes are responsive. power cycle from software via USB. Server: natlab.ewi.tudelft.nl

More technical reading.. It is difficult to write a single NAT walker for all uses. This describes the requirements for a high-volume node. Especially the hamming/load balancing part is important to understand: http://blog.libtorrent.org/2016/09/dht-bootstrap-node/

ToDo: please understand, read their code, and evaluate the possibility of also implementing: "This function generates a transaction ID based on the destination IP address and a local secret value. This is a way to verify responses without storing state for each ping."

OK,got it,reading now

Please remove existing Dispersy and Tribler code. Create a minimal walker with just 4 messages. Objective: simplicity, clean, understandable code, correctness, and code coverage. Preference is just a single .py file with 250 lines of code, single hard-coded IPv4 to bootstrap, hard-coded multi-chain master member.

possible key thesis figures:

@synctext This is the clean-walker:https://github.com/YourDaddyIsHere/Clean_Walker there is no dependency on tribler or dispersy codes (I keep using crypto.py of dispersy, but I rewrite part of the crypto.py so it doesn't has dependency on tribler or dispersy anymore)

It can connect with the live network (walk in multichain community). The walker is simple and it can only deal with 4 walker-message (introduction-request, introduction-response, puncture-request, puncture) + 2 identity-message (dispersy-missing-identity, dispersy-identity). For other messages types of multichain community, it doesn't support.

The codes are far more than 250 lines, the serialization/de-serialization of the 6 types of messages take around 500 lines, the walker's logic takes around 250 lines indeed.

For running it, just type "python walker.py". I already tested it with real trackers in live network, all types of messages works. I am hunting bugs on it, want to make it more robust.

For NAT puncturing test, I need to add some statistic modules on it (e.g. calculating puncturing rate for each NAT)

ToDo:

~/walker__clean_code>wc -l *py

423 crypto.py

66 Message.py

40 Mylistener.py

697 walker.py

204 WcandidateGroup.py

50 Wcandidate.py

1480 totalPlease improve your code for our next meeting and make it maintainable. Ugly duplicate code: https://github.com/YourDaddyIsHere/Clean_Walker/blob/master/walker.py#L70 Ugly hardcoded stuff:

Excellent, so next improvement of MultiChain we need Protocol Buffers + identity inclusion option. This last feature adds your full public key to every message you send. This wastes bandwidth, but removes the need for dispersy-identity message. Making messages atomic is also nice.

@egbertbouman You have more code improvement pointers?

Future work, connected to #2778.

The walker uses transitive trust to discover random peers, low-latency peers, and taste-similar peers. Communities are able to insert a peer-discovery-bias into the walker. Every walk with certain reset probability produces 3-8 peers. See #2458 for the walk with visit to multiple peers.

This is the original design note from 12 years ago. I used this design during a meeting with Professor Maarten van Steen, the expert in 2005 for epidemic protocols and gossip. We now finally have this magic T() trust filter and S() similarity filter implemented and deployed (the world no longer cares about distributed recommenders, no publication possibility means we move on and no longer care). For this thesis we can put it within a generic peer-discovery-bias framework with experimental evaluation.

@synctext 1.For identity inclusion, I think the current dispersy has implemented that. The payload of dispersy-identity message is empty (you can check _encode_identity() in conversion.py). The identity of a member lies in the Header of the all types of Message (the Authentication part of the Header), for community version not greater than 1, the identity is represented by sha1 digest of public key, for community version greater than 1, it is represented by public key string. Multichain community is in version 1, so it use sha1 digest (the same as my walker). It seems that the only thing we need to do is boosting the Multichain community to version 2, we get "identity inclusion" feature, right?

2.For the original design 12 years ago, I go through all the papers you gave me (including the one in #2458), but I haven't seen that hand writing architecture graph (the one with S() and T()), where can I find more details about that?

@YourDaddyIsHere Yup, just change the community version to 2 and change to community id and you get identity inclusion.

@egbertbouman OK,thanks

You discovered a mistake, the multichain community should have been on V2 already...

@synctext XD.... Ok, then I change the encoding of Authentication in my walker, make it support both V1 and V2.

>time env PYTHONPATH=. python -O test/testWcandidateGroup.py

['/home/pouwelse/GITHUB/walker__clean_code/test', '/usr/lib/python2.7/dist-packages', '/home/pouwelse/GITHUB/walker__clean_code', '/usr/lib/python2.7', '/usr/lib/python2.7/plat-x86_64-linux-gnu', '/usr/lib/python2.7/lib-tk', '/usr/lib/python2.7/lib-old', '/usr/lib/python2.7/lib-dynload', '/home/pouwelse/.local/lib/python2.7/site-packages', '/usr/local/lib/python2.7/dist-packages', '/usr/lib/python2.7/dist-packages/PILcompat', '/usr/lib/python2.7/dist-packages/gtk-2.0', '/usr/lib/python2.7/dist-packages/ubuntu-sso-client', '/usr/lib/python2.7/dist-packages/wx-3.0-gtk2', '..']

initializing trusted_list

the length of trusted list is:

13

test_add_candidate_to_intro_list (__main__.Test_Walker) ... the intro_list is now:

('1.1.1.3', 6421)

ok

test_add_candidate_to_stumble_list (__main__.Test_Walker) ... the stumble_list is now:

[(u'1.1.1.2', 6421), ('1.1.1.2', 6421)]

ok

test_add_candidate_to_walk_list (__main__.Test_Walker) ... the walk_list is now:

('1.1.1.1', 6421)

ok

test_get_candidate_to_introduce (__main__.Test_Walker) ... the stumble_list is now:

[(u'1.1.1.2', 6421), ('1.1.1.2', 6421)]

[(u'4.4.4.4', 6421), ('4.4.4.4', 6421)]

the stumble_list is now:

[(u'1.1.1.2', 6421), ('1.1.1.2', 6421)]

[(u'4.4.4.4', 6421), ('4.4.4.4', 6421)]

[(u'5.5.5.5', 6421), ('5.5.5.5', 6421)]

FAIL

test_get_candidate_to_walk (__main__.Test_Walker) ... the stumble_list is now:

[(u'2.2.2.2', 6421), ('2.2.2.2', 6421)]

the stumble_list is now:

[(u'2.2.2.2', 6421), ('2.2.2.2', 6421)]

[(u'3.3.3.3', 6421), ('3.3.3.3', 6421)]

ok

test_is_in_list (__main__.Test_Walker) ... ok

======================================================================

FAIL: test_get_candidate_to_introduce (__main__.Test_Walker)

----------------------------------------------------------------------

Traceback (most recent call last):

File "test/testWcandidateGroup.py", line 59, in test_get_candidate_to_introduce

self.assertFalse(self.candidate_group.is_in_list(candidate1,self.candidate_group.stumble_candidates))

AssertionError: True is not false

----------------------------------------------------------------------

Ran 6 tests in 140.062s

FAILED (failures=1)

0Please use a package for the unit tests, nosetests + obtain an account on Jenkins.tribler.org :

Please tidy up the code! try to replace this entire file with 1 message_type def

Try to remove code duplication on parsing

Typo: signature

Also strip the 423 lines of code in crypto.py of everything you don't need.

wc -l test/*.py

235 test/testWalker.py

67 test/testWcandidateGroup.py

302 total

wc -l *.py

423 crypto.py

0 __init__.py

66 Message.py

2 testCoverage.py

699 walker.py

222 WcandidateGroup.py

39 Wcandidate.py

1451 totalNext step is running 1000 walkers towards 10000 walkers in 1 big computer using Gumby. Isolated experiment where they walk to eachother and there are no load balancing issues.

https://github.com/YourDaddyIsHere/Clean_Walker https://github.com/YourDaddyIsHere/load_balancing_experiment

1.Remove things I don't need in crypto.py 2.Use single message definition in Message.py 3.Replace Unittest with Nosetest 4.The load balancing experiment is done, but the results is very strange...

Please do a clean slate implementation with human readable code, use the example for easy to read code. Do not use any _struct_H.pack( unreadable silliness anymore.

Issues to address:

Candidate and Member names. Replace with a intuitive names: identity and neighbor.candidate_to_walk_ADDR = candidate_to_walk.get_WAN_ADDR() replace WAN addresses with the correct term the rest of the world uses: _public_IP_andportif message_id == 246: print("here is a introduction-request") this is a type, so message_typestumble mentions. Use the term incoming and outgoing everywhere and exclusively.WALK_LIMIT, and INTRO_LIMIT with a proper _timeout_for_incomingintroduction-req

@synctext Hi, could you give me some documents (maybe some academic papers) for this picture?

I know it is for future work, not for now. But I think I need to know more details about what should the "future walker" looks like before I finish the even-cleaner-walker. Or I may need to completely rewrite it again in the future.

no such paper exists. Was very old initial idea/dream. Please ignore.

even-cleaner-neighbor-discovery

I think it will be better to add some graphs (like I draw in the white board) to make it easier for reader. But the text itself already exceed 2 pages and I think there are still more details should be included...

Yes, please make drawings the central part of your easy-to-read docs, like:

Did you ever discover and read the current Dispersy documentation?

Instead of crawling, please use the term peer discovery. See this extended example of what people did 13 years ago. Not something we want to simply repeat, but good inspiration for future measurements and experiments. ToDo: animation with 8 peers being discovered, something similar to Gnutella example below.

With discovery effect of new nodes like this:

With discovery effect of new nodes like this:

Overlap with #2894 and BarterBrowser work from 2008 and ancient ticket #31.

Next steps the larger emulation, crawling live network, and NAT box setup by Remko.

Idea for future: integrate the peer discovery with the crawler of MultiChain records

@synctext crawling live network has been implemented: neighbor-discovery-with-crawler Every time it gets a introduction_response, it send crawl-request to the responder and will get multiple blocks.(according to the current protocol of multichain community, it gets all the blocks after a sequence_number specified in crawl-request)

the blocks will be stored in multi_chain table in BlockDatabase.db which is a sqlite database. The database also has a member table, which stores (identity, public key) pairs for all members it knows.

I realize that there is a trouble: in last meeting, we talk about the trusted-walker that prefer to walk to neighbors it has blocks with. But it is not easy to figure out whether a neighbor has your blocks (it may takes far more than 5 seconds to receive all blocks a neighbor sends you, I have no idea how many blocks a neighbor usually has, but it is possible that a neighbor has over tens of thousand of blocks, it will of course take more than 5 seconds to receive all of them, but we have to take a walk every 5 seconds)

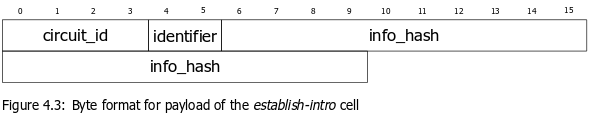

I use the built-in "bytefield" package of latex to draw the graph of message, here is the graph of introduction-request. Is that ok? If it is alright, I will put all the graphs (including crawl-request, crawl-response and crawl-resume) in the specification I wrote.

Haven't done with the gif yet, I will make it soon... soon after I find a software which can draw beautiful graphs...

The clean-slate-code can now discover transitive trust records!

Good progress, next step quality control.

walker__clean_code>python neighbor_discovery.py

neighbor discovery module started

received data from('95.211.155.131', 6428)

message id is:247

here is a missing-identity message

the global time is: 4

received data from('95.211.155.131', 6428)

message id is:245

here is a introduction-response

global time is:4You need to check incoming messages and do duplicate filtering or something. Code errors and uncaught exceptions like this should never happen..

Unhandled Error

Traceback (most recent call last):

File "neighbor_discovery.py", line 257, in on_crawl_response

self.database.add_block(block)

File "/home/pouwelse/GITHUB/walker__clean_code/database.py", line 204, in add_block

cursor.execute(script_add_block,data)

sqlite3.IntegrityError: UNIQUE constraint failed: multi_chain.hash_requesterFor reference, the R graph script: https://github.com/qstokkink/gumby/blob/live_edges/scripts/r/plot_trust.r

@synctext The prototype of trusted walker is finished, the idea is: 1.if A trust B (B used to up load something to A) 2.and B trust C, Then we have A trust C follow this idea, I construct a directed graph based on blocks. Nodes represent members and edges represent block (upload and download). As you can see, red nodes represent members that we don't trust, green nodes represent members that we trust... The problem is that... there is no red nodes at all! That means by going along the trusted chain, every member will be trusted node.

Maybe we should limited the amount of hops of trusted chain, e.g. 3 hops. Then if A trust B, B trust C, C trust D, D trust E. Then A trust B,C,D, but not E(4 hops)

Or we should create a trusted function basing on the number of hops, the idea is that the degree of trust degrades as the number of hops increase.

Now I have the graph-central documentation: walker protocol specification full-block crawler protocol specification

But I suddenly realize that the tribler has fully implemented half-block protocol, hence I had a painful redo for all crawler codes in a hurry, so... I haven't documented it yet. The crawler specification above is still for full-block protocol.

I plan to first implement the work flow of trust walker before making some nice gif graphs to prevent a painful redo again...

By the way I think there may be some mistakes in my trust chain. I doubt that some half-block has no "link public key" and that attribute is replaced by a placeholder (maybe 74 bytes of 0), and if that is true, most nodes in the graph will be linked to the node representing that placeholder, resulting in all nodes are trusted.

I need to talk with the author of half-block implementation first.

Thesis direction: the majority of the network is evil

Emulate a 1 million node network, passive non-walkers (use twisted, 5000 unique listen sockets per Python process, 200 Python processes?)

Emulate with corner cutting. So randomly generate the Trustchain records on the fly when needed, avoid generation at startup, do not parse database files. So keep in memory. keep same random seed, repeatable experiment.

Majority of this network is evil, lying, cheating, whitewashing, colluding, Sybil attacking with you

Majority is located in a single evil cluster (dishonest region), with various attack edges connected to the honest region.

1 active honest peers in the network

this honest peer does random walk across network+live edges + collects records

challenge is to offer a passive simulated network for this which provides correct message response

measure: byte usage per hour, record discovery speed, dishonest node discovery, "spam" record collection

experiment setup brainstorm: everybody evil, only 1-hop uploader "friends" are honest, friend+friends-of-friends honest; plus random (dis-)honest region and attackers edges.

Scientific research questions: find and test a random walk algorithm which avoid the dishonest region

comments:

already reach step_limit, stopping

Aborted (core dumped)@synctext I think this task is a little bit too broad, a strategy against Sybil attack itself is enough for a master thesis... let along strategies against whitewashing/colluding/cheating etc. Evil nodes committing different bad behaviors will lead to different scenario (let alone the combination of these bad behaviors...). In addition another major issue is: how many evil parties we have (evil nodes in different evil parties won't cooperate with each other). Different numbers of evil parties will of course bring in different scenario.

So... as you can see, there are too many scenarios to deal with, it is not a 2 months work (it may be enough for 20 months work,or even longer...)

My propose is: I make a "walker lab" (something like "NAT lab", but not so sophisticated, or it will consume too much time), it bases on some virtual machines (Ansible may make it). In walker lab, we can create a virtual neighbor network (in this case 1 million neighbors) and stores a random seed or topology of the network. we can reuse the topology to repeat the experiment.

In "walker lab", we can specify the ratios of evil nodes basing on parameters we give. We can also specify the behaviors of evil nodes (by giving evil nodes different scripts). The "walker lab" will generate multiple dishonest/honest nodes domain.

Then, I do a experiment using my walker in a specific scenario (e.g. 51% of nodes are evil, they use single Sybil attack strategy and they are in same evil party). And evaluate the performance and includes a discussion and open this issues to all readers, and... thesis done.

In short: I plan to make a easy to use tools to automatically generate a virtual neighbor network, and do a experiment using my worker in a specific scenario against specific kind of evil nodes, and then leave the rest of big problem (creating a walker against more challenging scenario) to future researchers (or some lucky students)...they would be happy to play with the "walker lab" and create lots of interesting walker :D

Compared with a super walker against all kinds of bad guys in the network, I think this propose is much more feasible...

First focus on a simple problem and key result:

Started from a clean-slate without my explicit request :-) CMCW: Cutting Many Corners Walker.. Re-Do Gumby light: light-weight Amazon

Emulate with corner cutting. So randomly generate the Trustchain records on the fly when needed, avoid generation at startup, do not parse database files. So keep in memory. keep same random seed, repeatable experiment.

Architecture for next meeting:

Currently all Dispersy communities have their own isolated walker. This is not very efficient. This issue aims to build upon the ongoing multichain work and create a trusted peer discovery mechnism with high-performance NAT puncturing.

Background reading (general):

Trusted peer discovery:

Technical docs: