Hi @VainF, just checking whether you have any update on this issue. Thanks!

Open nikhil153 opened 3 years ago

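This is not a bug in the code per se. Python's default recursion limit is 1000 frames (see sys.getrecursionlimit()), and it exists to guard against a stack overflow. The traceback shows 990 repeated calls rather than 1000 because the other frames already on the stack count toward the limit too.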

File "../../Torch-Pruning/torch_pruning/dependency.py", line 397, in _fix_denpendency_graph _fix_denpendency_graph(dep.broken_node, dep.handler, new_indices) [Previous line repeated 990 more times]

As you can see, the RecursionError was raised after 990 repeated calls of _fix_denpendency_graph.

You can try raising Python's recursion limit or lowering the pruning percentage. Pruning 50% of the smallest weights selects so many indices that the recursion runs past the limit.
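For anyone who wants to try the first workaround, here is a minimal sketch using only the standard library. The depth function is a hypothetical stand-in for a long chain of recursive calls, not anything from Torch-Pruning, and the extra headroom of 5000 frames is an arbitrary assumption.

```python
import sys


def depth(n):
    # Hypothetical stand-in for a deep recursive call chain.
    return 0 if n == 0 else 1 + depth(n - 1)


default_limit = sys.getrecursionlimit()  # usually 1000 in CPython

# With the default limit, recursing past it raises RecursionError.
try:
    depth(default_limit + 100)
    hit_limit = False
except RecursionError:
    hit_limit = True

# Raise the limit temporarily, run the deep call, then restore the old value.
sys.setrecursionlimit(default_limit + 5000)
try:
    deep_result = depth(default_limit + 100)
finally:
    sys.setrecursionlimit(default_limit)
```

Raising the limit only postpones the problem: a larger model can still exceed it, and on some Python versions a very high limit can crash the interpreter instead of raising a clean error.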

If you have a huge model or combination of models, then it throws a recursion error. Would it be possible to implement a dependency graph without recursion? @VainF

Hi @vinayak-sharan , thank you for your advice. I will try to re-implement it in the next version.

Hi everyone, a non-recursive implementation of the dependency graph has been uploaded. I will keep the issue open for further discussion!
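For readers wondering how a graph traversal avoids the recursion limit in general, here is a rough sketch that replaces the call stack with an explicit list. It is not the code that was uploaded to Torch-Pruning; the propagate function and the dict-of-lists graph format are assumptions made purely for illustration.

```python
def propagate(graph, start):
    """Visit every node reachable from `start` using an explicit stack.

    `graph` maps a node to the list of nodes that depend on it
    (a hypothetical format chosen for this example).
    """
    visited = set()
    order = []
    stack = [start]
    while stack:
        node = stack.pop()
        if node in visited:
            continue  # already handled, also protects against cycles
        visited.add(node)
        order.append(node)
        # Nodes without an entry in `graph` have no dependents.
        stack.extend(graph.get(node, ()))
    return order


# A chain of 100,000 dependent nodes, far deeper than the recursion limit.
chain = {i: [i + 1] for i in range(100_000)}
visited_order = propagate(chain, 0)

# A small graph with a cycle, to show the visited set terminates the walk.
cyclic = {"a": ["b", "c"], "b": ["c"], "c": ["a"]}
cyclic_order = propagate(cyclic, "a")
```

Because the pending nodes live in an ordinary list on the heap, the depth of the graph is bounded by available memory rather than by the interpreter's recursion limit.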

Hi - thanks for a wonderful tool. I am trying to test it out with a pretrained model from here. However, I am encountering the following error:

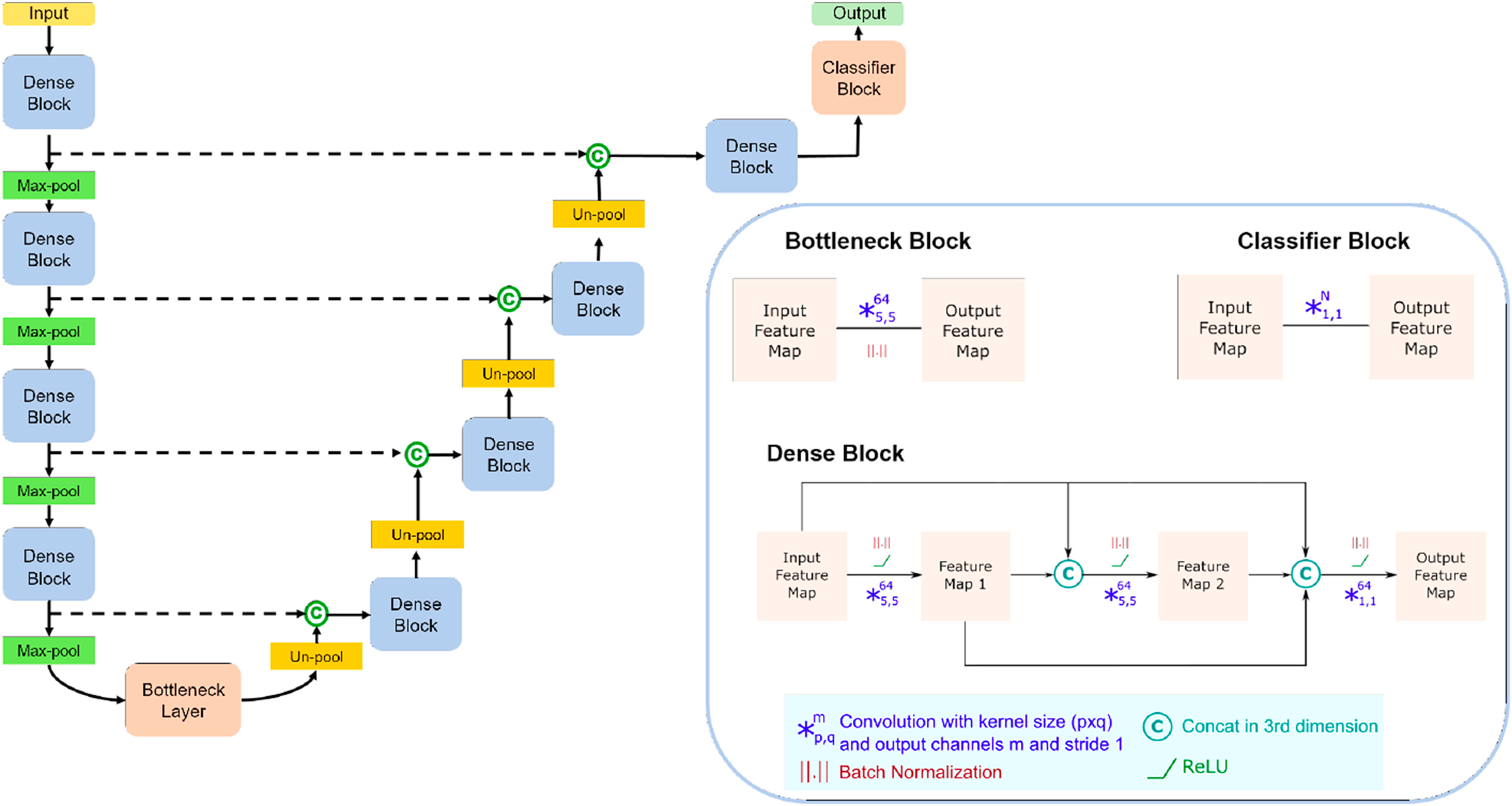

The network architecture is based on this paper. Here is a figure showing the details:

Below is my test script, which uses the model definition and pretrained weights from the model repo.

I will appreciate any help or suggestions! Thanks!